Event Stream Processing

Event stream processing (ESP) is the practice of taking action on a series of data points that originate from a system that continuously creates data. The term “event” refers to each data point in the system, and “stream” refers to the ongoing delivery of those events. A series of events can also be referred to as “streaming data” or “data streams.” Actions that are taken on those events include aggregations (e.g., calculations such as sum, mean, standard deviation), analytics (e.g., predicting a future event based on patterns in the data), transformations (e.g., changing a number into a date format), enrichment (e.g., combining the data point with other data sources to create more context and meaning), and ingestion (e.g., inserting the data into a database).
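As a minimal sketch of these actions, the Python below pushes a few sensor readings through a transformation, an enrichment, an aggregation, and an ingestion step, one event at a time. The event fields, the `SENSOR_LOCATIONS` lookup table, and the list standing in for a database are all assumptions made for illustration, not part of any particular product.

```python
from datetime import datetime, timezone

# Hypothetical reference data used for the enrichment step.
SENSOR_LOCATIONS = {"s1": "warehouse", "s2": "loading dock"}


def process(events):
    """Handle events one at a time: transform, enrich, aggregate, ingest."""
    database = []  # stand-in for a real database table
    total = 0.0
    count = 0
    for event in events:
        # Transformation: epoch seconds -> ISO 8601 timestamp string.
        ts = datetime.fromtimestamp(event["epoch"], tz=timezone.utc).isoformat()
        # Enrichment: add context from another data source.
        location = SENSOR_LOCATIONS.get(event["sensor"], "unknown")
        # Aggregation: running mean, updated with every event.
        total += event["value"]
        count += 1
        # Ingestion: store the processed record.
        database.append({
            "time": ts,
            "sensor": event["sensor"],
            "location": location,
            "value": event["value"],
            "running_mean": total / count,
        })
    return database


events = [
    {"epoch": 0, "sensor": "s1", "value": 10.0},
    {"epoch": 60, "sensor": "s2", "value": 20.0},
    {"epoch": 120, "sensor": "s3", "value": 30.0},
]
rows = process(events)
```

Each record in `rows` carries the converted timestamp, the looked-up location (or `"unknown"` when the sensor is not in the table), and the mean of all values seen up to that event.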

Event stream processing is often viewed as complementary to batch processing. Batch processing is about taking action on a large set of static data (“data at rest”), while event stream processing is about taking action on a constant flow of data (“data in motion”). Event stream processing is necessary for situations where action needs to be taken as soon as possible. This is why event stream processing environments are often described as “real-time processing.”
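The contrast can be sketched in a few lines of Python. The batch function needs the complete data set before it starts, while the streaming function produces an updated answer after every event. The function names and sample readings are illustrative assumptions.

```python
from statistics import mean


def batch_mean(values):
    """Batch style: the full data set is available before work starts."""
    return mean(values)


def streaming_means(values):
    """Stream style: yield an updated mean after every event arrives."""
    count = 0
    current = 0.0
    for value in values:
        count += 1
        # Incremental update; no history of earlier values is kept.
        current += (value - current) / count
        yield current


readings = [4.0, 8.0, 6.0, 2.0]
at_rest = batch_mean(readings)                 # one answer, at the end
in_motion = list(streaming_means(readings))    # one answer per event
```

Both approaches agree once all the data has been seen, but the streaming version has a usable result after the first event instead of only after the last.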

There are many related and synonymous terms pertaining to event stream processing. For example, “stream processing” is considered an equivalent term. “Complex event processing” (CEP) is also viewed as mostly synonymous, though it typically implies an older class of technologies, in contrast to the newer, high-speed technologies that are popular today. The term “message” is often used to refer to an event, and thus the entire system can be referred to as a “messaging system” (though that name is easily confused with the technologies that let end users chat with each other online).

How Does Event Stream Processing Work?

Event stream processing works by handling data one data point at a time. Rather than viewing data as a whole set, event stream processing deals with a flow of continuously created data. This requires a specialized set of technologies.

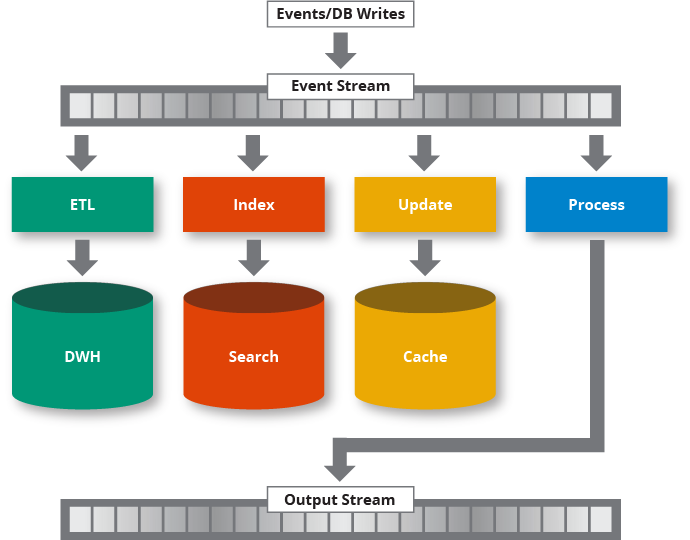

In an event stream processing environment, there are two main classes of technologies: 1) the system that stores the events, and 2) the technology that helps developers write applications that take action on the events. The former component pertains to data storage, and stores each event together with its timestamp. For example, you might capture the outside temperature every minute of the day and treat that as an event stream. Each “event” is the temperature measurement accompanied by the exact time of the measurement. This is often handled by a technology such as Apache Kafka.

The latter (known as “stream processors” or “stream processing engines”) is truly the “event stream processing” component and lets you take action on the incoming data. A variety of stream processors are available on the market today. Though most appear similar in capabilities, in-memory stream processors stand out because of their ability to process large amounts of streaming data very quickly. Hazelcast Jet, for example, reads and processes data in memory, making it especially useful for environments where extremely fast responsiveness is critical.
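To make the two roles concrete, here is a toy sketch in Python. `EventLog` plays the part of the event store and `hourly_averages` plays the part of the stream processor. Both are hypothetical stand-ins written for this example and do not reflect the API of Apache Kafka, Hazelcast Jet, or any other product. The readings are spaced 30 minutes apart rather than one minute apart to keep the sample short, and the Celsius unit is an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: int      # seconds since the start of the day
    temperature: float  # degrees Celsius (an assumed unit)


class EventLog:
    """Toy append-only event store; readers choose where to start reading."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1  # offset of the stored event

    def read_from(self, offset):
        return self._events[offset:]


def hourly_averages(events):
    """Toy stream processor: average temperature per one-hour window."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for event in events:
        hour = event.timestamp // 3600  # window the event falls into
        sums[hour] += event.temperature
        counts[hour] += 1
    return {hour: sums[hour] / counts[hour] for hour in sorted(sums)}


log = EventLog()
for ts, temp in [(0, 10.0), (1800, 12.0), (3600, 15.0), (5400, 17.0)]:
    log.append(Event(ts, temp))

averages = hourly_averages(log.read_from(0))       # process from the start
late_averages = hourly_averages(log.read_from(2))  # a reader that joins later
```

Keeping storage and processing separate means several processors can read the same stored events independently, each from its own position.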

Example Use Cases

Use cases such as payment processing, fraud detection, anomaly detection, predictive maintenance, and IoT analytics all rely on immediate action on data. All of these use cases deal with data points in a continuous stream, each associated with a specific point in time. These are classic event stream processing examples because the order and timing of the data points help with identifying patterns and trends that represent an important insight for users.
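As a rough illustration of why order and timing matter, the sketch below flags a reading as anomalous when it differs from the average of the immediately preceding readings by more than a fixed amount. The window size, the threshold, and the sample values are arbitrary assumptions. Production anomaly detection typically uses more robust statistics.

```python
from collections import deque


def detect_anomalies(values, window=3, threshold=10.0):
    """Yield (index, value) for readings far from the recent average."""
    recent = deque(maxlen=window)  # only the last `window` readings are kept
    for index, value in enumerate(values):
        if len(recent) == window:
            baseline = sum(recent) / window
            if abs(value - baseline) > threshold:
                yield index, value
        recent.append(value)


stream = [20.0, 21.0, 19.0, 20.0, 45.0, 20.0, 21.0]
anomalies = list(detect_anomalies(stream))
```

Here only the reading of 45.0 at index 4 is flagged. Shuffling the same values would change which readings count as "recent" and therefore what is reported.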

Event stream processing is also valuable when data granularity is critical. For example, the actual changes to a stock price are often more important to a trader than the stock price itself, and event stream processing lets you track all the changes along the way to make better trading decisions. The practice of change data capture (CDC), in which all individual changes to a database are tracked, is another event stream processing use case. In CDC, downstream systems can use the stream of individual updates to a database for purposes such as identifying usage patterns that can help define optimization strategies, as well as tracking changes for auditing requirements.
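The CDC idea can be sketched as follows: a downstream system consumes a stream of change events, keeps its own copy of the table up to date, records an audit trail, and counts changes per key as a simple usage pattern. The change-event format (`op`, `key`, `value`) is a hypothetical one chosen for this example and is not the format of any specific CDC tool.

```python
def apply_change(table, change):
    """Apply one change event to a downstream copy of the table."""
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        table[key] = change["value"]
    elif op == "delete":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


changes = [
    {"op": "insert", "key": "acct-1", "value": 100},
    {"op": "insert", "key": "acct-2", "value": 50},
    {"op": "update", "key": "acct-1", "value": 80},
    {"op": "delete", "key": "acct-2"},
]

replica = {}           # downstream copy of the table
audit_trail = []       # every change, in order, for auditing
changes_per_key = {}   # simple usage pattern: how often each key changes

for change in changes:
    apply_change(replica, change)
    audit_trail.append((change["op"], change["key"]))
    changes_per_key[change["key"]] = changes_per_key.get(change["key"], 0) + 1
```

The final state of `replica` shows only that `acct-1` holds 80. The audit trail and the per-key counts preserve the history that the final state alone would lose.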