Micro-Batch Processing

Micro-batch processing is the practice of collecting data in small groups (“batches”) and then acting on (processing) each group as a unit. Contrast this with traditional “batch processing,” which usually implies acting on a large group of data. Micro-batch processing is a variant of traditional batch processing in which processing occurs more frequently, so each run handles a smaller group of new data. In both micro-batch processing and traditional batch processing, data is collected until a predetermined threshold or interval is reached before any processing occurs.

In the traditional world of data, workloads were predominantly batch-oriented. That is, we collected data in groups, then cleansed and transformed the data before loading it to a repository such as a data warehouse to feed canned reports on a daily, weekly, monthly, quarterly, and annual basis. This cycle was sufficient when most companies predominantly interacted with customers in the physical world.

As the world has become more digital and “always-on,” and our interactions continuously generate vast volumes of data, enterprises have adopted new technological approaches to build and maintain a competitive advantage by driving more immediate, personalized interactions. These approaches were first micro-batch processing and then stream processing.

How does micro-batch processing work?

Micro-batch processing accelerated the cycle so data could be loaded much more frequently, sometimes in increments as small as seconds. Micro-batch loading technologies include Fluentd, Logstash, and Apache Spark Streaming.

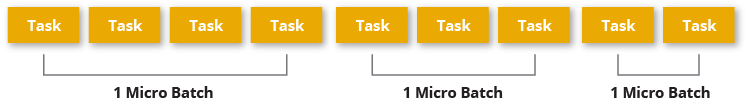

Micro-batch processing is very similar to traditional batch processing in that data is usually processed as a group. The primary difference is that the batches are smaller and processed more often. A micro-batch may be triggered on a fixed schedule: for example, you could load all new data every two minutes (or every two seconds, depending on the processing horsepower available). Alternatively, a micro-batch may be triggered by an event flag or threshold, such as the buffered data exceeding 2 megabytes or containing more than a dozen records.
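The two kinds of trigger described above (a time interval, or a size or count threshold) can be sketched in a few lines of Python. This is a minimal illustration only, not how Fluentd, Logstash, or Spark Streaming implement batching; the `MicroBatcher` class, its parameter names, and the default thresholds are hypothetical and chosen simply to mirror the examples in the text.

```python
import time
from typing import Callable, List, Optional


class MicroBatcher:
    """Hypothetical micro-batcher: buffers records, flushes them as a group."""

    def __init__(
        self,
        process: Callable[[List[bytes]], None],
        max_records: int = 12,                  # assumed count threshold
        max_bytes: int = 2 * 1024 * 1024,       # assumed size threshold (2 MB)
        max_age_seconds: float = 120.0,         # assumed interval (two minutes)
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._process = process
        self._max_records = max_records
        self._max_bytes = max_bytes
        self._max_age = max_age_seconds
        self._clock = clock
        self._buffer: List[bytes] = []
        self._buffered_bytes = 0
        self._opened_at: Optional[float] = None

    def add(self, record: bytes) -> None:
        """Buffer one record; flush if a count or size threshold is reached."""
        if not self._buffer:
            # The age of a batch is measured from its first record.
            self._opened_at = self._clock()
        self._buffer.append(record)
        self._buffered_bytes += len(record)
        if (len(self._buffer) >= self._max_records
                or self._buffered_bytes >= self._max_bytes):
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes once the open batch is old enough."""
        if self._buffer and self._clock() - self._opened_at >= self._max_age:
            self.flush()

    def flush(self) -> None:
        """Hand the buffered records to the processor as one batch."""
        if not self._buffer:
            return
        batch, self._buffer = self._buffer, []
        self._buffered_bytes = 0
        self._opened_at = None
        self._process(batch)
```

In this sketch nothing is processed until one of the triggers fires, which is the defining property of micro-batching: a record that arrives just after a flush waits in the buffer until the next threshold or interval is reached.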

Though it is not true real-time processing, micro-batch processing initially sufficed to support some “real-time” use cases in which data does not need to be up-to-the-moment accurate. For example, you may have corporate dashboards that refresh every 15 minutes or hourly. Or you may collect server logs in small regular intervals for historical record-keeping rather than for true real-time use cases such as intrusion detection.

Micro-batch processing vs stream processing

The world has accelerated, and there are many use cases for which micro-batch processing is simply not fast enough. Organizations now typically only use micro-batch processing in their applications if they have made architectural decisions that preclude stream processing. For example, an Apache Spark shop may use Spark Streaming, which is – despite its name and use of in-memory compute resources – actually a micro-batch processing extension of the Spark API.

Now, stream processing technologies are becoming the go-to choice for modern applications. As data has accelerated throughout the past decade, enterprises have turned to real-time processing so they can respond to data closer to the time at which it is created, across a variety of use cases and applications. In some cases, micro-batch processing was “real-time enough,” but organizations have increasingly recognized that stream processing that leverages in-memory technology, either in the cloud or on-premises, is the ideal solution.

Stream processing allows applications to respond to new data events at the moment they are created. Rather than grouping data and collecting it at some predetermined interval, stream processing systems collect and process each piece of data immediately as it is generated.

Stream Processing Example Use Cases

Even the most aggressive developers measure the performance of micro-batch processing technologies in tens of milliseconds at best (for example, Spark Streaming's tuning guidance recommends a block interval of no less than about 50 milliseconds because of task-launching overhead, and batch intervals are typically longer), whereas developers measure the performance of stream processing in single-digit milliseconds. For example, in a blog post on streaming technology for retail banks, the author explains how a major credit card processor executes a core data collection and processing workflow for fraud detection in 7 milliseconds.

Applications that incorporate stream processing can respond to new data immediately as it is generated rather than after data crosses a predefined threshold. This is a critical capability for use cases such as payment processing, fraud detection, IoT anomaly detection, security, alerting, real-time marketing, and recommendations. If your use case either includes data that is most valuable at the time it is created or requires a response (i.e. data collection and processing) at the time the data is generated, stream processing is right for you.

Consider what happens when you swipe your credit card at the checkout line in your local grocery store. Your expectation, or at least your hope, is that the payment processing system will immediately confirm that the card has not been reported lost or stolen and then authorize the transaction, allowing for the fact that most of the elapsed time is spent with the data in transit across a network and back. This requires stream processing. Now imagine that you are the last customer in the store and you swipe your card, but the payment system is built on a micro-batch processing system with batches set to process only when there are 10 new transactions. You would wait longer than necessary, because your transaction would sit in the buffer until enough other transactions arrived to fill the batch.
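The checkout scenario can be made concrete with a small simulation. The sketch below is illustrative only: the function names and the arrival times are made up, arrival times are assumed to be sorted, and processing and network time are ignored so that only the time spent waiting in the batch buffer is counted.

```python
from typing import List, Optional


def micro_batch_waits(
    arrival_times: List[float], batch_size: int
) -> List[Optional[float]]:
    """Seconds each transaction waits in the buffer before its batch runs.

    Assumes a count-only trigger: a batch is released at the moment its
    last (batch_size-th) transaction arrives. Transactions in a batch that
    never fills get None, meaning they wait indefinitely unless some other
    trigger (for example, a timeout) flushes them.
    """
    waits: List[Optional[float]] = []
    for start in range(0, len(arrival_times), batch_size):
        batch = arrival_times[start:start + batch_size]
        if len(batch) < batch_size:
            waits.extend([None] * len(batch))
        else:
            released_at = batch[-1]
            waits.extend(released_at - t for t in batch)
    return waits


def stream_waits(arrival_times: List[float]) -> List[float]:
    """With per-event processing, no transaction waits for a batch to fill."""
    return [0.0 for _ in arrival_times]


# Hypothetical arrival times in seconds; the last customer arrives alone.
arrivals = [0.0, 5.0, 9.0, 14.0, 20.0, 26.0, 31.0, 37.0, 44.0, 50.0, 600.0]
waits = micro_batch_waits(arrivals, batch_size=10)
print(waits[0])    # 50.0 (first customer waits for nine more swipes)
print(waits[-1])   # None (last customer's batch never fills)
print(stream_waits(arrivals)[-1])  # 0.0
```

A real micro-batch system would normally pair the count threshold with a time-based trigger so that a partial batch is eventually flushed, but the last customer would still wait for that timer rather than being served immediately.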