
What is Stream Processing?

Stream processing is the practice of taking action on a series of data at the time the data is created. Historically, data practitioners used “real-time processing” to talk generally about data processed as frequently as necessary for a particular use case. But with the advent and adoption of stream processing technologies and frameworks, coupled with decreasing prices for RAM, the term “stream processing” is now used in this more specific sense.

Stream processing often entails multiple tasks on the incoming series of data (the “data stream”), which can be performed serially, in parallel, or both. This workflow is referred to as a stream processing pipeline, which includes the generation of the streaming data, the processing of the data, and the delivery of the data to a final location.
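To make the three stages concrete, here is a minimal single-process sketch in Python that chains generators, so each stage handles one event at a time as the previous stage produces it. The stage names and the Celsius-to-Fahrenheit transformation are hypothetical illustrations. A production engine would run stages serially or in parallel across a cluster rather than in one loop.

```python
from typing import Iterable, Iterator


def source(readings: Iterable[float]) -> Iterator[dict]:
    """Generation stage: wrap each raw reading in an event as it 'arrives'."""
    for seq, value in enumerate(readings):
        yield {"seq": seq, "value": value}


def to_fahrenheit(events: Iterable[dict]) -> Iterator[dict]:
    """Processing stage: transform each event as soon as it is received."""
    for event in events:
        yield {**event, "value_f": event["value"] * 9 / 5 + 32}


def sink(events: Iterable[dict], destination: list) -> None:
    """Delivery stage: append each processed event to its final location."""
    for event in events:
        destination.append(event)


delivered: list = []
sink(to_fahrenheit(source([20.0, 25.0, 30.0])), delivered)
print([e["value_f"] for e in delivered])  # [68.0, 77.0, 86.0]
```

Because each stage is a generator, no stage waits for the whole input. The first event is delivered before the second is even generated.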


Actions that stream processing takes on data include aggregations (e.g., calculations such as sum, mean, and standard deviation), analytics (e.g., predicting a future event based on patterns in the data), transformations (e.g., changing a number into a date format), enrichment (e.g., combining the data point with other data sources to create more context and meaning), and ingestion (e.g., inserting the data into a database).
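Several of these actions can be applied to the same event in one pass. The sketch below uses only Python's standard library and combines four of them:

- **Transformation:** a Unix timestamp is converted to a date.
- **Enrichment:** a hypothetical `STORES` lookup table adds context.
- **Aggregation:** a running mean and standard deviation are computed with Welford's online algorithm. This is a swapped-in technique, chosen because it needs no stored history.
- **Ingestion:** each record is inserted into an in-memory SQLite table.

All names and data are illustrative.

```python
import math
import sqlite3
from datetime import datetime, timezone

# Hypothetical reference data used for enrichment.
STORES = {"s1": "Berlin", "s2": "Lisbon"}


class RunningStats:
    """Aggregation: running count, mean, and sample standard deviation
    (Welford's method), updated one event at a time without storing events."""

    def __init__(self) -> None:
        self.n, self.mean, self._m2 = 0, 0.0, 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def stdev(self) -> float:
        # Sample standard deviation; reported as 0.0 for fewer than two values.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0


def process(event: dict, stats: RunningStats, db: sqlite3.Connection) -> dict:
    # Transformation: turn a Unix timestamp (a number) into an ISO date string.
    day = datetime.fromtimestamp(event["ts"], tz=timezone.utc).date().isoformat()
    # Enrichment: add context from another data source.
    city = STORES.get(event["store"], "unknown")
    # Aggregation: fold the amount into the running statistics.
    stats.update(event["amount"])
    # Ingestion: insert the enriched record into a database.
    db.execute("INSERT INTO sales VALUES (?, ?, ?)", (day, city, event["amount"]))
    return {"day": day, "city": city, "amount": event["amount"]}


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sales (day TEXT, city TEXT, amount REAL)")
stats = RunningStats()
for e in [{"ts": 0, "store": "s1", "amount": 10.0},
          {"ts": 86400, "store": "s2", "amount": 20.0},
          {"ts": 172800, "store": "s9", "amount": 30.0}]:
    print(process(e, stats, db))
print(stats.n, stats.mean, stats.stdev)  # 3 20.0 10.0
```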

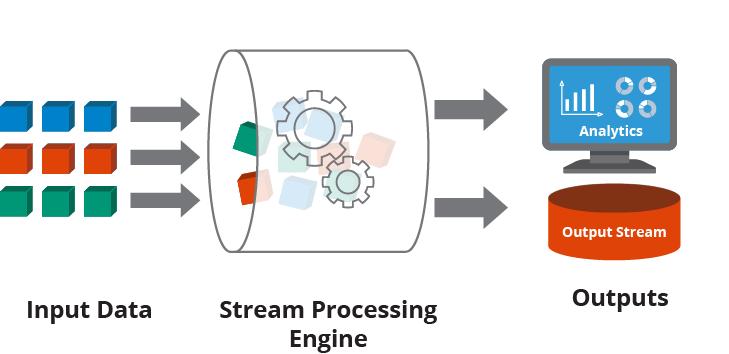

Stream processing allows applications to respond to new data events at the moment they occur. In this simplified example, the stream processing engine processes the input data stream in real time. The output data is delivered to a streaming analytics application and added to the output stream.

Stream Processing Architectures

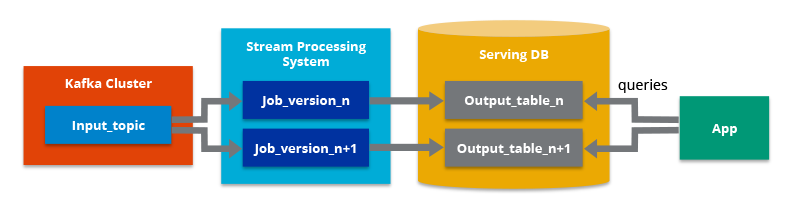

Kappa Architecture

Kappa Architecture simplifies data processing by handling both batch and real-time analytics with a single stream processing pipeline. Data enters a central, append-only log such as Apache Kafka and is converted into a format that can be fed directly into an analytics database. When historical data must be reprocessed, the same pipeline replays the log from the beginning. This unified approach removes the need to maintain separate batch and streaming code paths, which reduces complexity and lets you analyze data more quickly.
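The core Kappa idea is one pipeline over one replayable log. The sketch below models it under simplifying assumptions:

- A plain Python list stands in for Apache Kafka.
- List indexes stand in for offsets.
- The functions `run`, `by_page`, and `by_user` are hypothetical and are not Kafka's API.

```python
from collections import Counter
from typing import Callable, List

# A plain list stands in for a central, append-only log such as a Kafka topic;
# an event's list index plays the role of its offset.
log: List[dict] = []


def run(process: Callable[[dict], str], view: Counter, from_offset: int) -> int:
    """Consume the log from `from_offset`, update `view`, return the next offset."""
    offset = from_offset
    while offset < len(log):
        view[process(log[offset])] += 1
        offset += 1
    return offset


def by_page(event: dict) -> str:  # version 1 of the pipeline logic
    return event["page"]


def by_user(event: dict) -> str:  # version 2, introduced later
    return event["user"]


# Real-time path: one job keeps up with the log as events are appended.
views_by_page: Counter = Counter()
log.extend([{"page": "/home", "user": "a"}, {"page": "/cart", "user": "b"}])
next_offset = run(by_page, views_by_page, 0)
log.append({"page": "/home", "user": "c"})
next_offset = run(by_page, views_by_page, next_offset)

# Historical path: no separate batch system. Replay the same log from
# offset 0 through the new logic to rebuild the history.
views_by_user: Counter = Counter()
run(by_user, views_by_user, 0)
print(views_by_page, views_by_user)
```

Reprocessing builds `views_by_user` as a new view while `views_by_page` keeps serving. This mirrors how a Kappa deployment can switch to a rebuilt view once it has caught up with the log.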

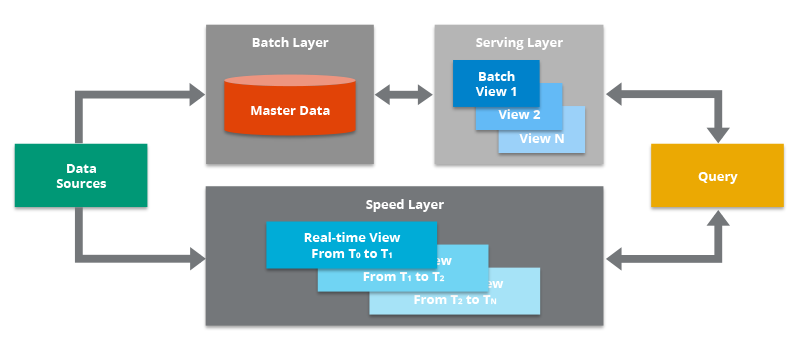

Lambda Architecture

Lambda Architecture is a data processing methodology that blends real-time stream processing for instant insights with conventional batch processing for historical analysis. With this combination, enterprises can see all aspects of their data, from quick changes to long-term patterns. The fundamental elements of Lambda Architecture are a batch pipeline for historical data analysis, a streaming pipeline for real-time data acquisition and processing, and a serving layer that answers queries with low latency.
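The three Lambda layers can be shown with a toy model, assuming a simple per-product count as the query:

- New events go to both an append-only master dataset and an incremental speed view.
- The batch pipeline periodically recomputes its view from all history.
- The serving layer merges the two views.

All names are hypothetical. The sketch also assumes no events arrive while the batch job runs, which real systems must handle explicitly.

```python
from collections import Counter
from typing import List

master_dataset: List[dict] = []  # immutable, append-only history (batch input)
batch_view: Counter = Counter()  # rebuilt from scratch by the batch pipeline
speed_view: Counter = Counter()  # updated incrementally by the streaming pipeline


def ingest(event: dict) -> None:
    """Send each new event to both pipelines."""
    master_dataset.append(event)
    speed_view[event["product"]] += event["qty"]  # real-time, incremental


def run_batch() -> None:
    """Batch pipeline: recompute over all history, then reset the speed view,
    whose events are now covered by the batch view. (Simplified: assumes no
    events arrive while this runs.)"""
    batch_view.clear()
    for e in master_dataset:
        batch_view[e["product"]] += e["qty"]
    speed_view.clear()


def query(product: str) -> int:
    """Serving layer: merge the historical and real-time views."""
    return batch_view[product] + speed_view[product]


ingest({"product": "book", "qty": 2})
run_batch()                            # batch view now covers the first event
ingest({"product": "book", "qty": 3})  # only the speed view has seen this one
print(query("book"))  # 5
```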

Stream Processing vs. Batch Processing

Historically, data was typically processed in batches based on a schedule or predefined threshold (e.g., every night at 1 am, every hundred rows, or every time the volume reached two megabytes). But the pace of data has accelerated, and volumes have ballooned. There are many use cases for which batch processing doesn’t cut it.

Stream processing has become a must-have for modern applications. Enterprises have turned to technologies that respond to data as it is created for various use cases and applications, examples of which we’ll cover below.

The key difference is timing. Batch processing groups data and collects it at some predetermined interval. Stream processing applications instead collect and process data immediately as it is generated, so they can respond to new data events at the moment they occur.
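The difference in timing can be sketched with two hypothetical processors fed the same four events:

- **Batch:** waits for a size threshold before processing. This stands in for “every hundred rows,” shrunk to three for readability.
- **Stream:** handles each event as soon as it arrives.

```python
from typing import Callable, List


class BatchProcessor:
    """Buffers events and processes them only when a size threshold is reached."""

    def __init__(self, threshold: int, handle: Callable[[List[int]], None]) -> None:
        self.threshold, self.handle, self.buffer = threshold, handle, []

    def on_event(self, event: int) -> None:
        self.buffer.append(event)
        if len(self.buffer) >= self.threshold:
            self.handle(self.buffer)
            self.buffer = []  # start a fresh batch


class StreamProcessor:
    """Processes every event immediately as it arrives."""

    def __init__(self, handle: Callable[[List[int]], None]) -> None:
        self.handle = handle

    def on_event(self, event: int) -> None:
        self.handle([event])


batch_out: List[List[int]] = []
stream_out: List[List[int]] = []
batch = BatchProcessor(threshold=3, handle=batch_out.append)
stream = StreamProcessor(handle=stream_out.append)
for event in range(1, 5):
    batch.on_event(event)
    stream.on_event(event)
print(batch_out)   # [[1, 2, 3]]  (event 4 is still waiting in the buffer)
print(stream_out)  # [[1], [2], [3], [4]]
```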

How Does It Work?

Stream processing is often applied to data generated as a series of events, such as data from IoT sensors, payment processing systems, and server and application logs. Common paradigms include publisher/subscriber (commonly referred to as pub/sub) and source/sink. Data and events are generated by a publisher or source and delivered to a stream processing application, where the data may be augmented, tested against fraud detection algorithms, or otherwise transformed before the application sends the result to a subscriber or sink. On the technical side, common sources and sinks include Apache Kafka®, big data repositories such as Hadoop, TCP sockets, and in-memory data grids.
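The pub/sub flow described above can be modeled in memory:

- A publisher emits payments to a topic.
- A stream processing function subscribed to that topic augments each payment and applies a placeholder rule.
- A sink subscribes to the results.

The `Broker` class, topic names, and the 1,000 threshold are hypothetical. A real deployment would use a system such as Apache Kafka rather than in-process callbacks.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class Broker:
    """Toy in-memory pub/sub broker: each topic maps to its subscriber callbacks."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self.subscribers[topic]:
            handler(event)


broker = Broker()
sink_records: List[dict] = []


def fraud_check(payment: dict) -> None:
    # Stream processing application: augment each payment with a flag from a
    # placeholder rule (a stand-in for a real fraud detection algorithm).
    flagged = payment["amount"] > 1000
    broker.publish("checked-payments", {**payment, "flagged": flagged})


broker.subscribe("payments", fraud_check)                # app reads the source topic
broker.subscribe("checked-payments", sink_records.append)  # sink reads the results

broker.publish("payments", {"id": 1, "amount": 25.0})    # publisher / source
broker.publish("payments", {"id": 2, "amount": 4999.0})
print(sink_records)
```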

Stream Processing in Action

Stream processing use cases typically involve event data that is generated by some action and upon which some action should immediately occur. Common use cases for real-time stream processing include:

- Real-time fraud and anomaly detection. One of the world’s largest credit card providers has been able to reduce its fraud write-downs by $800M per year, thanks to fraud and anomaly detection powered by stream processing. Credit card processing delays are detrimental to the experience of both the end customer and the store attempting to process the credit card (and any other customers in line). Historically, credit card providers performed their time-consuming fraud detection processes in a batch manner post-transaction. With stream processing, as soon as you swipe your card, they can run more thorough algorithms to recognize and block fraudulent charges and trigger alerts for anomalous charges that merit additional inspection without making their (non-fraudulent) customers wait.

- Internet of Things (IoT) edge analytics. Companies in manufacturing, oil and gas, and transportation, as well as those architecting smart cities and smart buildings, leverage stream processing to keep up with data from billions of “things.” One example of IoT data analysis is detecting anomalies in manufacturing that indicate problems that need to be fixed to improve operations and increase yields. With real-time stream processing, a manufacturer may recognize that a production line is turning out too many anomalies as it happens, rather than finding an entire bad batch after the day’s shift. By pausing the line for immediate repairs, they can realize huge savings and prevent massive waste.

- Real-time personalization, marketing, and advertising. With real-time stream processing, companies can deliver personalized, contextual customer experiences. This can include a discount for something you added to a cart on a website but didn’t immediately purchase, a recommendation to connect with a just-registered friend on a social media site, or an advertisement for a product similar to the one you just viewed.
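The fraud and IoT examples both come down to spotting a value that deviates sharply from recent behavior. The sketch below rests on these assumptions:

- It uses a simple rolling z-score rule. This is a swapped-in textbook technique, far simpler than the algorithms a card issuer or manufacturer would actually use.
- The function name, window size, and threshold `k` are hypothetical.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(values: Iterable[float], window: int = 5,
                     k: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for each reading more than k population standard
    deviations away from the mean of the preceding `window` readings."""
    recent: Deque[float] = deque(maxlen=window)  # sliding window of past values
    for i, x in enumerate(values):
        if len(recent) == window:
            mean = statistics.fmean(recent)
            stdev = statistics.pstdev(recent)
            # Skip flat windows (stdev 0) to avoid flagging every small change.
            if stdev > 0 and abs(x - mean) > k * stdev:
                yield i, x
        recent.append(x)  # flagged values also enter the window


readings = [10.0, 11.0, 10.0, 12.0, 11.0, 10.0, 50.0, 11.0]
print(list(detect_anomalies(readings)))  # [(6, 50.0)]
```

Because it is a generator, the detector flags each reading as it arrives instead of after the whole series is collected. Whether flagged values should enter the window is a design choice. This sketch includes them, which temporarily widens the band of "normal" values after a spike.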

The Future of Stream Processing

As the demand for real-time insights intensifies, stream processing emerges as a critical tool for organizations navigating the data-driven landscape. Its ability to analyze information as it arrives, coupled with advancements in AI and machine learning, promises significant improvements in data-driven decision-making. Stream processing is not simply a fleeting trend but a foundational technology shaping the future of how we understand and utilize data.