What Is Lambda Architecture?

The Lambda Architecture is a deployment model for data processing that combines a traditional batch pipeline with a fast, real-time stream pipeline for data access. It has become a common model in IT and development organizations as businesses strive to become more data-driven and event-driven in the face of massive volumes of rapidly generated data, often referred to as “big data.”

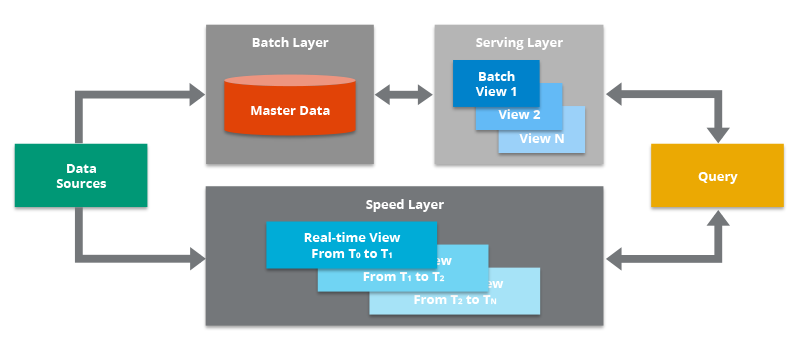

In the diagram above, you can see the main components of the Lambda Architecture:

Data Sources. Data can be obtained from a variety of sources, which can then be included in the Lambda Architecture for analysis. This component is oftentimes a streaming source like Apache Kafka, which is not the original data source per se, but is an intermediary store that can hold data in order to serve both the batch layer and the speed layer of the Lambda Architecture. The data is delivered simultaneously to both the batch layer and the speed layer to enable a parallel indexing effort.

Batch Layer. This component saves all data coming into the system as batch views in preparation for indexing. The input data is saved in a model that looks like a series of changes/updates that were made to a system of record, similar to the output of a change data capture (CDC) system. Oftentimes this is simply a file in the comma-separated values (CSV) format. The data is treated as immutable and append-only to ensure a trusted historical record of all incoming data. A technology like Apache Hadoop is often used as a system for ingesting the data as well as storing the data in a cost-effective way.

Serving Layer. This layer incrementally indexes the latest batch views to make them queryable by end users. It can also reindex all data to fix a coding bug or to create different indexes for different use cases. The key requirement in the serving layer is that the processing is highly parallelized to minimize the time it takes to index the data set. While an indexing job is running, newly arriving data is queued up for the next indexing job.

Speed Layer. This layer complements the serving layer by indexing the most recently added data not yet fully indexed by the serving layer. This includes the data that the serving layer is currently indexing as well as new data that arrived after the current indexing job started. Since there is an expected lag between the time the latest data was added to the system and the time the latest data is available for querying (due to the time it takes to perform the batch indexing work), it is up to the speed layer to index the latest data to narrow this gap.

This layer typically leverages stream processing software to index the incoming data in near real-time to minimize the latency of getting the data available for querying. When the Lambda Architecture was first introduced, Apache Storm was a leading stream processing engine used in deployments, but other technologies have since gained more popularity as candidates for this component (like Hazelcast Jet, Apache Flink, and Apache Spark Streaming).

Query. This component is responsible for submitting end user queries to both the serving layer and the speed layer and consolidating the results. This gives end users a complete view of all data, including the most recently added data, to provide a near real-time analytics system.
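The way the components above fit together can be sketched in a few lines of Python. This is a minimal, in-memory illustration rather than a real deployment: the event shape, the page-view counting use case, and the names `build_batch_view`, `update_speed_view`, and `query` are all assumptions made for this example.

```python
from collections import Counter

# Hypothetical events: (event_id, page). A page-view count is the "index".
master_log = [
    (1, "home"), (2, "pricing"), (3, "home"),  # covered by the last batch run
    (4, "home"), (5, "docs"),                  # arrived after that run started
]


def build_batch_view(events):
    """Batch/serving layers: recompute a complete view from the stored events."""
    return Counter(page for _, page in events)


def update_speed_view(speed_view, event):
    """Speed layer: fold one newly arrived event into a small, recent view."""
    _, page = event
    speed_view[page] += 1
    return speed_view


def query(page, batch_view, speed_view):
    """Query component: ask both views and consolidate the results."""
    return batch_view.get(page, 0) + speed_view.get(page, 0)


# The batch view covers the first three events; the speed view covers the rest.
batch_view = build_batch_view(master_log[:3])
speed_view = Counter()
for event in master_log[3:]:
    update_speed_view(speed_view, event)

print(query("home", batch_view, speed_view))  # 3
```

In this sketch, neither view alone answers the query correctly: the batch view is missing the two newest events, and the speed view holds only those two. The consolidated result covers all five.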

How Does the Lambda Architecture Work?

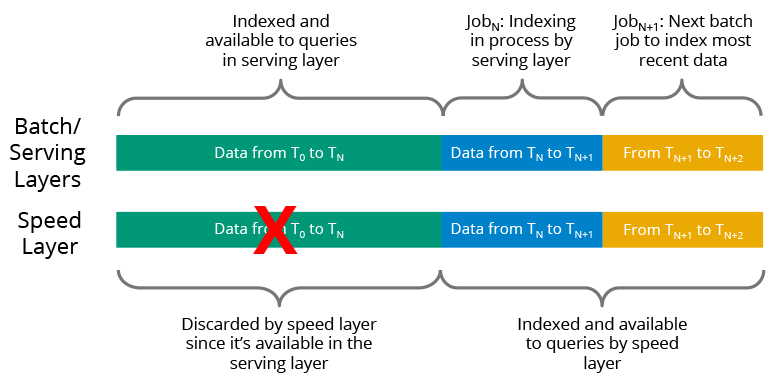

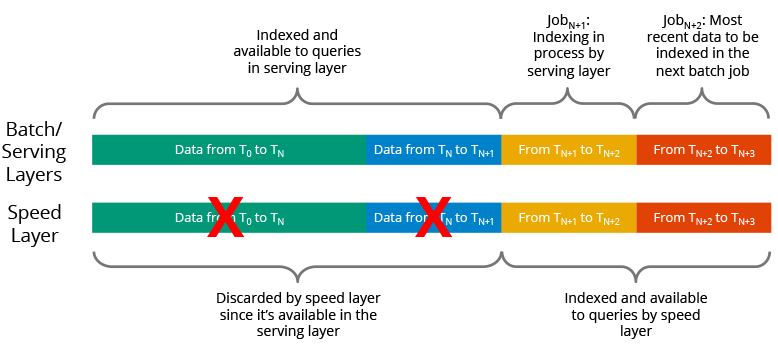

The batch/serving layers continue to index incoming data in batches. Since the batch indexing takes time, the speed layer complements the batch/serving layers by indexing all the new, unindexed data in near real-time. This gives you a large and consistent view of data in the batch/serving layers that can be recreated at any time, along with a smaller index that contains the most recent data.

Once a batch indexing job completes, the newly batch-indexed data is available for querying, so the speed layer’s copy of the same data and indexes is no longer needed and is deleted from the speed layer. The serving layer then begins indexing the data that has arrived since its last job started. That data has already been indexed by the speed layer, so it remains available for querying while the batch job runs. This ongoing hand-off between the speed layer and the batch/serving layers ensures that all data is ready for querying and that the latency for data availability is low.
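The hand-off described above can be sketched as follows. This is a simplified, single-threaded illustration: the `LambdaViews` class, its attributes, and the use of log offsets to decide which speed-layer entries to discard are assumptions for this example. In a real deployment the batch job takes time and new data keeps arriving while it runs.

```python
class LambdaViews:
    """Toy model of the hand-off between the speed layer and the batch/serving layers."""

    def __init__(self):
        self.log = []           # append-only master data set
        self.batch_view = {}    # key -> count, rebuilt by each batch run
        self.speed_events = []  # (offset, key) pairs not yet covered by a batch run

    def ingest(self, key):
        """New data goes to the master data set and to the speed layer."""
        self.log.append(key)
        self.speed_events.append((len(self.log), key))

    def run_batch(self):
        """Rebuild the batch view, then drop speed-layer entries it now covers."""
        snapshot_offset = len(self.log)
        view = {}
        for key in self.log[:snapshot_offset]:
            view[key] = view.get(key, 0) + 1
        self.batch_view = view
        # Hand-off: keep only the events that arrived after the snapshot.
        self.speed_events = [
            (offset, key) for offset, key in self.speed_events
            if offset > snapshot_offset
        ]

    def query(self, key):
        """Consolidate the batch view with the not-yet-batched recent events."""
        recent = sum(1 for _, k in self.speed_events if k == key)
        return self.batch_view.get(key, 0) + recent


views = LambdaViews()
for key in ["a", "b", "a"]:
    views.ingest(key)
before_batch = views.query("a")  # answered entirely by the speed layer

views.run_batch()                # speed-layer copies of the first three events are dropped
views.ingest("a")                # new data lands in the speed layer again
after_batch = views.query("a")   # batch view plus one recent event
```

The query result stays correct on both sides of the batch run. What changes is which layer supplies each part of the answer.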

What Are the Benefits of the Lambda Architecture?

The Lambda Architecture attempts to balance concerns around latency, data consistency, scalability, fault tolerance, and human fault tolerance. Let’s look at each of these elements.

Latency. Raw data is indexed in the serving layer so that end users can query and analyze all historical data. Since batch indexing takes a bit of time, there tends to be a relatively large time window of data that is temporarily not available to end users for analysis. The speed layer uses stream processing technologies to immediately index recent data that is currently not queryable in the batch/serving layers, thus narrowing the time window of unanalyzable data. This helps to reduce the latency (i.e., the wait time for making data available for analysis) that is inherent in the batch/serving layers.

Data consistency. One key idea behind the Lambda Architecture is that it aims to eliminate the risk of data inconsistency that is often seen in distributed systems. In a distributed database where data might not be delivered to all replicas due to node or network failures, there is a chance of inconsistent data. In other words, one copy of the data might reflect the up-to-date value, but another copy might still have the previous value. In the Lambda Architecture, since the data is processed sequentially (and not in parallel with overlap, which may be the case for operations on a distributed database), the indexing process can ensure the data reflects the latest state in both the batch and speed layers.

Scalability. The Lambda Architecture does not specify the exact technologies to use, but is based on distributed, scale-out technologies that can be expanded by simply adding more nodes. This can be done at the data source, in the batch layer, in the serving layer, and in the speed layer. This lets you use the Lambda Architecture no matter how much data you need to process.

Fault tolerance. As above, the Lambda Architecture is based on distributed systems that support fault tolerance, so should a hardware failure occur, other nodes are available to continue the workload. In addition, since all data is stored in the batch layer, any failures during indexing either in the serving layer or the speed layer can be overcome by simply rerunning the indexing job at the batch/serving layers, and letting the speed layer continue indexing the most recent data.

Human fault tolerance. Since raw data is saved for indexing, it acts as a system of record for your analyzable data, and all indexes can be recreated from this data set. This means that if there are any bugs in the indexing code or any omissions, the code can be updated and then rerun to reindex all data.
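To illustrate human fault tolerance, the sketch below rebuilds an index from unchanged raw data after an indexing bug is fixed. The CSV contents, the column names, and the two indexing functions are hypothetical, chosen only to show the idea.

```python
import csv
import io

# Hypothetical raw data kept by the batch layer; it is never modified.
RAW_LOG = """user,amount
alice,10
bob,5
alice,7
"""


def read_raw(raw_text):
    """Parse the stored raw records into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def index_buggy(rows):
    """Original indexing code with a bug: it overwrites totals instead of summing."""
    totals = {}
    for row in rows:
        totals[row["user"]] = int(row["amount"])
    return totals


def index_fixed(rows):
    """Corrected indexing code: accumulates the amount per user."""
    totals = {}
    for row in rows:
        totals[row["user"]] = totals.get(row["user"], 0) + int(row["amount"])
    return totals


bad_index = index_buggy(read_raw(RAW_LOG))   # {'alice': 7, 'bob': 5}
good_index = index_fixed(read_raw(RAW_LOG))  # {'alice': 17, 'bob': 5}
```

Because the raw data was saved rather than the derived index, correcting the mistake only requires deploying the fixed code and rerunning it over the same input.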

The Lambda Architecture has sometimes been criticized as being overly complex. Each pipeline requires its own code base, and the code bases must be kept in sync to ensure consistent, accurate results when queries touch both pipelines.

What Is the Difference Between Lambda Architecture and Kappa Architecture?

The Kappa Architecture is another design pattern that you may come across when exploring the Lambda Architecture. It is similar to the Lambda Architecture but has no separate set of technologies for the batch pipeline. Instead, all data is routed through a single stream processing pipeline. All data is stored in a message bus (like Apache Kafka), and when reindexing is required, the data is re-read from that source.

This approach is simpler in that it requires only one code base, but organizations with historical data in traditional batch systems must decide whether the transition to a streaming-only environment is worth the overhead of the initial change of platforms. Also, message buses are less efficient at holding extremely large time windows of data than data platforms built to store large data sets cost-effectively. This means you cannot always store your entire data history in a Kappa Architecture. However, technological innovations are breaking down this limitation so that much larger data sets can be stored in message buses as on-demand streams, which enables the Kappa Architecture to be more universally adopted. In addition, some stream processing technologies (like Hazelcast Jet) support batch processing paradigms as well, so you can use large-scale data repositories as a source alongside a streaming repository. This lets you process extremely large data sets in a cost-effective way while also gaining the simplicity of using only one processing engine.
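The replay-based reindexing that the Kappa Architecture relies on can be sketched as below. `RetainedLog` is a hypothetical in-memory stand-in for a message bus that keeps its full history. It does not represent the API of Apache Kafka or any other product, and the event shape is likewise an assumption.

```python
class RetainedLog:
    """Hypothetical stand-in for a message bus that retains every event."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def read_from(self, offset=0):
        """Yield retained events starting at the given offset."""
        yield from self._events[offset:]


def process(view, event):
    """The single code path used for both live events and replays."""
    key, value = event
    view[key] = view.get(key, 0) + value
    return view


log = RetainedLog()

# Live processing: each event is stored in the log and indexed as it arrives.
live_view = {}
for event in [("clicks", 1), ("views", 3), ("clicks", 2)]:
    log.append(event)
    process(live_view, event)

# Reindexing: replay the retained log from the start through the same code.
replayed_view = {}
for event in log.read_from(0):
    process(replayed_view, event)
```

The same `process` function serves both the live path and the replay, which is the single-code-base property described above. The sketch also makes the storage trade-off visible: the replay can only go back as far as the log retains data.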