Real-Time Stream Processing

Real-time stream processing is the practice of acting on data as soon as it is generated or published.

Historically, real-time processing simply meant data was “processed as frequently as necessary for a particular use case.” But now that stream processing technologies and frameworks have become ubiquitous, real-time stream processing means what it says: processing times can be measured in microseconds (millionths of a second) rather than in hours or days.

Real-Time vs. Streaming

The term “real-time” has a long history in the data world, which can cause some confusion. Real-time data typically refers to data that is available without delay from a source system or process for some follow-up action. For example, day traders may require real-time stock ticker data on which they run algorithms (or processes) to trigger a buy, no-buy, or sell action.

Real-time analytics can refer either to the immediate analysis of data at the edge of the network as it is generated, or to analyses that return results immediately. The stock ticker example above could serve both purposes. The day trader wants to analyze the data in real time as it is generated. Their algorithms should also return results in real time, fast enough for the buy/no-buy/sell decision to be executed at a profit or at the lowest possible loss.

Streaming data is data that is continuously generated and delivered rather than processed in batches or micro-batches. It is often called “event data,” since each data point describes something that occurred at a given time. Again, stock ticker data is a good example. If day traders collect and process a batch of data only every 15 minutes, or only when some other interval elapses or some threshold is crossed, they may miss many opportunities to buy and sell for a profit.
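The cost of batching can be made concrete with a small sketch. The code below is illustrative only: the tick shape `(timestamp_seconds, price)`, the function names `stream_alerts` and `batch_alerts`, and the price threshold are all assumptions made for this example, not part of any particular product. It compares when a price signal is acted on if each tick is handled as it arrives versus when ticks are buffered and examined only at the end of each interval.

```python
from typing import Iterable, Iterator, List, Tuple

# Hypothetical event shape: (timestamp in seconds, price).
Tick = Tuple[int, float]
# (event_time, reaction_time, price)
Alert = Tuple[int, int, float]


def stream_alerts(ticks: Iterable[Tick], threshold: float) -> Iterator[Alert]:
    """Per-event processing: react at the moment the tick arrives."""
    for ts, price in ticks:
        if price >= threshold:
            yield (ts, ts, price)


def _flush(buffer: List[Tick], threshold: float, window_end: int) -> Iterator[Alert]:
    """Examine a closed batch; every alert is raised at the window end."""
    for ts, price in buffer:
        if price >= threshold:
            yield (ts, window_end, price)


def batch_alerts(
    ticks: Iterable[Tick], threshold: float, interval: int
) -> Iterator[Alert]:
    """Batch processing: buffer ticks and examine them when the interval closes."""
    buffer: List[Tick] = []
    window_end = None
    for ts, price in ticks:
        if window_end is None:
            # First tick fixes the end of the first window.
            window_end = (ts // interval + 1) * interval
        while ts >= window_end:
            # The current window has closed: process what was buffered.
            yield from _flush(buffer, threshold, window_end)
            buffer = []
            window_end += interval
        buffer.append((ts, price))
    if window_end is not None:
        # Process the final, still-open window at its scheduled end.
        yield from _flush(buffer, threshold, window_end)


if __name__ == "__main__":
    ticks = [(0, 100.0), (60, 101.5), (130, 99.0), (905, 102.0)]
    for name, alerts in (
        ("stream", stream_alerts(ticks, 101.0)),
        ("batch", batch_alerts(ticks, 101.0, 900)),  # 900 s = 15 minutes
    ):
        for event_time, reaction_time, price in alerts:
            print(name, price, "delay:", reaction_time - event_time, "s")
```

With a 15-minute interval, the batch version in this toy data reacts to the tick at second 60 only at second 900, while the per-event version reacts at second 60.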

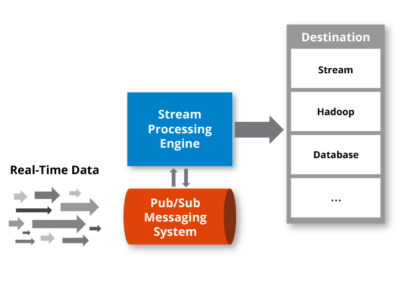

The terms “real-time” and “stream” converge in “real-time stream processing” to describe streams of real-time data that are gathered and processed as they are generated. A real-time stream processing data pipeline may contain multiple non-trivial processes. For example, real-time streaming data could be augmented, run through multiple algorithms, and aggregated with other data points, all in a single pipeline.
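As a rough illustration of such a pipeline, the sketch below chains three stages with Python generators so that each event passes through every stage before the next event is read. Everything named here is hypothetical and chosen only for the example: the `SECTOR_BY_SYMBOL` lookup table, the event fields `symbol`, `price`, and `volume`, and the two toy algorithms. A production stream processing engine would distribute these stages across machines, which this single-process sketch does not attempt.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Iterable, Iterator

Event = Dict[str, Any]

# Hypothetical reference data used to augment each event.
SECTOR_BY_SYMBOL = {"ACME": "industrial", "INIT": "tech"}


def enrich(events: Iterable[Event]) -> Iterator[Event]:
    """Stage 1: augment each event with a sector looked up by symbol."""
    for event in events:
        sector = SECTOR_BY_SYMBOL.get(event["symbol"], "unknown")
        yield {**event, "sector": sector}


def score(
    events: Iterable[Event], algorithms: Dict[str, Callable[[Event], bool]]
) -> Iterator[Event]:
    """Stage 2: run every algorithm against each event."""
    for event in events:
        signals = {name: fn(event) for name, fn in algorithms.items()}
        yield {**event, "signals": signals}


def running_average(events: Iterable[Event]) -> Iterator[Event]:
    """Stage 3: aggregate with earlier data points (per-sector mean price)."""
    totals = defaultdict(lambda: [0.0, 0])  # sector -> [price sum, count]
    for event in events:
        entry = totals[event["sector"]]
        entry[0] += event["price"]
        entry[1] += 1
        yield {**event, "sector_avg": entry[0] / entry[1]}


def pipeline(events: Iterable[Event]) -> Iterator[Event]:
    """Chain the stages; generators pull one event at a time through all of them."""
    algorithms = {
        "above_100": lambda e: e["price"] > 100,
        "round_lot": lambda e: e["volume"] % 100 == 0,
    }
    return running_average(score(enrich(events), algorithms))


if __name__ == "__main__":
    sample = [
        {"symbol": "ACME", "price": 99.0, "volume": 200},
        {"symbol": "ACME", "price": 103.0, "volume": 150},
    ]
    for result in pipeline(sample):
        print(result)
```

Because the stages are lazy generators, no stage waits for a full batch: the first result is available as soon as the first event has been read.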

Real-Time Data Streaming Tools and Technologies

Traditional data tools were built around disk-based processing and batch data pipelines, making them ill-suited to the real-time streaming use cases described above. A variety of real-time data streaming tools and technologies have entered the market in the last few years, and they share some common traits.

- In-memory processing: RAM is critical to stream processing. Disk-based technologies simply aren’t fast enough to process streams in real time, even in massively parallel architectures where the resources of many computers are joined together. The falling price of RAM has made in-memory computing platforms the de facto standard for building applications that support real-time data streaming.

- Open source: The open source community has been responsible for laying the groundwork for many real-time data streaming tools and technologies. Technology providers will often build features and components to make real-time stream processing technologies enterprise-ready, but there is quite often an open source element underneath.

- Cloud: Many of the applications that generate data for real-time stream processing are cloud-based. And many businesses are shifting their IT budgets to the cloud. Because of this convergence, real-time data streaming technologies are often cloud-native.