What Is Digital Transformation?

Digital transformation is the process of upgrading and extending an organization’s business and IT practices to get more value from data. That extra value can manifest as faster operations, new revenue opportunities, reduced cost, reduced risk, or some combination of these, and ultimately as greater competitive advantage. It is an umbrella term for the many activities that rely on the newer data-centric technologies that have emerged in the last decade or so, a period of unusually rapid innovation in that area.

New technologies are a critical part of a digital transformation initiative, whether they supplement existing legacy systems, replace them, or automate previously manual processes. In many cases, digital transformation also includes identifying and exposing data sources that the organization previously left untapped.

While digital transformation is largely based on technology upgrades and modernization, it should be viewed as a business strategy first, with the business objectives identified up front. It requires a well-thought-out plan for overhauling operating procedures, not simply incremental changes that provide quick but minor gains. For example, a business might set ambitious goals such as maximizing upsell opportunities, reducing customer churn, and optimizing flows in its supply chain. After diligent investigation, it might conclude that machine learning and artificial intelligence (ML/AI) technologies offer the most effective and efficient approach. This type of initiative requires a plan that, at a minimum, identifies the available resources, the gaps and the course of action to fill them, and the process to execute and maintain the initiative.

Examples of Digital Transformation Approaches

There are many approaches to a digital transformation strategy, some of which are described below. These approaches are not mutually exclusive; enterprises can combine any of them as part of their overall strategy.

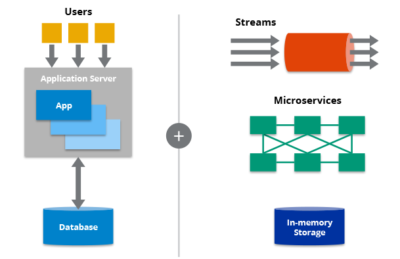

Architectural modernization. This approach adds newer technologies to complement, or even supplant, existing technologies that are unable to keep up with growing workloads and other business changes. NoSQL databases, distributed in-memory systems, and stream processing engines are examples of enabling technologies in this approach.

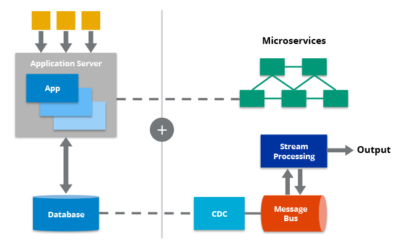

Application modernization. Related to architectural modernization, this approach entails extending or overhauling existing applications to add new business functionality as well as to extend technical functionality. For example, refactoring a monolithic application into a microservices architecture can result in a higher level of modularity that simplifies the addition of new capabilities. This approach also typically involves newer technologies to support changes in the applications, especially streaming and stream processing technologies that enable an event-driven architecture. In addition, using change data capture (CDC) tools on existing application and database infrastructures is an emerging way to modernize applications.
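To make the CDC and event-driven ideas above more concrete, here is a minimal sketch in Python. It assumes a simplified setting that is not from the original text: a table is represented as a dictionary keyed by primary key, changes are found by diffing two snapshots, and events are delivered through a small in-process bus. The names `capture_changes` and `EventBus` are hypothetical, and production CDC tools typically read the database's transaction log rather than comparing snapshots.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional

Row = Dict[str, object]
Event = Dict[str, object]


def capture_changes(before: Dict[int, Row], after: Dict[int, Row]) -> List[Event]:
    """Diff two snapshots of a table (keyed by primary key) into change events."""
    events: List[Event] = []
    # Keys present only in the newer snapshot are inserts.
    for key in sorted(after.keys() - before.keys()):
        events.append({"op": "insert", "key": key, "row": after[key]})
    # Keys present in both snapshots with different contents are updates.
    for key in sorted(before.keys() & after.keys()):
        if before[key] != after[key]:
            events.append({"op": "update", "key": key, "row": after[key]})
    # Keys present only in the older snapshot are deletes.
    for key in sorted(before.keys() - after.keys()):
        row: Optional[Row] = None
        events.append({"op": "delete", "key": key, "row": row})
    return events


class EventBus:
    """Hypothetical in-process stand-in for a streaming platform."""

    def __init__(self) -> None:
        self._handlers: Dict[object, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, op: str, handler: Callable[[Event], None]) -> None:
        self._handlers[op].append(handler)

    def publish(self, event: Event) -> None:
        # Deliver the event to every handler registered for its operation type.
        for handler in self._handlers[event["op"]]:
            handler(event)


# Illustrative data only: a small customer table before and after some changes.
before = {1: {"name": "Ada", "tier": "basic"}, 2: {"name": "Bo", "tier": "basic"}}
after = {1: {"name": "Ada", "tier": "gold"}, 3: {"name": "Cy", "tier": "basic"}}

bus = EventBus()
upgrades: List[object] = []
# A new capability reacts to updates without touching the original application.
bus.subscribe("update", lambda event: upgrades.append(event["key"]))

events = capture_changes(before, after)
for event in events:
    bus.publish(event)

print([e["op"] for e in events])  # ['insert', 'update', 'delete']
print(upgrades)                   # [1]
```

The point of the design is that the subscriber is added without changing the legacy application that writes the table; it only consumes the change events.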

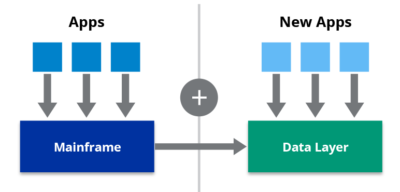

Mainframe optimization. Despite ongoing innovations in inexpensive hardware, mainframe computers are still widely used. For many mainframe users, it doesn’t make sense to replace proven and functional hardware, but they still look for more cost-effective ways to use it. Mainframe optimization entails adding new technologies to the infrastructure so that lower-priority workloads move onto inexpensive hardware while the most important workloads stay on the mainframe. Because mainframe pricing is typically based on MIPS or MSU consumption, reducing the load on the mainframe reduces costs.
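The partitioning described above can be sketched as a simple split by priority. This is only an illustration under stated assumptions: the `Workload` type, the `split_workloads` helper, the priority scale, and every MSU figure below are hypothetical and not taken from any real system or price list.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Workload:
    name: str
    priority: int  # assumption: 1 is the most important
    msu: int       # assumption: made-up average MSU consumption


def split_workloads(
    workloads: List[Workload], keep_up_to_priority: int
) -> Tuple[List[Workload], List[Workload]]:
    """Return (kept on the mainframe, candidates to offload)."""
    keep = [w for w in workloads if w.priority <= keep_up_to_priority]
    offload = [w for w in workloads if w.priority > keep_up_to_priority]
    return keep, offload


# Illustrative workloads only.
workloads = [
    Workload("payment processing", priority=1, msu=400),
    Workload("nightly batch reports", priority=3, msu=150),
    Workload("ad hoc queries", priority=3, msu=50),
]

keep, offload = split_workloads(workloads, keep_up_to_priority=1)
offloaded_msu = sum(w.msu for w in offload)
total_msu = sum(w.msu for w in workloads)

print([w.name for w in offload])  # ['nightly batch reports', 'ad hoc queries']
print(f"{offloaded_msu} of {total_msu} MSU are candidates for offloading")
```

In practice the decision would also weigh data locality, latency, and migration effort, which this sketch leaves out.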

Automation via machine learning and artificial intelligence. Analyzing large volumes of data with machine learning techniques to identify patterns and trends has proven an effective way to reduce costs through automation. This approach not only allows much greater scale, but also helps uncover hidden insights that are difficult for humans to find alone. Enterprises are adding ML/AI disciplines to their businesses, which requires a deep investigation into the right technologies to support large-scale data storage, ongoing analysis and experimentation, and high-speed, scalable deployment.

Cloud migration. As enterprises look to gain the agility to respond faster to changing business conditions and implement new strategies, they turn to greater use of cloud technologies as part of their IT strategy. From a technology standpoint, a major inhibitor of business agility is the delay in procuring and provisioning hardware resources. Cloud implementations in both private and public environments help application development teams get their resources much more quickly, and thus let them progress with their work more seamlessly. An investment in cloud-native technologies is essential to achieving that objective. Companies like IBM are investing heavily in cloud-native infrastructures to help businesses migrate to cloud environments and gain greater agility.