You Can’t Do Real-Time with Your Database – It Does Not Compute

It does not make sense to drive your real-time operations with your database. It does not compute. By that, I mean your database lacks powerful computational capabilities (see my earlier blog on how databases are really just about reads and writes). As a result, it cannot do much with your real-time data other than store it and retrieve it when asked.

A 2022 report on the data-driven enterprise by McKinsey & Company identifies the real-time processing and delivery of data as a key characteristic of companies that gain the most value from data-focused initiatives. It also calls out the “high computational demands” of real-time processing as one inhibitor to implementing real-time use cases. To address those demands, businesses must choose technologies that provide the computational power to enable real-time advantages. These include not only hardware and cloud instances, but also newer classes of software designed for real-time applications.

Near Real-Time? Good Enough Real-Time?

You might argue that your database already gives you real-time capabilities, especially for end-user analytics. You take your real-time data feeds, transform them into an optimized format for end-user querying, and then write them to a database. When end users run queries, they get nearly instantaneous results, and you consider this sufficient for your real-time operations. That’s good enough, right?

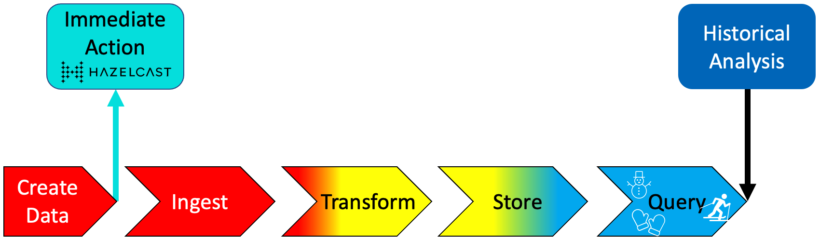

It’s good, but not good enough for a true real-time enterprise. The limitation is that the focus is on getting queries to run as quickly as possible. Database vendors tell you that’s all you need today, which is the same thing they told you in the past. The problem is that you still depend on writing to the database first, and slow human intervention remains in the critical path for identifying actionable insights. This store-then-analyze paradigm precludes real-time use cases that instantly drive the “action” in “actionable insights.” Databases simply don’t give you a framework for writing applications that handle the computationally intensive work inherent in real-time data processing systems. Therefore, you miss the advantage of immediately responding to business activities and events.

Take Immediate Action

Real-time enterprises place heavy emphasis on recognizing time-sensitive opportunities and responding to them right now. They recognize that data has much more value “in the moment.” Delays result in lost value, especially when storing data that captures specific business events for later analysis. But to be clear, these enterprises also recognize the collective value of historical data, so they don’t see historical data analysis as a bad thing, but rather, as one component of their data strategy. They gain their significant advantage by responding to data when it’s most valuable, well before it gets relegated to the “historical” bin.

Think about real-time use cases that require immediate action. Fraud detection is a popular example that most of us understand because we know we want to take action, i.e., stop the fraud, as the transaction is taking place. We’d rather flag a transaction as potentially fraudulent now than figure it out later, or potentially miss it altogether. Fraud detection is a specific example of the broader practice of anomaly detection, where an outlier event is worthy of scrutiny because it may represent some undesirable activity or status that needs immediate investigation. Patient monitoring, predictive maintenance, and quality assurance testing are just a few examples that leverage anomaly detection through data analysis.
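To make the anomaly detection idea concrete, here is a minimal sketch that scores each event as it arrives instead of after it has been stored. It assumes a simple z-score rule over a running mean and standard deviation, which is only one of many possible techniques. The names `RunningStats` and `flag_anomalies`, and the `threshold` and `warmup` values, are hypothetical and chosen purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm: mean and variance without storing history."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def stddev(self) -> float:
        # Sample standard deviation; undefined for fewer than two values.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


def flag_anomalies(amounts, threshold=3.0, warmup=5):
    """Yield (amount, is_anomaly) for each event as it arrives.

    threshold and warmup are illustrative values, not tuned recommendations.
    """
    stats = RunningStats()
    for amount in amounts:
        is_anomaly = False
        if stats.count >= warmup:
            sd = stats.stddev()
            is_anomaly = sd > 0 and abs(amount - stats.mean) / sd > threshold
        # Learn only from events that looked normal, so a single outlier
        # does not widen the baseline.
        if not is_anomaly:
            stats.update(amount)
        yield amount, is_anomaly


if __name__ == "__main__":
    for amount, suspicious in flag_anomalies([20, 22, 19, 21, 20, 23, 950, 21]):
        print(amount, "FLAG" if suspicious else "ok")
```

The point of the sketch is the shape of the computation: the decision is made per event, using only a small amount of running state, so nothing has to be written to a database and queried later before the outlier is flagged.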

Another popular example of a real-time use case is real-time recommendations, where you make highly relevant recommendations to your customers as they are interacting with your business, not after they’ve left. Up-sell and cross-sell opportunities are most valuable to customers while they are shopping, and they are most effective when the promotions relate to items the shopper is already prepared to purchase. You cannot accurately predict the night before what customers will put into their shopping carts, so you need real-time processing.

Also, many real-time applications can leverage machine learning algorithms, and if you are asking your computers to do the fast thinking for you, wouldn’t it make sense for them to do the thinking now versus later? By sending real-time data through machine learning algorithms, you can get instant feedback on hidden insights in your data, such as unusual characteristics of a given transaction, customer interactions that suggest future churn, or subtle signs of impending equipment failure. These are just examples of insights that you want to uncover as soon as possible, so you can get alerts or take automated action before you miss the opportunity (or fail to prevent the failure).

Stream Processing on Apache Kafka Enables Real-Time

If you are using Apache Kafka, Apache Pulsar, Amazon Kinesis, or any message bus for storing event data, then you likely are taking advantage of real-time data. But if you are merely turning that real-time data (i.e., “data in motion”) into data at rest by first storing it in a database, then you are adding an unnecessary delay while also relying on a batch-oriented paradigm to process your data. Specifically, your database applications will have to continually and inefficiently poll your database to try to uncover the real-time actionable insights buried in your data. Anyone who has tried this type of continual polling knows that it is a very clunky way to analyze data, and it is not truly real-time.
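The delay that polling adds is easy to quantify with a little arithmetic. The sketch below is a simplified model, not a benchmark: it assumes polls run at fixed multiples of a hypothetical `poll_interval`, that writes are instantaneous, and that an event written exactly at a poll time is picked up by that poll. The function name `polling_delays` is made up for this illustration.

```python
import math


def polling_delays(event_times, poll_interval):
    """Seconds each event sits in the database before the next poll reads it.

    Assumes polls run at t = 0, poll_interval, 2 * poll_interval, ...
    """
    delays = []
    for t in event_times:
        next_poll = math.ceil(t / poll_interval) * poll_interval
        delays.append(next_poll - t)
    return delays


if __name__ == "__main__":
    # Four events polled every 5 seconds.
    print(polling_delays([0.5, 1.0, 7.25, 12.0], 5))
```

Under this model, events that arrive at random moments wait about half the polling interval on average before anything can react to them, and shortening the interval to reduce that wait means querying the database more often.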

The alternative is to leverage a stream processing engine to read event data as it is created and then immediately identify the trends, patterns, or anomalies that are otherwise difficult to uncover in databases. Stream processing engines are designed to work with data as a flow, so you can write applications that take immediate action on data. Don’t rely on your database as the foundation of a real-time strategy. Instead, explore the technologies that were built explicitly for getting the most out of real-time data.
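As a rough illustration of what “working with data as a flow” means, the following sketch processes events one at a time and keeps a sliding window of recent activity per key. It is plain Python, not the API of any particular stream processing engine, and the `events` iterable is a stand-in for whatever your message bus consumer delivers. The function name `burst_alerts` and its parameters are hypothetical.

```python
from collections import defaultdict, deque


def burst_alerts(events, window_seconds=60, max_events=3):
    """Yield (timestamp, key) whenever a key exceeds max_events in the window.

    events: iterable of (timestamp, key) pairs, assumed to be in timestamp order.
    """
    recent = defaultdict(deque)  # key -> timestamps still inside the window
    for timestamp, key in events:
        window = recent[key]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window[0] <= timestamp - window_seconds:
            window.popleft()
        if len(window) > max_events:
            yield timestamp, key


if __name__ == "__main__":
    card_events = [(0, "A"), (10, "A"), (15, "B"), (20, "A"),
                   (30, "A"), (100, "A"), (110, "B")]
    for ts, card in burst_alerts(card_events):
        print(f"t={ts}: unusual burst of activity on card {card}")
```

Because the alert is produced inside the loop that consumes the events, the response happens when the triggering event arrives rather than when a later query happens to find it. A production engine adds what this sketch leaves out, such as distribution across nodes, fault tolerance, and handling of out-of-order events.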

If leveraging stream processing to handle your real-time data sounds like a good idea, take a look at the Hazelcast Platform, a real-time stream processing platform that offers the simplicity and performance (among other advantages) you seek to gain a true real-time advantage. “How simple” and “how fast,” you ask? With our free offerings, you can see for yourself. Check out our software, our cloud-managed service, or even just contact us so we can discuss the implementation challenges you face and how we can help you overcome them.