What Is Streaming ETL?

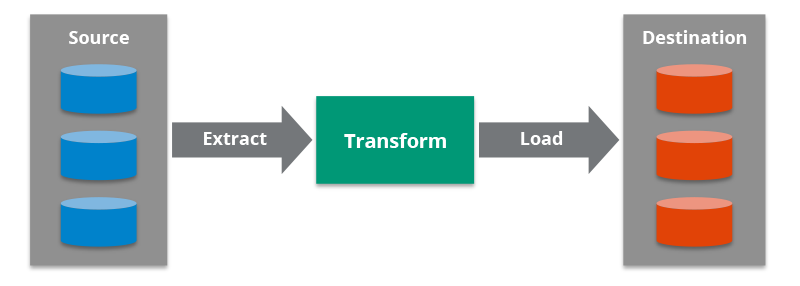

Streaming ETL is the processing and movement of real-time data from one place to another. ETL is short for the database functions extract, transform, and load.

How Does Traditional ETL Work?

First, a little background on traditional ETL:

Extract

Extract refers to collecting data from some data source. These data sources could be legacy business systems, traditional databases, IoT devices, sensors, APIs, and so on.

Transform

Transform refers to any processes performed on that data. Once data has been extracted from the data source, the transform process converts the data into a format that can be used by different applications. During this process, the data may be cleansed and prepared for use in your business systems or processes.

Load

Load refers to sending the processed data to a destination, such as a database. In streaming ETL, this entire process occurs against streaming data in real time in a stream processing platform.

In some streaming technology circles, the originating system is called a source, and the destination is called a sink.
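The three steps can be sketched as a chain of Python generators, where each record flows from source to sink as soon as it is available. This is only an illustrative sketch, not any particular platform's API: the function names, the `device_id,celsius` record format, and the Fahrenheit conversion are all assumptions made for the example.

```python
from typing import Dict, Iterable, Iterator, List


def extract(readings: Iterable[str]) -> Iterator[str]:
    """Source: yield raw records one at a time as they arrive."""
    for raw in readings:
        yield raw


def transform(records: Iterable[str]) -> Iterator[Dict[str, object]]:
    """Cleanse each raw 'device_id,celsius' record and convert it to a dict."""
    for raw in records:
        parts = [p.strip() for p in raw.split(",")]
        if len(parts) != 2:
            continue  # drop malformed records
        device_id, value = parts
        try:
            celsius = float(value)
        except ValueError:
            continue  # drop records whose reading is not numeric
        yield {"device_id": device_id, "fahrenheit": celsius * 9 / 5 + 32}


def load(records: Iterable[Dict[str, object]], sink: List[Dict[str, object]]) -> None:
    """Sink: append each processed record to the destination as it arrives."""
    for record in records:
        sink.append(record)


def run_pipeline(raw_records: Iterable[str], sink: List[Dict[str, object]]) -> None:
    """Wire source -> transform -> sink; records are handled one by one."""
    load(transform(extract(raw_records)), sink)
```

Because every stage is lazy, `run_pipeline` never needs the full input in memory. In a real deployment the list-based sink would be replaced by a database or message queue writer.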

Batch ETL vs. Streaming ETL

In traditional data environments, ETL software extracts batches of data from a source system, usually on a schedule, transforms that data, and then loads it into a repository such as a data warehouse or database. This is the “batch ETL” model. However, many modern business environments cannot wait hours or days for applications to handle batches of data. They must respond to new data in real time as the data is generated.
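The difference between the two models can be illustrated with a small sketch. The same transform is applied either to a whole collected batch or to each record as it is produced. The function names and the dollars-to-cents transform are hypothetical and chosen only for illustration.

```python
from typing import Callable, Iterable, List


def to_cents(amount_dollars: float) -> int:
    """Example transform: convert a dollar amount to whole cents."""
    return round(amount_dollars * 100)


def batch_etl(collected: List[float], warehouse: List[int]) -> None:
    """Batch model: run on a schedule over everything collected so far."""
    warehouse.extend(to_cents(amount) for amount in collected)


def streaming_etl(source: Iterable[float], on_result: Callable[[int], None]) -> None:
    """Streaming model: transform and deliver each record as it is generated."""
    for amount in source:
        on_result(to_cents(amount))
```

With `batch_etl`, no result exists until the scheduled run processes the accumulated list. With `streaming_etl`, each result is delivered before the next record is even read from the source.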

These real-time applications require streaming ETL. Fraud detection, Internet of Things, edge computing, streaming analytics, and real-time payment processing are examples of applications that rely on streaming ETL.

One example of the use of streaming ETL is in a “360-degree customer view” use case, especially one that enhances real-time interactions between the business and the customer. In such a scenario, a customer might be using the business's services (such as a cell phone or a streaming video service) and also searching the business's website for support. All these interactions are streamed to the ETL engine, which processes and transforms them into an analyzable format. Often, the ETL processing helps reveal insights about the customer that would not be obvious from the raw interaction data alone. For example, the interactions might suggest that the customer is comparison shopping and might be ready to churn. Should the customer call in for help, the call agent has immediate access to up-to-date information on what the customer was trying to do. The agent can then not only provide effective assistance but also offer additional up-sell/cross-sell products and services that can benefit the customer.

A credit card fraud detection application is another simple example of streaming ETL in action. When you swipe your credit card, the transaction data is sent to, or extracted by, the fraud detection application. In a transform step, the application joins the transaction data with additional data about you and then applies fraud detection algorithms. Relevant information includes the time of your most recent transaction, whether you've recently purchased from this store, and how your purchase compares to others with similar buying habits. Finally, the application loads the resulting approval or denial to the credit card reader. Banks and credit card issuers reduce their fraud losses by hundreds of millions of dollars each year by leveraging streaming ETL to perform this process in real time.
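The fraud detection flow might be sketched as follows. This is a toy illustration under stated assumptions: the `Transaction` and `CardProfile` types, the thresholds, and the two simple rules are hypothetical stand-ins for the far more sophisticated algorithms a real issuer would use.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, Iterator, Tuple


@dataclass
class Transaction:
    card_id: str
    store_id: str
    amount: float
    timestamp: float  # seconds since the epoch


@dataclass
class CardProfile:
    last_transaction_time: float   # time of the most recent transaction
    known_stores: FrozenSet[str]   # stores the cardholder recently bought from
    typical_amount: float          # typical purchase size for similar buyers


def decide(
    txn: Transaction,
    profiles: Dict[str, CardProfile],
    min_gap_seconds: float = 5.0,      # assumed threshold
    amount_multiplier: float = 10.0,   # assumed threshold
) -> str:
    """Transform step: join the transaction with its profile, then apply toy rules."""
    profile = profiles.get(txn.card_id)
    if profile is None:
        return "DENY"  # assumption: no reference data means no approval
    too_soon = txn.timestamp - profile.last_transaction_time < min_gap_seconds
    unfamiliar_and_large = (
        txn.store_id not in profile.known_stores
        and txn.amount > amount_multiplier * profile.typical_amount
    )
    return "DENY" if too_soon or unfamiliar_and_large else "APPROVE"


def score_stream(
    transactions: Iterable[Transaction], profiles: Dict[str, CardProfile]
) -> Iterator[Tuple[Transaction, str]]:
    """Yield a decision for each transaction as it arrives (the load step would
    send this decision back to the card reader)."""
    for txn in transactions:
        yield txn, decide(txn, profiles)
```

The join here is a dictionary lookup against in-memory profile data, which loosely mirrors why low-latency access to reference data matters when a decision must be returned while the customer waits.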

Real-time Streaming ETL Architecture

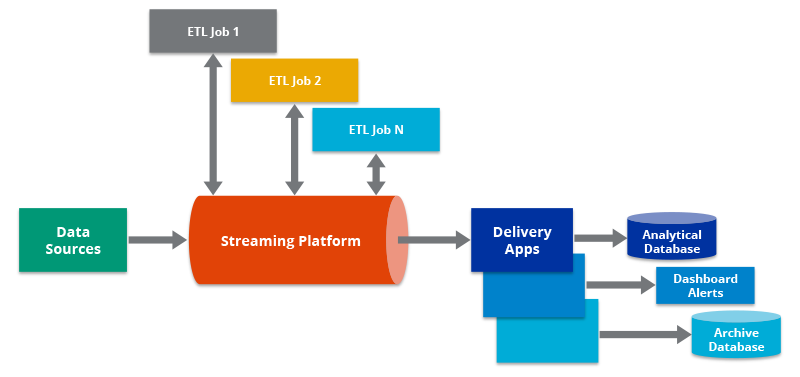

Streaming ETL may be referred to as real-time ETL. Conceptually, a streaming ETL architecture (or real-time ETL architecture) is fundamentally the same as a traditional ETL architecture. At the start, there is a data source that feeds into a system that processes and transforms data from that source, and then the output is delivered to a destination. When displayed in diagram form, the architecture often has the source to the left, the ETL engine in the middle, and the destination(s) on the right.

In a traditional ETL architecture, the data source is a system or application. In the middle, you have a purpose-built ETL tool such as Informatica PowerCenter or Talend that is responsible for extracting data from the source and processing it before passing it off to a destination, usually a data warehouse or some other large data repository. The repository then becomes the center of your data universe, feeding pieces of data to individual applications as necessary, often on a schedule. (Think about nightly sales reports that show up in your inbox in the morning.)

With real-time streaming ETL architectures, you still have the sources on the left. The sources feed data to a stream processing platform. This platform serves as the backbone not only for streaming ETL applications but also for many other types of streaming applications and processes. The streaming ETL application may extract data from the source, or the source may publish data directly to the streaming ETL application. When a streaming ETL process completes, it may pass data to the right to a destination (potentially a data warehouse), or it may send a result back to the original source on the left. In addition, it can concurrently deliver data to other applications and repositories.
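A minimal in-process sketch of this flow is shown below. A source publishes an event, the application transforms it, and the result is delivered to every registered destination and optionally back to the source. The `StreamingEtlApp` class and its methods are hypothetical and exist only to illustrate the shape of the architecture. A real stream processing platform would add distribution, buffering, and fault tolerance.

```python
from typing import Callable, Dict, List, Optional

Event = Dict[str, object]


class StreamingEtlApp:
    """Toy streaming ETL application with fan-out to several destinations."""

    def __init__(self, transform: Callable[[Event], Event]) -> None:
        self._transform = transform
        self._destinations: List[Callable[[Event], None]] = []

    def add_destination(self, deliver: Callable[[Event], None]) -> None:
        """Register a destination, e.g. a warehouse writer or a dashboard feed."""
        self._destinations.append(deliver)

    def publish(
        self, event: Event, reply: Optional[Callable[[Event], None]] = None
    ) -> None:
        """Called by a source: transform the event, then deliver the result."""
        result = self._transform(event)
        for deliver in self._destinations:
            deliver(result)   # load to each destination on the right
        if reply is not None:
            reply(result)     # optionally send the result back to the source
```

Destinations are plain callables in this sketch, so a list's `append` method can stand in for a data warehouse writer during experimentation.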

The data warehouse is still a critical piece of technology, but the stream processing platform and streaming ETL provide the opportunity to rethink the flow of data for new (and many existing) applications. Streaming ETL data pipelines may run alongside traditional batch ETL pipelines, depending on the use case.

Event-Driven ETL

“Event-driven ETL” is synonymous with “streaming ETL.” Events and streams are very closely related, in that a stream typically refers to a series of event data. The data processed in an event-driven ETL pipeline could be sensor readings, customer interaction metadata, or server/application log data. “Event-driven ETL” is often used in the context of event-driven architectures.

Streaming ETL Tools

A number of companies have built product suites around ETL over the decades. Most of these tools and suites were built for the batch world. While technology providers are trying to catch their products up to the world of streaming data, most of the products simply lack the capabilities necessary for streaming ETL.

Newer technologies such as Hazelcast build on in-memory computing platforms and the cloud to support streaming ETL. In-memory computing helps deliver the speed that modern streaming architectures require, and the cloud provides the agility to adapt quickly to changing business demands. Stream processing engines, which provide a framework for taking action on streaming data, are useful for streaming ETL. In this context, there are three key trends at play:

- IT budget is shifting to the cloud.

- The number of streaming data sources is continuously increasing.

- Users are demanding access to the most up-to-date data.

As these trends continue, we will likely see more use of streaming ETL tools in the coming years.