What is a Distributed Transaction?

A distributed transaction is a set of operations on data that is performed across two or more data repositories (especially databases). It is typically coordinated across separate nodes connected by a network, but may also span multiple databases on a single server.

There are two possible outcomes: 1) all operations complete successfully, or 2) none of the operations take effect because of a failure somewhere in the system. In the latter case, if some work was completed prior to the failure, that work is reversed so that no net change is made. This all-or-nothing behavior follows the “ACID” (atomicity-consistency-isolation-durability) principles of databases that ensure data integrity. ACID is most commonly associated with transactions on a single database server, but distributed transactions extend that guarantee across multiple databases.

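The “two-phase commit” (2PC) is the protocol most commonly used to carry out a distributed transaction. “XA transactions” are transactions that use XA, a specification from X/Open (now The Open Group) that defines the interface between a transaction manager and resource managers and relies on a two-phase commit.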

How Do Distributed Transactions Work?

Distributed transactions have the same processing completion requirements as regular database transactions, but they must be managed across multiple resources, making them more challenging for database developers to implement. The multiple resources add more points of failure, such as the separate software systems that run the resources (e.g., the database software), the extra hardware servers, and the network that connects them. This makes distributed transactions more susceptible to failures, which is why safeguards must be put in place to retain data integrity.

For a distributed transaction to occur, a transaction manager coordinates the resources (either multiple databases or multiple nodes of a single database). The transaction manager can be one of the data repositories that will be updated as part of the transaction, or it can be an independent resource that is responsible only for coordination. The transaction manager decides whether to commit a successful transaction or roll back an unsuccessful one, the latter of which leaves the data unchanged.

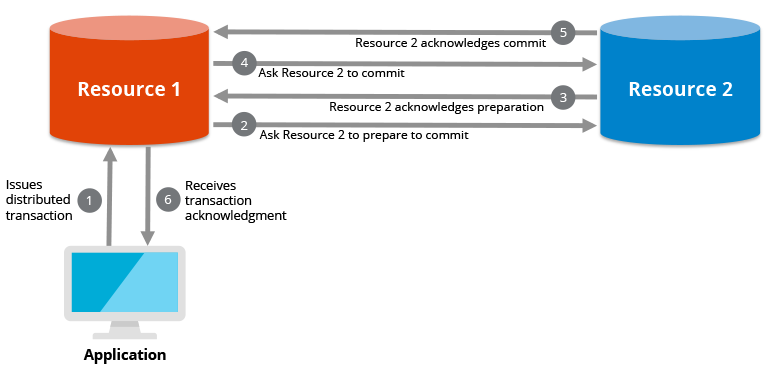

First, an application submits the distributed transaction to the transaction manager. The transaction manager then branches to each resource, which has its own “resource manager” to help it participate in distributed transactions. Distributed transactions are often done in two phases to safeguard against partial updates that might occur when a failure is encountered. The first phase is the “prepare-to-commit” phase, in which each resource acknowledges that it is able to commit. After all resources acknowledge, they are asked to run a final commit, and the transaction is then complete.
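The two phases described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a real XA implementation: the `ResourceManager` and `TransactionManager` classes, their method names, and the `can_prepare` flag are all hypothetical, and durable logging, timeouts, and networking are left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ResourceManager:
    """Hypothetical in-memory participant standing in for a real database."""
    name: str
    can_prepare: bool = True  # set to False to simulate a failed prepare
    committed: Dict[str, str] = field(default_factory=dict)
    staged: Optional[Dict[str, str]] = None  # changes held between the phases

    def prepare(self, changes: Dict[str, str]) -> bool:
        # Phase 1: stage the changes and vote on whether a commit is possible.
        if not self.can_prepare:
            return False
        self.staged = dict(changes)
        return True

    def commit(self) -> None:
        # Phase 2: make the staged changes permanent.
        self.committed.update(self.staged or {})
        self.staged = None

    def rollback(self) -> None:
        # Discard staged changes; already committed data is left untouched.
        self.staged = None


class TransactionManager:
    """Hypothetical coordinator that drives both phases."""

    def __init__(self, resources: List[ResourceManager]) -> None:
        self.resources = list(resources)

    def run(self, changes: Dict[str, Dict[str, str]]) -> bool:
        prepared: List[ResourceManager] = []
        for rm in self.resources:
            if rm.prepare(changes.get(rm.name, {})):
                prepared.append(rm)
            else:
                # A single "no" vote aborts the transaction everywhere.
                for p in prepared:
                    p.rollback()
                return False
        # Every resource acknowledged, so ask each one for the final commit.
        for rm in self.resources:
            rm.commit()
        return True


orders = ResourceManager("orders")
billing = ResourceManager("billing")
tm = TransactionManager([orders, billing])
committed = tm.run({"orders": {"order-1": "placed"}, "billing": {"order-1": "charged"}})
print(committed, orders.committed, billing.committed)
```

Here `run` returns `True` and both stores hold their changes. If either participant is created with `can_prepare=False`, `run` returns `False` and no `committed` dictionary is modified.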

We can examine a basic example of what happens when a failure occurs during a distributed transaction. Let’s say one or more of the resources become unavailable during the prepare-to-commit phase. When the request times out, the transaction manager tells each resource to discard its prepare-to-commit status, and all data remains in its original state. It is then up to the application to retry the transaction to make sure it gets completed. If, instead, a resource becomes unavailable during the commit phase, the situation is different. In the standard two-phase commit protocol, the decision to commit is final once every resource has acknowledged the prepare request, so resources that have already committed their portion are not rolled back. The transaction manager records the decision and keeps retrying the commit on the unavailable resource, which must complete its portion of the transaction when it recovers.
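The prepare-phase failure and the application-level retry can be illustrated with a small simulation. This is only a sketch under stated assumptions: `FlakyParticipant`, `Unavailable`, `attempt`, and `run_with_retry` are hypothetical names, and an exception stands in for a request that times out.

```python
class Unavailable(Exception):
    """Raised by a hypothetical participant that cannot be reached in time."""


class FlakyParticipant:
    def __init__(self, name, fail_first_n=0):
        self.name = name
        self.fail_remaining = fail_first_n  # number of prepare calls that fail
        self.value = "old"
        self.pending = None

    def prepare(self, new_value):
        if self.fail_remaining > 0:
            self.fail_remaining -= 1
            raise Unavailable(self.name)
        self.pending = new_value

    def abort(self):
        # Clear the prepare-to-commit state; the stored value is untouched.
        self.pending = None

    def commit(self):
        self.value, self.pending = self.pending, None


def attempt(participants, new_value):
    """Run one transaction attempt; return True on commit, False on abort."""
    try:
        for p in participants:
            p.prepare(new_value)
    except Unavailable:
        for p in participants:
            p.abort()
        return False
    for p in participants:
        p.commit()
    return True


def run_with_retry(participants, new_value, max_attempts=3):
    """Application-level retry; return the attempts used, or None on failure."""
    for n in range(1, max_attempts + 1):
        if attempt(participants, new_value):
            return n
    return None


a = FlakyParticipant("a")
b = FlakyParticipant("b", fail_first_n=1)  # unavailable for the first prepare
attempts_used = run_with_retry([a, b], "new")
print(attempts_used, a.value, b.value)
```

The first attempt aborts because `b` is unreachable, and `a` drops its pending change. The second attempt succeeds, so both participants end up holding the new value.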

Why Do You Need Distributed Transactions?

Distributed transactions are necessary when you need to quickly update related data that is spread across multiple databases. For example, if you have multiple systems that track customer information and you need to make a universal update (like updating the mailing address) across all records, a distributed transaction ensures that either every record gets updated or none does. If a failure occurs, the data is reset to its original state, and it is up to the originating application to resubmit the transaction.

Distributed Transactions for Streaming Data

Distributed transactions are especially critical today in data streaming environments because of the volume of incoming data. Even a short-term failure in one of the resources can represent a potentially large amount of lost data. Sophisticated stream processing engines support “exactly-once” processing in which a distributed transaction covers the reading of data from a data source, the processing, and the writing of data to a target destination (the “data sink”). The term “exactly-once” means that every data point is processed, with no loss and no duplication. (Contrast this with “at-most-once,” which allows data loss, and “at-least-once,” which allows duplication.) In an exactly-once streaming architecture, the repositories for the data source and the data sink must have capabilities to support the exactly-once guarantee. In other words, those repositories must provide functionality that lets the stream processing engine fully recover from failure. That functionality does not necessarily have to be a true transaction manager, as long as it delivers a similar end result.
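The difference between at-least-once and exactly-once results can be seen in a toy simulation. This is a hedged sketch rather than how any particular engine works: the `consume` and `run_with_one_crash` functions, the `state` dictionary, and the `atomic` flag are hypothetical, and a single dictionary update stands in for a transaction that saves the sink result and the source offset together.

```python
class Crash(Exception):
    """Simulated failure of the stream processing job."""


def consume(records, state, atomic, crash_at=None):
    """Add each record to state["total"], resuming from state["offset"].

    atomic=True  : the result and the offset are saved in one step.
    atomic=False : the result is saved first and the offset afterwards.
    crash_at     : index at which to simulate a crash (None means no crash).
    """
    for i in range(state["offset"], len(records)):
        if atomic:
            if i == crash_at:
                raise Crash()  # nothing for this record has been saved yet
            state.update(total=state["total"] + records[i], offset=i + 1)
        else:
            state["total"] += records[i]  # result reaches the sink
            if i == crash_at:
                raise Crash()  # offset not saved, so the record is replayed
            state["offset"] = i + 1


def run_with_one_crash(records, atomic, crash_at):
    """Run the job, let it crash once, restart it, and return the final total."""
    state = {"offset": 0, "total": 0}
    try:
        consume(records, state, atomic, crash_at)
    except Crash:
        consume(records, state, atomic)  # restart from the saved offset
    return state["total"]


records = [10, 20, 30]  # the correct total is 60
at_least_once = run_with_one_crash(records, atomic=False, crash_at=1)
exactly_once = run_with_one_crash(records, atomic=True, crash_at=1)
print(at_least_once, exactly_once)  # prints "80 60"
```

In the non-atomic run the record `20` is written to the sink twice because the crash happens before its offset is saved, which inflates the total to 80. When the result and the offset are saved together, the restart replays the record without double counting it.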

Hazelcast Jet is an example of a stream processing engine that enables exactly-once processing with sources and sinks that do not inherently have the capability to support distributed transactions. It does this by managing the entire state of each data point and re-reading, re-processing, and/or re-writing the data point if it encounters a failure. This built-in logic allows more types of data repositories (such as Apache Kafka and JMS) to be used as either sources or sinks in business-critical streaming applications.

When Distributed Transactions Are Not Needed

In some environments, distributed transactions are not necessary. When the speed of the transaction is not an issue, extra auditing activities are put in place instead to ensure data integrity. The transfer of money across banks is a good example. Each bank that participates in the money transfer tracks the status of the transaction, and when a failure is detected, the partial state is corrected. This process works well without distributed transactions because the transfer does not have to happen in (near) real time. Distributed transactions are typically critical in situations where the complete update must be done immediately.
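The auditing approach can be pictured as a periodic reconciliation pass over each bank's records. The sketch below is purely illustrative: the `reconcile` function and the ledger layout (a dictionary mapping a transfer ID to an amount) are assumptions, and the correction chosen here is to apply the missing credit, whereas a real process might refund the sender instead.

```python
def reconcile(debits, credits):
    """Find transfers debited at the sending bank but missing at the receiver.

    debits / credits: dicts mapping a transfer ID to an amount (hypothetical).
    The missing credits are applied and their IDs are returned in sorted order.
    """
    missing = sorted(tid for tid in debits if tid not in credits)
    for tid in missing:
        credits[tid] = debits[tid]  # correct the partial state
    return missing


sender_debits = {"t1": 100, "t2": 250}
receiver_credits = {"t1": 100}  # the credit for t2 was lost in a failure

fixed = reconcile(sender_debits, receiver_credits)
print(fixed, receiver_credits)
```

A second call to `reconcile` with the same ledgers returns an empty list, because the partial state has already been corrected.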