What Is Distributed Computing?

Distributed computing (or distributed processing) is the technique of linking together multiple computer servers over a network into a cluster, to share data and to coordinate processing power. Such a cluster is referred to as a “distributed system.” Distributed computing offers advantages in scalability (through a “scale-out architecture”), performance (via parallelism), resilience (via redundancy), and cost-effectiveness (through the use of low-cost, commodity hardware).

As data volumes have exploded and application performance demands have increased, distributed computing has become extremely common in database and application design. It is especially valuable for scaling: as data volumes grow, the extra load can be handled by simply adding more hardware to the system. Contrast this with traditional “big iron” environments consisting of powerful computer servers, in which load growth must be handled by upgrading or replacing the hardware.
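To make the scale-out idea concrete, here is a minimal Python sketch, not taken from any particular product, that spreads keys across nodes using simple hash-modulo partitioning (a technique assumed here for illustration). The node names and the `node_for` and `load_per_node` helpers are hypothetical. Adding nodes lowers the number of keys each node must handle, which is the sense in which extra hardware absorbs extra load.

```python
import hashlib
from collections import Counter


def node_for(key: str, nodes: list[str]) -> str:
    """Pick the node that owns `key` using hash-modulo partitioning (illustrative only)."""
    # SHA-256 gives the same result in every process, unlike Python's salted hash().
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


def load_per_node(keys: list[str], nodes: list[str]) -> dict[str, int]:
    """Count how many keys each (hypothetical) node would store."""
    counts = Counter(node_for(k, nodes) for k in keys)
    return {n: counts.get(n, 0) for n in nodes}


keys = [f"user:{i}" for i in range(10_000)]
small = load_per_node(keys, ["node-1", "node-2"])
large = load_per_node(keys, ["node-1", "node-2", "node-3", "node-4"])
print(small)
print(large)
```

Each node's count should land near 10,000 divided by the number of nodes, so doubling the cluster roughly halves the per-node load. One caveat on this design choice: with modulo hashing, changing the number of nodes reassigns most keys to different nodes, so production systems often use consistent hashing or a fixed set of partitions instead.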

Distributed Computing in Cloud Computing

The growth of cloud computing options and vendors has made distributed computing even more accessible. Although cloud computing instances do not enable distributed computing by themselves, many types of distributed computing software run in the cloud to take advantage of computing resources that can be provisioned quickly.

Previously, organizations relied on database administrators (DBAs) or technology vendors to link computing resources over networks, both within and across data centers, so that those resources could be shared. Now, the leading cloud vendors make it easier to add servers to a cluster for additional storage capacity or computing performance.

Because new computing resources can be provisioned quickly and easily, distributed computing enables greater agility in handling growing workloads. It also supports “elasticity,” in which a cluster of computers can be expanded or contracted easily depending on the immediate workload requirements.
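Elasticity usually comes down to a sizing rule that is re-evaluated as the workload changes. The Python function below is a hypothetical policy, not any cloud vendor's API: it assumes each node can handle a fixed number of requests per second (`capacity_per_node`), adds a safety margin (`headroom_pct`), and clamps the result between a minimum and a maximum cluster size. All of these parameter names are illustrative.

```python
def nodes_needed(
    requests_per_sec: int,
    capacity_per_node: int,
    headroom_pct: int = 20,
    min_nodes: int = 1,
    max_nodes: int = 100,
) -> int:
    """Return the cluster size a simple (hypothetical) autoscaling policy would target."""
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    # Load plus headroom, kept in integer units to avoid floating-point rounding surprises.
    demand = requests_per_sec * (100 + headroom_pct)
    per_node = capacity_per_node * 100
    target = -(-demand // per_node)  # ceiling division
    # Never shrink below min_nodes or grow beyond max_nodes.
    return max(min_nodes, min(max_nodes, target))


# The cluster expands as load rises and contracts as it falls.
for load in (200, 1_000, 5_000, 1_000, 200):
    print(load, "req/s ->", nodes_needed(load, capacity_per_node=250), "nodes")
# targets 1, 5, 24, 5, 1 nodes
```

The `min_nodes` floor keeps the cluster available when load drops to zero, and the `max_nodes` ceiling caps cost if load spikes unexpectedly.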

Key Advantages

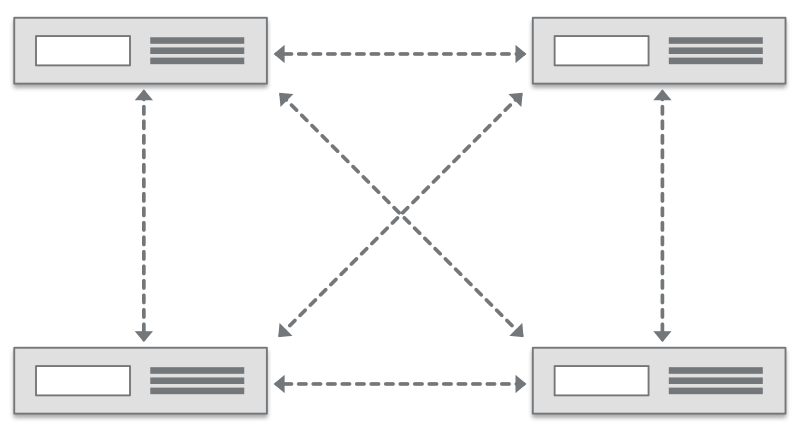

Distributed computing makes all computers in the cluster work together as if they were one computer. This multi-computer model adds some complexity, but it brings greater benefits in the following areas:

- Scalability. Distributed computing clusters are easy to scale through a “scale-out architecture” in which higher loads can be handled by simply adding new hardware (versus replacing existing hardware).

- Performance. Through parallelism, in which each computer in the cluster simultaneously handles a subset of an overall task, the cluster can achieve high levels of performance using a divide-and-conquer approach.

- Resilience. Distributed computing clusters typically copy, or “replicate,” data across multiple computer servers to ensure there is no single point of failure. If a computer fails, copies of its data are still stored on other servers, so no data is lost.

- Cost-effectiveness. Distributed computing typically leverages low-cost, commodity hardware, making initial deployments as well as cluster expansions very economical.
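The performance and resilience points can be illustrated together with a small in-process simulation. In this Python sketch, threads stand in for cluster machines, the node names and the `place_partitions` and `cluster_sum` helpers are hypothetical, and partitions are placed round-robin with a replication factor of 2 (an assumption; real systems choose placement and replication in many ways). Each live node sums the partitions it is responsible for, the partial results are combined, and if a node is marked as failed, its partitions are served from a surviving replica.

```python
from concurrent.futures import ThreadPoolExecutor


def place_partitions(num_partitions, nodes, replication_factor=2):
    """Map each partition to `replication_factor` distinct nodes, round-robin."""
    if not 1 <= replication_factor <= len(nodes):
        raise ValueError("replication_factor must be between 1 and the number of nodes")
    return {
        p: [nodes[(p + r) % len(nodes)] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def cluster_sum(data, nodes, failed=frozenset(), num_partitions=4, replication_factor=2):
    """Sum `data` by dividing it into partitions and summing each on a live replica."""
    # Divide: split the data into `num_partitions` interleaved chunks.
    chunks = [data[i::num_partitions] for i in range(num_partitions)]
    placement = place_partitions(num_partitions, nodes, replication_factor)

    # Route each partition to the first replica whose node has not failed.
    work = {}
    for p, replicas in placement.items():
        live = [n for n in replicas if n not in failed]
        if not live:
            raise RuntimeError(f"partition {p} unavailable: all replicas failed")
        work.setdefault(live[0], []).append(chunks[p])

    def run_on_node(node_chunks):
        # Each simulated node computes a partial result for its own partitions.
        return sum(sum(chunk) for chunk in node_chunks)

    # Conquer: run the nodes' work concurrently, then combine the partial results.
    with ThreadPoolExecutor(max_workers=len(work)) as pool:
        return sum(pool.map(run_on_node, work.values()))


nodes = ["node-a", "node-b", "node-c"]
data = list(range(1, 101))
print(cluster_sum(data, nodes))                     # 5050
print(cluster_sum(data, nodes, failed={"node-b"}))  # 5050, served from replicas
```

Because of CPython's global interpreter lock, these threads show the divide-and-conquer structure rather than a real speedup; an actual cluster would send each partition's work to a separate machine. Note also that with a replication factor of 2, losing both nodes that hold a partition makes it unavailable, which the sketch reports as an error.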