Hazelcast Resilient to Kubernetes Node and Zone Failures

Author’s note: The blog updates the original post written in 2019.

Data is valuable. Or I should write some data that is valuable. You may think that if the data is important to you, you must store it in a persistent volume, like a database or filesystem. This sentence is obviously true. However, there are many use cases where you don’t want to sacrifice the benefits of in-memory data stores. After all, no persistent database provides fast data access or allows us to combine data entries with high flexibility. Then, how to keep your in-memory data safe? That is what I’m going to present in this blog post.

High Availability

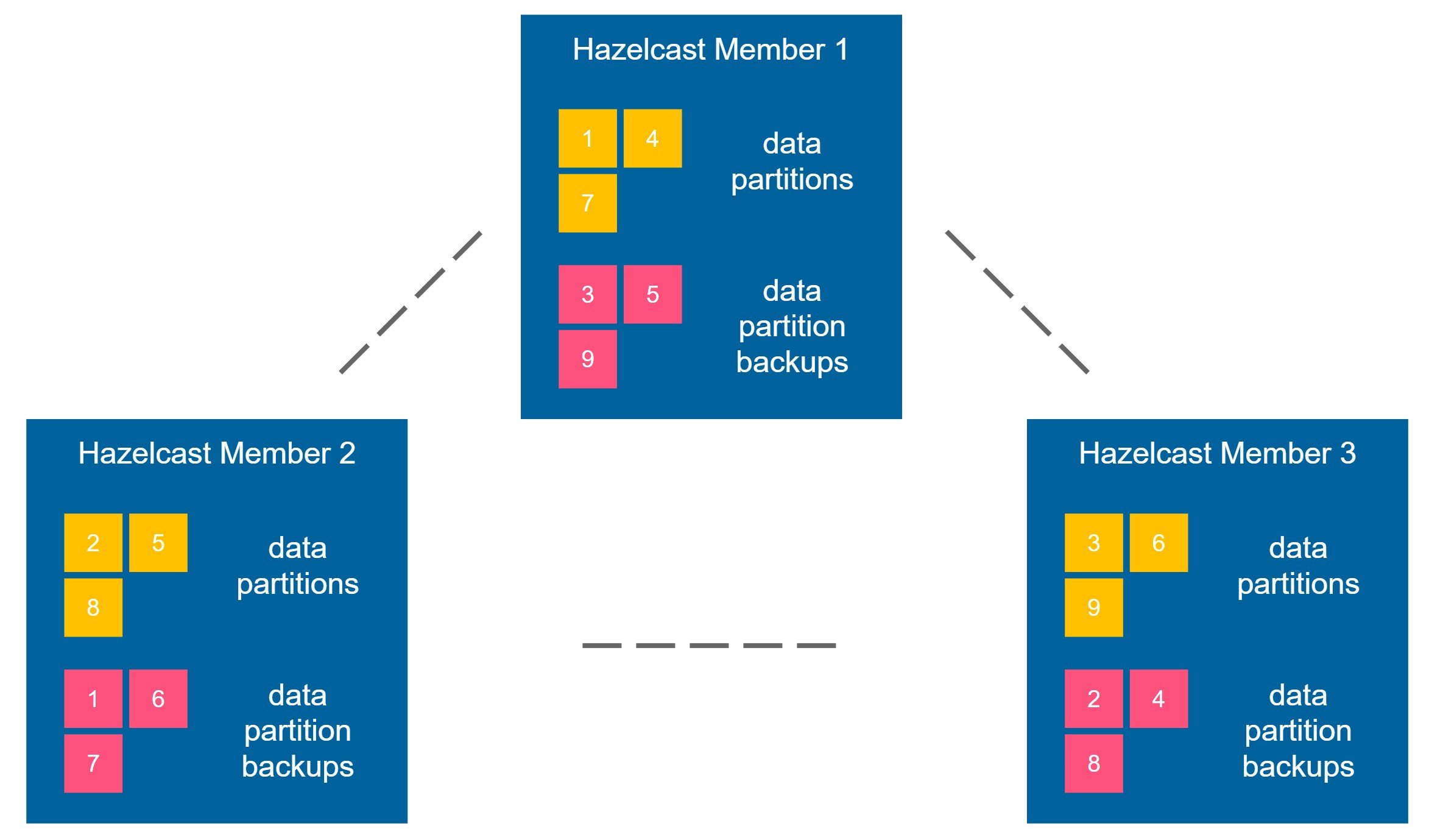

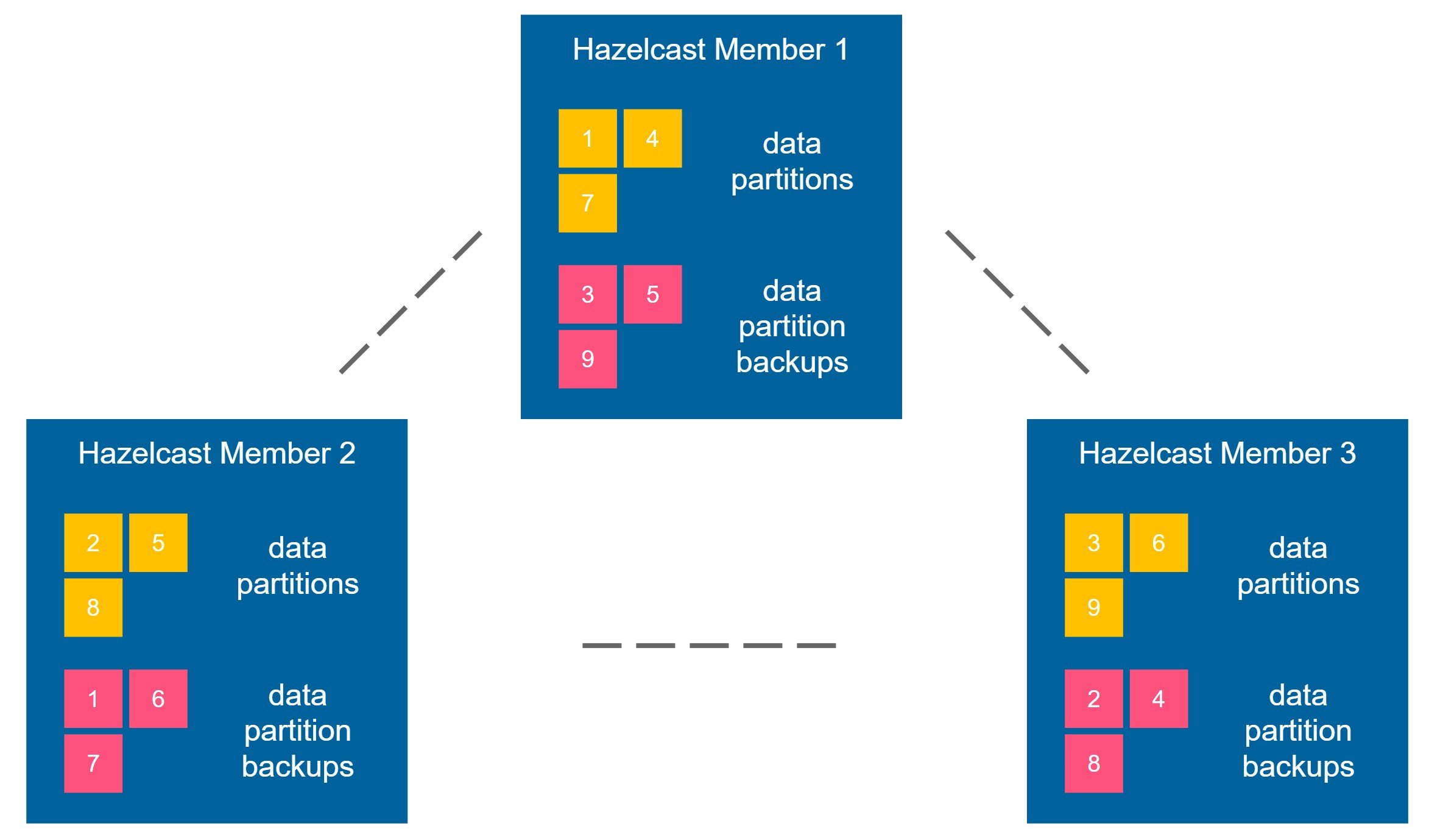

Hazelcast is distributed and highly available by nature. It’s achieved by keeping the data partition backup always on another Hazelcast member. For example, let’s look at the diagram below.

Imagine you put some data into the Hazelcast cluster, for example, a key-value entry (“foo”, “bar”). It is placed into data partition 1, and this partition is situated in member 1. Now, Hazelcast guarantees that the backup of any partition is kept in a different member. So, in our example, the backup of partition 1 could be placed in member 2 or 3 (but never in member 1). Backups are also propagated synchronously, so strict consistency is preserved.

Imagine that member 1 crashes. What happens next is that the Hazelcast cluster detects it, promotes the backup data, and creates a new backup. This way, you can always be sure that if any of the Hazelcast members crashes, you’ll never lose any data. That is what I call “high availability by nature.”

We can increase the backup-count property and propagate the backup data synchronously to multiple members simultaneously. However, the performance would suffer. In the corner case scenario, we could have backup-count equal to number of members, and even if all members except for one crash, the data is not lost. Such an approach, however, would not only be prolonged (because we have to propagate all data to all members synchronously) but also use a lot of in-memory data. That is why it’s not very common to increase the backup-count. For the simplicity of this post, let’s say that we’ll always keep its value as 1.

High Availability on Kubernetes

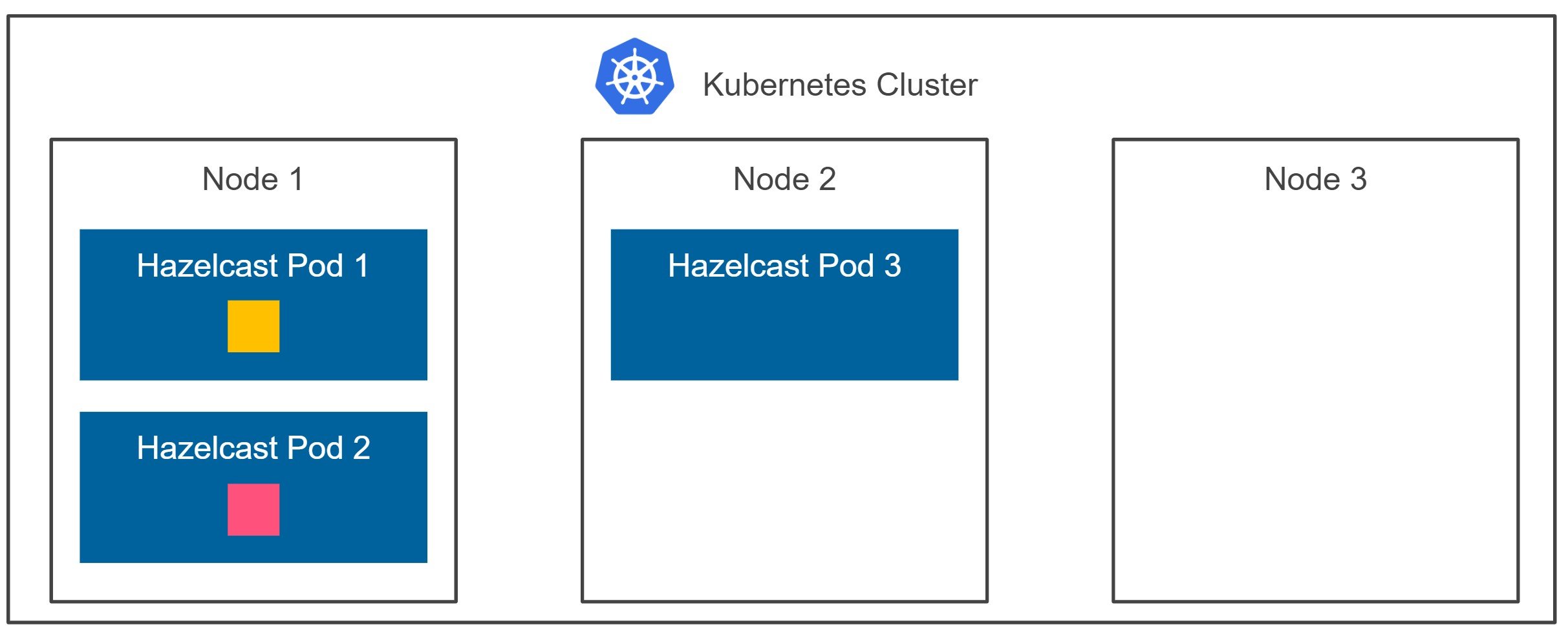

Let’s move the terminology from the previous section to Kubernetes. We’re confident that if one Hazelcast pod fails, we don’t experience any data loss. So far, so good. It sounds like we are highly available, right? Well… yes and no. Let’s look at the diagram below.

Kubernetes may schedule two of your Hazelcast member pods to the same node, as presented in the diagram. Now, if node 1 crashes, we experience data loss. That’s because both the data partition and the data partition backup are effectively stored on the same machine. How do you think I could solve this problem?

Luckily, Kubernetes is quite flexible, so we may ask it to schedule each pod on a different node. Starting from Kubernetes 1.16, you can achieve it by defining Pod Topology Spread Constraints.

Let’s assume you want to run a 6-members Hazelcast cluster on a 3 nodes Kubernetes cluster.

$ kubectl get nodes NAME STATUS ROLES AGE VERSION my-cluster-default-pool-17b544bc-4467 Ready <none> 31m v1.24.8-gke.2000 my-cluster-default-pool-17b544bc-cb58 Ready <none> 31m v1.24.8-gke.2000 my-cluster-default-pool-17b544bc-38js Ready <none> 31m v1.24.8-gke.2000

Now you can start the installation of the cluster using Helm.

$ helm install hz-hazelcast hazelcast/hazelcast -f - <<EOF

hazelcast:

yaml:

hazelcast:

partition-group:

enabled: true

group-type: NODE_AWARE

cluster:

memberCount: 6

topologySpreadConstraints:

- maxSkew: 1

topologyKey: kubernetes.io/hostname

whenUnsatisfiable: ScheduleAnyway

labelSelector:

matchLabels:

"app.kubernetes.io/instance": hz-hazelcast

EOF

Let’s comment on the parameters we just used. It contains three interesting parts:

– enabling NODE_AWARE for the Hazelcast partition-group

– setting topologySpreadConstraints spreads all the Hazelcast pods among the nodes.

Now you can see that the six pods are equally spread among the nodes.

$ kubectl get po -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES hz-hazelcast-0 1/1 Running 0 15m 10.101.1.5 my-cluster-default-pool-17b544bc-4467 <none> <none> hz-hazelcast-1 1/1 Running 0 15m 10.101.5.6 my-cluster-default-pool-17b544bc-cb58 <none> <none> hz-hazelcast-2 1/1 Running 0 14m 10.101.1.6 my-cluster-default-pool-17b544bc-38js <none> <none> hz-hazelcast-3 1/1 Running 0 13m 10.101.6.1 my-cluster-default-pool-17b544bc-4467 <none> <none> hz-hazelcast-4 1/1 Running 0 12m 10.101.2.5 my-cluster-default-pool-17b544bc-cb58 <none> <none> hz-hazelcast-5 1/1 Running 0 12m 10.101.6.5 my-cluster-default-pool-17b544bc-38js <none> <none>

Note that with such a configuration, your Hazelcast member counts must be multiple of node count; otherwise, it won’t be possible to distribute the backups between your cluster members equally.

All in all, with some additional effort, we achieved high availability on Kubernetes. So, let’s see what happens next.

Multi-zone High Availability on Kubernetes

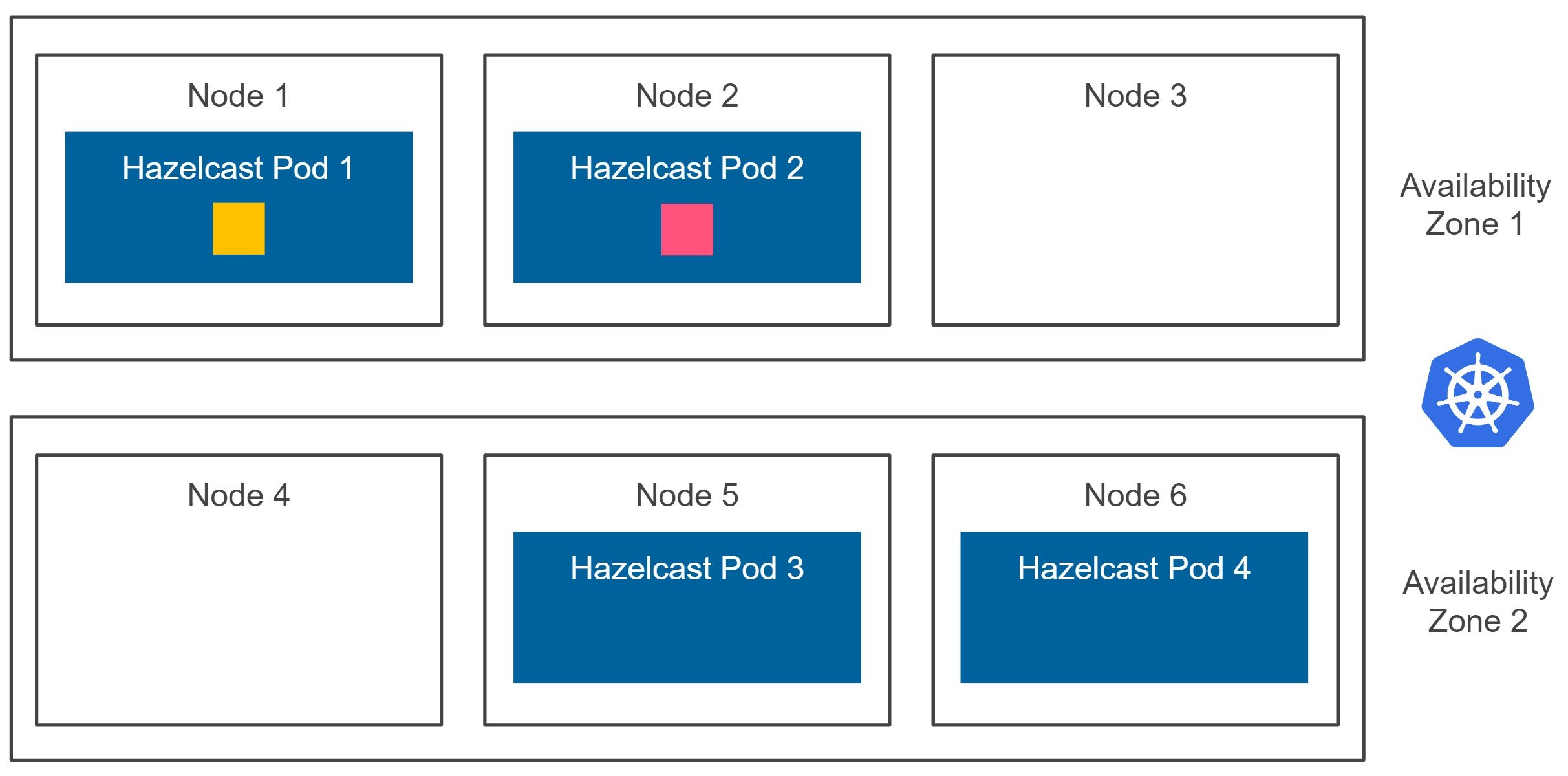

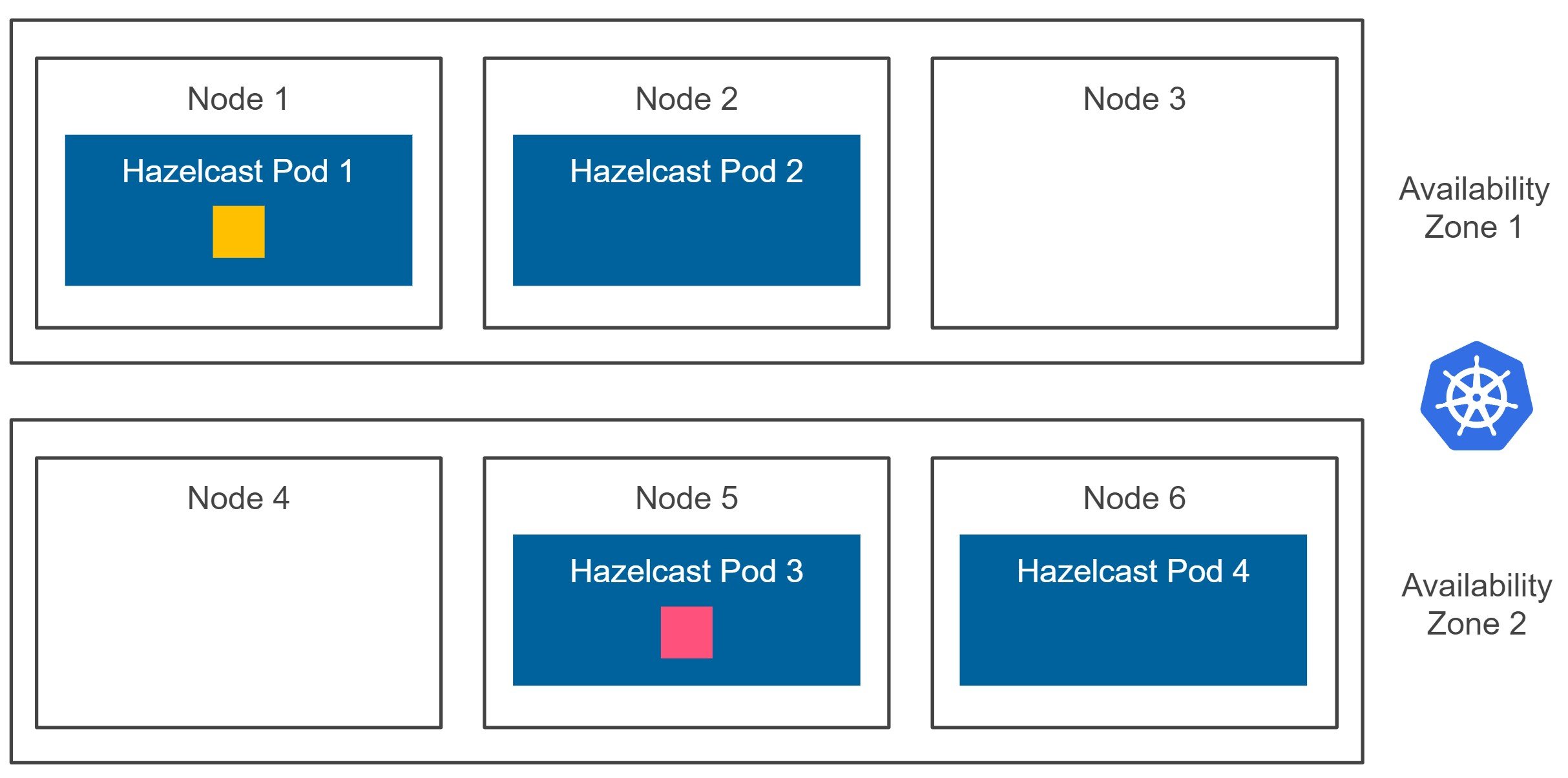

We’re sure that if any of the Kubernetes nodes fail, we don’t lose any data. However, what happens if the whole availability zone fails? First, let’s look at the diagram below.

Kubernetes cluster can be deployed in one or many availability zones. Usually, for the production environments, we should avoid having just one single availability zone because any zone failure would result in the downtime of our system. If you use the Google Cloud Platform, you can start a multi-zone Kubernetes cluster with one click (or one command). On AWS, you can easily install it with kops, and Azure offers multi-zone Kubernetes service as part of AKS (Azure Kubernetes Service). Now, when you look at the diagram above, what happens if the availability zone 1 is down? We experience data loss because both the data partition and the data partition backup are effectively stored inside the same zone.

Luckily, Hazelcast offers the ZONE_AWARE functionality, which forces Hazelcast members to store the given data partition backup inside a member located in a different availability zone. Having the ZONE_AWARE feature enabled, we end up with the following diagram.

Let me stress it again. Hazelcast guarantees that the data partition backup is stored in a different availability zone. So, even if the whole Kubernetes availability zone is down (and all related Hazelcast members are terminated), we won’t experience any data loss. That is what should be called the real high availability on Kubernetes! And you should always go ahead and configure Hazelcast in that manner. How to do it? Let’s now look into the configuration details.

Hazelcast ZONE_AWARE Kubernetes Configuration

One of the requirements for the Hazelcast ZONE_AWARE feature is to set an equal number of members in each availability zone. You can as well achieve it by defining Pod Topology Spread Constraints.

Let’s assume the cluster of 6 nodes with the availability zones named us-central1-a and us-central1-b.

$ kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

my-cluster-default-pool-28c6d4c5-3559 Ready <none> 31m v1.24.8-gke.2000 <...>,topology.kubernetes.io/zone=us-central1-a

my-cluster-default-pool-28c6d4c5-ks35 Ready <none> 31m v1.24.8-gke.2000 <...>,topology.kubernetes.io/zone=us-central1-a

my-cluster-default-pool-28c6d4c5-ljsr Ready <none> 31m v1.24.8-gke.2000 <...>,topology.kubernetes.io/zone=us-central1-a

my-cluster-default-pool-654dbc0c-9k3r Ready <none> 31m v1.24.8-gke.2000 <...>,topology.kubernetes.io/zone=us-central1-b

my-cluster-default-pool-654dbc0c-g809 Ready <none> 31m v1.24.8-gke.2000 <...>,topology.kubernetes.io/zone=us-central1-b

my-cluster-default-pool-654dbc0c-s9s2 Ready <none> 31m v1.24.8-gke.2000 <...>,topology.kubernetes.io/zone=us-central1-b

Now you can start the installation of the cluster using Helm.

$ helm install hz-hazelcast hazelcast/hazelcast -f - <<EOF

hazelcast:

yaml:

hazelcast:

partition-group:

enabled: true

group-type: ZONE_AWARE

cluster:

memberCount: 6

topologySpreadConstraints:

- maxSkew: 1

topologyKey: topology.kubernetes.io/zone

whenUnsatisfiable: ScheduleAnyway

labelSelector:

matchLabels:

"app.kubernetes.io/instance": hz-hazelcast

EOF

The configuration is similar to one seen before, with small differences:

– enabling ZONE_AWARE for the Hazelcast partition-group

– setting topologySpreadConstraints spreads all the Hazelcast pods among the availability zones.

Now you can see that the six pods are equally spread among the nodes in two availability zones, and they all form a cluster.

$ kubectl get po -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES hz-hazelcast-0 1/1 Running 0 20m 10.108.7.5 my-cluster-default-pool-28c6d4c5-3559 <none> <none> hz-hazelcast-1 1/1 Running 0 19m 10.108.6.4 my-cluster-default-pool-654dbc0c-9k3r <none> <none> hz-hazelcast-2 1/1 Running 0 19m 10.108.0.4 my-cluster-default-pool-28c6d4c5-ks35 <none> <none> hz-hazelcast-3 1/1 Running 0 18m 10.108.1.5 my-cluster-default-pool-654dbc0c-g809 <none> <none> hz-hazelcast-4 1/1 Running 0 17m 10.108.6.5 my-cluster-default-pool-28c6d4c5-ljsr <none> <none> hz-hazelcast-5 1/1 Running 0 16m 10.108.2.6 my-cluster-default-pool-654dbc0c-s9s2 <none> <none>

$ kubectl logs hz-hazelcast-0

...

Members {size:6, ver:6} [

Member [10.108.7.5]:5701 - a78c6b6b-122d-4cd6-8026-a0ff0ee97d0b this

Member [10.108.6.4]:5701 - 560548cf-eea5-4f07-82aa-1df2d63a4a47

Member [10.108.0.4]:5701 - fa5f89a4-ee84-4b4e-993a-3b0d88284826

Member [10.108.1.5]:5701 - 3ecb97bd-b1ea-4f46-b7f0-d649577c1a92

Member [10.108.6.5]:5701 - d2620d61-bba6-4865-b6a6-9b7a417d7c49

Member [10.108.2.6]:5701 - 1cbef695-6b5d-466b-93c4-5ec36c69ec9b

]

...

What we just deployed is a Hazelcast cluster resilient to Kubernetes zone failures. Just to add, if you want to have your cluster deployed on more zones with the same Helm installation, please don’t hesitate to let me know. Note, however, that it won’t mean that if 2 zones fail simultaneously, you don’t lose data. Hazelcast guarantees that the data partition backup is stored in the member, which is always located in a different availability zone.

Hazelcast Platform Operator

With the Hazelcast Platform Operator, you can achieve the same effect much more easily. All you need is to apply the Hazelcast CR with the highAvailabilityMode parameter set to NODE to achieve resilience against node failures.

$ kubectl apply -f - <<EOF apiVersion: hazelcast.com/v1alpha1 kind: Hazelcast metadata: name: hz-hazelcast spec: clusterSize: 6 highAvailabilityMode: NODE EOF

Or if you have a multi-zone cluster and you want to have a cluster that is resilient against zone failures, you can use the highAvailabilityMode set to ZONE.

$ kubectl apply -f - <<EOF apiVersion: hazelcast.com/v1alpha1 kind: Hazelcast metadata: name: hz-hazelcast spec: clusterSize: 6 highAvailabilityMode: ZONE EOF

And the Operator will configure both the partition-group and the topologySpreadConstraints to guarantee the needed level of high availability.

Hazelcast Cloud

Last but not least, Hazelcast multi-zone deployments will soon be available in the managed version of Hazelcast. You can check it at cloud.hazelcast.com. By ticking multiple zones in the web console, you can enable the multi-zone high availability level for the Hazelcast deployment. It’s no great secret that while implementing Hazelcast Cloud internally, we used the same strategy described above.

Conclusion

In the cloud era, multi-zone high availability usually becomes a must. Zone failures happen, and we’re no longer safe just by having our services on different machines. That is why any production-ready deployment of Kubernetes should be regional in scope and not only zonal. The same applies to Hazelcast. Enabling ZONE_AWARE is highly recommended, especially because Hazelcast is often used as a stateful backbone for stateless services. If your Kubernetes cluster is deployed only in one availability zone, please at least make sure Hazelcast partition backups are always effectively placed on a different Kubernetes node. Otherwise, your system is not highly available, and any machine failure may result in data loss.