The One Beam to Rule Them All

Unbounded, unordered, global-scale datasets are increasingly common in day-to-day business – IoT sensor network data streams, mobile usage statistics, large scale monitoring, the list is endless. Numerous applications seek the ability to quickly react to dynamic streaming data, as it is either a mandatory requirement or a competitive advantage.

API Churn

As a consequence, lots of companies see stream processing as a valid technology, however many consider it still to be evolving too fast. The programming interface of Spark changed 3 times over the last 5 years (from low-level RDDs to something similar to database tables) with Flink and Kafka experiencing similar issues in their own flavours. It’s not just about the API, it’s about the whole programming model (how to reason about streaming data).

At Hazelcast, we take API stability seriously. We only released our GA version of Jet earlier this year after we were certain we had a stable API which would remain stable through the minor release 3.x version range.

API Standardisation

This is what Apache Beam addresses. It defines concepts for unified batch and stream processing, concepts that should last. Even its name is a combination of these two words: Batch + strEAM. It is an evolution of the Dataflow model created by Google.

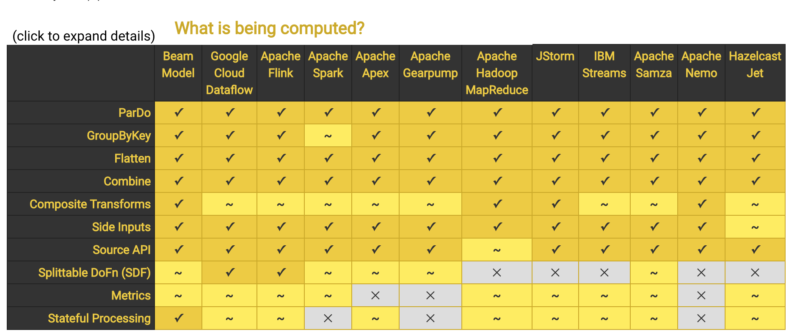

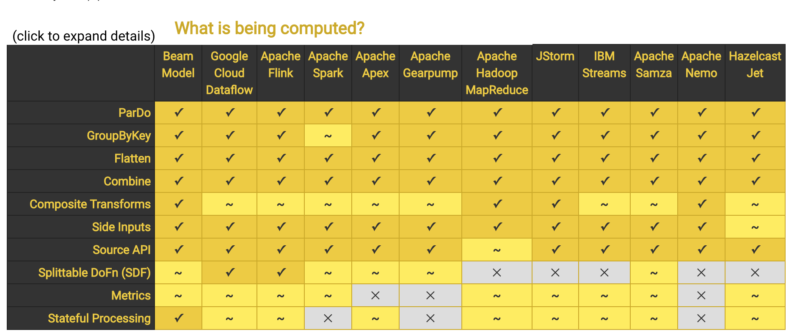

Using Beam should prevent vendor lock-in and provides SDKs in multiple languages for defining stream processing pipelines. It supports Java, Python, Go and various DSLs like SQL querying of Beam data structures. Pipeline definitions are then executed by one of Beam’s supported distributed processing back-ends, called Runners. The Runners translate the data processing pipeline into a form compatible with a specific back-end.

Developers only need to learn the Beam API and their programs should run without modifications on any of the supported backends. The choice of the back-end should be only one of performance and resource consumption. Switching overhead from one stream processing framework to another should be minimal to none.

Everything comes at a cost though. Beam is a generalisation and integration layer. It will be always behind stream processing framework feature-wise. The integration layer brings complexity and prevents low-level optimisations, so performance suffers.

Despite the costs, however, the idea is sound and the objective is worthwhile. In January 2017, Beam got promoted as a Top-Level Apache Software Foundation project. It was an important milestone that validated the value of the project, the legitimacy of its community, and heralded its growing adoption. In the past year, Apache Beam has experienced tremendous momentum, with significant growth in both its community and feature set.

Announcing Hazelcast Jet Beam Runner

As of May 2019, Hazelcast Jet has become the latest runner for Apache Beam. Even though we too have to pay the costs of a generalisation wrapper, this step is an important validation of our past work. It is proof of Jet’s maturity and feature richness.

Since that release, we are continuing our optimization of the Apache Beam Runner in order to bring performance close to the native capabilities of Jet.

It is worth pointing out how well Jet fits with Beam’s take on things at the conceptual level. One of the main reasons Apache Beam is lauded is the fundamental shift of approach that it initiated. It has stopped trying to groom unbounded datasets into finite pools of information that eventually become complete. Instead, it started to live and breathe under the assumption that we will never know if or when we have seen all of our data, only that as new data will arrive, old data may be retracted. The only way to make this problem tractable is to allow the practitioner the choice of the appropriate trade-offs along the axes of interest: correctness, latency and cost. In Jet, these things aren’t an innovation; they are the norm.

Consumers of the global datasets dealt with by streaming have evolved sophisticated requirements, such as event-time ordering and windowing features of the data themselves, in addition to an insatiable hunger for faster answers. Beam is an innovative solution that offers all of these and despite that, when writing the Jet-based Runner, we have never come across anything that wasn’t also in or incompatible with Jet on a fundamental level.

The value the Jet Runner brings to Beam is a next-generation option for its users, one more back-end alternative which is the best choice for some of their use cases. For example, once optimized, the Jet Runner could become a low footprint Runner due to it using cooperative multithreading (similar to green threads). Due to being part of the Beam Runner family, users can now find out about Jet more easily, more people will be able to identify Jet as the perfect solution to their specific problem and ultimately this is a huge gain for Jet too. Everybody wins.

Major kudos to Hazelcast engineer, Jozsef Bartok, for driving this initiative and co-authoring this post.