What Is Real-Time Machine Learning?

Real-time machine learning is the process of training a machine learning model on live data as it arrives, so that the model improves continuously. This contrasts with “traditional” machine learning, in which a data scientist builds the model offline from a batch of historical training data.

Real-time machine learning is useful when not enough data is available upfront for training, and when the model must adapt to new patterns in the data. For example, consumer tastes and preferences change over time, and a product recommendation engine that learns continuously can adjust to those changes without a separate retraining effort. By recognizing new patterns as they emerge and adapting to reflect them, real-time machine learning can keep predictions accurate for companies and their customers even as the underlying data shifts.
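To make the idea of learning from live data concrete, here is a minimal sketch of a model that learns one example at a time. It assumes a one-feature linear model trained with stochastic gradient descent (SGD) as a stand-in for a real recommendation model; the names `OnlineLinearModel`, `learn_one`, and `stream` are hypothetical, and the synthetic stream changes its underlying slope halfway through to mimic shifting preferences.

```python
from itertools import cycle, islice
from typing import Iterator, Tuple


class OnlineLinearModel:
    """Hypothetical one-feature linear model y ~ w * x, updated one example at a time with SGD."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.w = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x

    def learn_one(self, x: float, y: float) -> None:
        # One SGD step on this single example's squared error.
        error = y - self.predict(x)
        self.w += self.learning_rate * error * x


def stream(slope: float, n: int) -> Iterator[Tuple[float, float]]:
    """Hypothetical live stream of (x, y) pairs following y = slope * x."""
    for x in islice(cycle([0.5, 1.0, 1.5, 2.0]), n):
        yield x, slope * x


model = OnlineLinearModel(learning_rate=0.1)

# Phase 1: the model learns the original pattern (slope 2.0) from live data.
for x, y in stream(slope=2.0, n=200):
    model.learn_one(x, y)
print(f"after original pattern: w = {model.w:.3f}")

# Phase 2: the pattern shifts (slope -1.0); the same loop adapts the model
# without a separate offline retraining job.
for x, y in stream(slope=-1.0, n=200):
    model.learn_one(x, y)
print(f"after shifted pattern:  w = {model.w:.3f}")
```

The printed weight moves from about 2 to about -1. A constant learning rate keeps the model responsive to recent data; a rate that decays toward zero would settle more tightly but react more slowly to a shift.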

Machine Learning Deployment Architecture

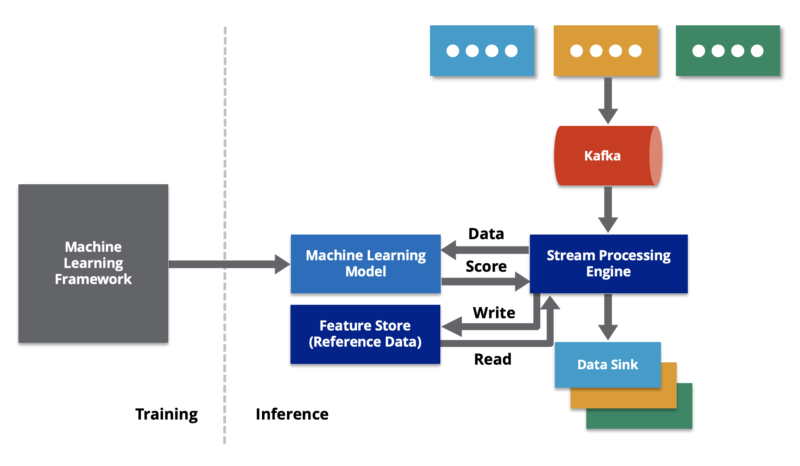

A common way to deploy a real-time machine learning model to production is an event-driven architecture, in which a data stream (i.e., a continuous flow of incoming data) is fed into the model. The processing pipeline for that stream handles any transformations and enrichment needed to make the data ready for input into the model. At the same time, the pipeline uses the live data to update the model, as well as the reference data set that the model uses.
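The event-driven flow can be sketched in plain Python, with a simple loop standing in for a stream processor. Everything here is hypothetical: `transform`, `enrich`, the `USER_REGION` lookup table, and the toy `RegionPopularityModel` are illustrative names, not part of any particular streaming product. Each event is transformed, enriched with reference data, scored, and then used to update the model.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Optional, Tuple

# Hypothetical reference data used to enrich raw events.
USER_REGION: Dict[str, str] = {"u1": "EU", "u2": "US"}


def transform(raw: dict) -> dict:
    """Normalize a raw event: clean up the category name, convert cents to dollars."""
    return {
        "user_id": raw["user_id"],
        "category": raw["category"].strip().lower(),
        "amount": raw["amount_cents"] / 100,
    }


def enrich(event: dict) -> dict:
    """Join the event with reference data; unknown users get a default region."""
    return {**event, "region": USER_REGION.get(event["user_id"], "UNKNOWN")}


class RegionPopularityModel:
    """Toy online model: recommends the category bought most often in a region so far."""

    def __init__(self) -> None:
        self.counts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))

    def predict(self, event: dict) -> Optional[str]:
        region_counts = self.counts[event["region"]]
        if not region_counts:
            return None  # nothing learned for this region yet
        return max(region_counts, key=region_counts.get)

    def learn_one(self, event: dict) -> None:
        self.counts[event["region"]][event["category"]] += 1


def run_pipeline(
    stream: Iterable[dict], model: RegionPopularityModel
) -> Iterator[Tuple[dict, Optional[str]]]:
    for raw in stream:
        event = enrich(transform(raw))         # make the event model-ready
        recommendation = model.predict(event)  # serve a prediction first...
        model.learn_one(event)                 # ...then update the model with the same event
        yield event, recommendation


events = [
    {"user_id": "u1", "category": " Books", "amount_cents": 1299},
    {"user_id": "u1", "category": "books", "amount_cents": 899},
    {"user_id": "u2", "category": "Games", "amount_cents": 4999},
    {"user_id": "u3", "category": "music", "amount_cents": 999},
]
for event, rec in run_pipeline(events, RegionPopularityModel()):
    print(event["user_id"], event["region"], event["category"], "-> recommended:", rec)
```

Scoring each event before learning from it means every prediction is made on data the model has not yet seen. A production deployment would replace the list with a real event stream and the dictionaries with a shared, low-latency store.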

An important component of this architecture is the data store that holds reference data, which is continually updated from new data points in the stream. This store is known as the feature store, and it holds the features the model is trained on and queried with. In real-time deployments, especially those with high rates of input data, the feature store must serve reads and writes with extremely low latency, which is why it is ideally powered by in-memory technologies.
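As a rough illustration of the feature store's role, the sketch below keeps per-user features in a Python dictionary, which stands in for an in-memory data store. `InMemoryFeatureStore`, `UserFeatures`, and the chosen features (purchase count, mean purchase amount, last-seen timestamp) are hypothetical. Each incoming event updates the aggregates in place, so the model can read fresh features with a single lookup.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class UserFeatures:
    purchase_count: int = 0
    mean_amount: float = 0.0
    last_seen_ts: Optional[float] = None


class InMemoryFeatureStore:
    """Hypothetical feature store: per-user features held in a dict and updated on every event."""

    def __init__(self) -> None:
        self._features: Dict[str, UserFeatures] = {}

    def update(self, user_id: str, amount: float, ts: float) -> None:
        features = self._features.setdefault(user_id, UserFeatures())
        features.purchase_count += 1
        # Incremental mean: constant work per event, no need to store the full history.
        features.mean_amount += (amount - features.mean_amount) / features.purchase_count
        features.last_seen_ts = ts

    def get(self, user_id: str) -> UserFeatures:
        # Single dict lookup; unknown users get default (empty) features.
        return self._features.get(user_id, UserFeatures())


store = InMemoryFeatureStore()
store.update("u1", amount=10.0, ts=1.0)
store.update("u1", amount=30.0, ts=2.0)
print(store.get("u1"))  # UserFeatures(purchase_count=2, mean_amount=20.0, last_seen_ts=2.0)
print(store.get("u2"))  # UserFeatures(purchase_count=0, mean_amount=0.0, last_seen_ts=None)
```

Updating running aggregates incrementally, rather than recomputing them from the full event history, keeps the per-event cost constant. A real deployment would also need concurrency control, persistence or replication, and eviction, which this sketch omits.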

What Is Online Machine Learning?

Online machine learning is another term for real-time machine learning. The term implies that models learn incrementally as they run against new data while “online,” as opposed to learning from batches of training data.
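A common way to run and evaluate an online learner is the test-then-train (also called prequential) loop: each arriving example is first used to check the current model's prediction and then to update it, so accuracy is measured on data the model has not yet trained on, without a separate held-out batch. The sketch below applies this loop to the classic perceptron, a standard online learning algorithm; the class name `OnlinePerceptron`, the tiny synthetic stream, and encoding the bias as a constant feature are illustrative assumptions.

```python
from itertools import cycle, islice
from typing import List


class OnlinePerceptron:
    """Classic perceptron: updates its weights only when it misclassifies an example."""

    def __init__(self, n_features: int) -> None:
        self.w = [0.0] * n_features

    def score(self, x: List[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def learn_one(self, x: List[float], y: int) -> bool:
        """Test-then-train step: returns True if the prediction was correct before updating."""
        correct = y * self.score(x) > 0
        if not correct:
            # Mistake-driven update: move the weights toward the correct label.
            self.w = [wi + y * xi for wi, xi in zip(self.w, x)]
        return correct


# Hypothetical stream: one feature plus a constant bias term (1.0);
# the label is +1 when the feature is positive, -1 otherwise.
points = [-2.0, -1.0, 1.0, 2.0]
stream = (([p, 1.0], 1 if p > 0 else -1) for p in islice(cycle(points), 8))

model = OnlinePerceptron(n_features=2)
n_seen = n_correct = 0
for x, y in stream:
    n_correct += int(model.learn_one(x, y))
    n_seen += 1
    print(f"event {n_seen}: running accuracy = {n_correct / n_seen:.2f}")
```

On this stream the perceptron misclassifies the first event, updates its weights, and classifies the rest correctly, so the running accuracy climbs as more data arrives.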