The Power of the Hazelcast Community: A Recap of the Real-Time Stream Processing Unconference

Earlier this month, Hazelcast hosted the Real-Time Stream Processing Unconference, a peer-to-peer event focused on trends in real-time analytics, the future of stream processing and getting started with “real-time”. The hybrid event took place at London’s famous Code Node and was streamed via LinkedIn, YouTube and Twitch.

The Real-Time Stream Processing Workshop

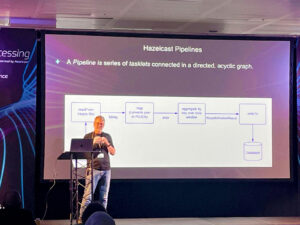

One benefit of attending in person was the pre-Unconference workshop on real-time stream processing, led by Randy May. During this session, participants learned to build solutions that react to events in real time and to deploy those solutions to Hazelcast Viridian Serverless. More specifically, participants walked away with a number of new skills, including:

- Conceptual foundations needed to successfully implement stream processing solutions, such as the basic elements of a Pipeline.

- How to build a real-time stream processing solution.

- How to deploy and scale the solution using Hazelcast Viridian Serverless.

Everyone at the Stream Processing Fundamentals Workshop earned a digital badge they can use to showcase their new stream processing skills on LinkedIn and in job interviews. Based on the early feedback, it’s safe to say we’re looking forward to more of these workshops in the future!

The Real-Time Community

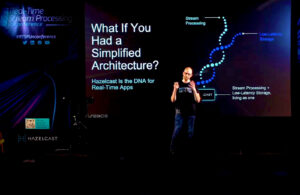

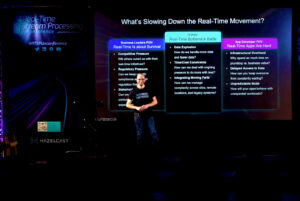

Moving on to the Unconference itself, I presented the Hazelcast Real-Time Community, a member-driven community for creating and sharing use cases and solutions for real-time stream processing applications.

Since joining Hazelcast, I’ve spent a lot of time speaking with members of the community at meetups, at industry events and in our Slack community. As this was my first time broadly addressing the community, I wanted to open the event by sharing what I had learned from those conversations, along with the vision for the Real-Time Community, a new initiative within the company. The TL;DR version:

- Make the community feel at home

- Build a community-based portal

- Listen to the community and act accordingly

Finally, I wrapped up the opening session with an open, honest conversation about “real-time” and Hazelcast’s perspective on this rapidly growing topic. The conversation covered the confusion surrounding the trend, what is slowing it down, the DNA of real-time stream processing and how to simplify software architecture.

The Real Fun of Real-Time

From there, the real fun began with a look at the future of real-time stream processing. This section covered a lot of ground, from where data is processed, to taking action in real time, to understanding where and when to enrich data with historical context, so here is your TL;DR summary:

- The new horizon for the data-driven enterprise of 2025

- This horizon impacts all apps

- Getting started means deploying a high-value, real-time stream processing engine that enriches new data with the historical context of stored data.

The Real-Time Stream Processing Roundtable Discussion

The Unconference wrapped up with a roundtable discussion among real-time experts from all walks of life: daily practitioners, industry partners, academia, community members and, of course, open source and paid users. The panelists and audience discussed current trends in real-time stream processing, the challenges of real-time stream processing and how to benchmark real-time stream processing applications.

The inaugural Unconference was an amazing success, and I’d like to thank everyone who attended, both in person and virtually. I’d also like to congratulate the newest Hazelcast Hero, Martin W. Kirst, for his valuable contributions to our community. During the day, Martin works for Oliver Wyman, and at night he patrols the community offering bits of wisdom and amazing contributions.

A special thank you, from all of us at Hazelcast, to all of the presenters for generously giving their time and helping make the event a success:

– Tim Spann 🥑 : Tim is a Principal Developer Advocate in Data In Motion for Cloudera.

– Dr. Guenter Hesse: Dr. Hesse drives the group data integration platform (GRIP) at Volkswagen AG as Business Partner Manager and Cloud Architect. He created a new benchmark for comparing data stream processing architectures and demonstrated it by comparing three different stream processing systems, one of which was Hazelcast Jet.

– Mateusz Kotlarz: Mateusz is a software developer at BNP Paribas Bank Polska with around nine years of experience. For the last six years, he has focused primarily on integration projects using Hazelcast technology for one of the biggest banks in Poland.

– Vishesh Ruparelia: Vishesh is a software engineer, currently at AppDynamics, with a keen interest in data processing and storage. With a background in computer science, he actively seeks new opportunities to expand his knowledge and experience in the field. Vishesh has been exploring stream processing capabilities lately to gain a deeper understanding of their potential applications.

– From Hazelcast: Manish Devgan, Chief Product Officer; Karthic Thope, Head of Cloud Engineering; Avtar Raikmo, Head of Platform Engineering; and Randy May, Industrial Solutions Advocate.

Finally, make sure to follow and join the community to stay up to date on the latest news, including future community events:

– Slack: https://slack.hazelcast.com/

– Twitch: https://www.twitch.tv/thehazelcast

– Watch the event: https://www.youtube.com/watch?v=GncQz8HZr68