Five Reasons to Upgrade to Hazelcast Enterprise

There are many data processing solutions out there, so how do you choose the right one for your organization? In this article, we’ll explain how Hazelcast Enterprise works and the top five reasons for implementing our platform – and the risks if you don’t.

Before we dive into the platform itself, let’s put things into context with a few big trends shaping the market. Perhaps the biggest is digital transformation: the collection of activities that change how businesses pursue their data strategies so that they can use data more effectively.

As more organizations explore the notion of digital transformation, they are increasingly turning to the public cloud to boost agility and increase automation via artificial intelligence and machine learning. We’re seeing application architectures evolve as customers utilize microservices as a more agile and simpler way to deploy business systems. And underpinning all these digital initiatives is data, or rather the need to harness these growing volumes of data and support data-driven success.

Traditionally, data is processed in batches based on a schedule or a predefined threshold – but with digitization and ballooning data volumes, this approach is falling short. Enter stream processing, a practice that takes action on a series of data as it’s created, allowing applications to respond to data events at the moment they occur. These stream-based systems augment digital initiatives across all industries.
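
To make the contrast concrete, here is a minimal sketch in plain Python. It is not Hazelcast code; the function names, the `limit` threshold, and the sample amounts are all hypothetical and chosen only for illustration. The batch function acts only once enough events have accumulated, while the stream function inspects each event as it arrives.

```python
from typing import Callable, Iterable, List


def process_in_batches(events: Iterable[float], batch_size: int) -> List[float]:
    """Batch style: act only once `batch_size` events have accumulated."""
    totals: List[float] = []
    batch: List[float] = []
    for amount in events:
        batch.append(amount)
        if len(batch) == batch_size:
            totals.append(sum(batch))
            batch = []
    if batch:  # flush the final, partially filled batch
        totals.append(sum(batch))
    return totals


def process_as_stream(events: Iterable[float],
                      on_alert: Callable[[float], None],
                      limit: float) -> float:
    """Stream style: react to each event as soon as it is seen."""
    running_total = 0.0
    for amount in events:
        running_total += amount
        if amount > limit:
            on_alert(amount)  # the reaction happens before the next event is read
    return running_total
```

In the stream version, the alert callback fires while the feed is still flowing, which is the behavior the paragraph above describes.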

In a technology context, you might think of performance as synonymous with speed. But it’s more than that. After all, you can’t compromise on other dimensions for the sake of speed. A fast system with a high risk of downtime is not doing anyone any favors. That’s why you must consider performance a combination of low latency, scalability, availability, reliability, and security. From a customer perspective, this means:

- Responsiveness – the ability of the system to take action quickly

- Dependability – the ability of a system to keep running and take corrective actions, and

- Privacy – the ability to allow only authorized users to access data

Hazelcast Enterprise Capabilities

All of the demands above tie into performance, and this is where the Hazelcast Platform – Enterprise Edition truly delivers:

- Business continuity for availability and reliability

- Security that reduces the effort of protecting your data

- High-density memory to handle scale

- Lower maintenance downtime for greater availability

- The Hazelcast Management Center

Business Continuity Capabilities

Our business continuity capabilities start with our WAN Replication feature. It copies your data – or a subset of it – in an efficient and incremental way to a separate remote cluster for the purposes of disaster recovery, or even for geographic distribution to enable lower latency.

Hazelcast Enterprise supports any replication topology. A common topology is one-way (active/passive) replication, which enables a traditional disaster recovery strategy. Alternatively, two-way (active/active) replication lets geographically dispersed user groups share the same data while also supporting disaster recovery needs. As part of this replication capability, the system sends only data that has changed. This minimizes the bandwidth used for replication while keeping a low recovery point objective (RPO), that is, the estimated amount of data you could lose should a site-wide disaster strike your primary cluster. Of course, you don’t want to lose any data, so you want the RPO to be as small as possible, but you also don’t want to spend a ton of money to create that guarantee. Achieving a low RPO is difficult to do on your own, so Hazelcast has provisions to reduce the risk of data loss as much as possible, cost-effectively.
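
The idea of sending only changed data can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not how WAN Replication is implemented: the `ChangeTrackingMap` class and `replicate` function are hypothetical names, the backup is an ordinary dictionary, and deletions are not handled.

```python
class ChangeTrackingMap:
    """Toy map that remembers which keys changed since the last replication."""

    def __init__(self):
        self.data = {}
        self._dirty = set()

    def put(self, key, value):
        # Only mark the key dirty when the stored value actually changes.
        if key not in self.data or self.data[key] != value:
            self.data[key] = value
            self._dirty.add(key)

    def drain_changes(self):
        """Return the changed entries and reset the change set."""
        changes = {key: self.data[key] for key in self._dirty}
        self._dirty.clear()
        return changes


def replicate(primary, backup):
    """Ship only the changed entries to the backup; return how many were sent."""
    changes = primary.drain_changes()
    backup.update(changes)
    return len(changes)
```

In this model, whatever is still sitting in the change set when the primary site fails is the data at risk, which is why a smaller, more frequently drained change set corresponds to a lower RPO.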

The other parameter of disaster recovery is the recovery time objective (RTO): the amount of time it takes to get users back up and running. Since replication gives you a complete copy of your primary cluster – perhaps minus the last set of updates – the problem of having to recover the data is solved.

The remaining issue is getting applications to connect to the backup cluster quickly and automatically, and that’s where our automatic disaster recovery failover mechanism fits in. Applications built on Hazelcast client libraries can recognize that the primary cluster is down and will automatically re-route connections to the specified backup cluster, or clusters, to ensure minimal downtime.
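
Conceptually, the failover logic is an ordered list of clusters that the client tries in turn. The sketch below shows that idea in plain Python; it does not use the Hazelcast client library, and `ClusterUnavailable`, `connect_with_failover`, and the `connect` callable are hypothetical names introduced for illustration.

```python
class ClusterUnavailable(Exception):
    """Raised by the (hypothetical) connect function when a cluster is down."""


def connect_with_failover(cluster_names, connect):
    """Try each cluster in priority order and return the first that answers.

    `connect` is any callable that takes a cluster name and either returns a
    connection object or raises ClusterUnavailable.
    """
    for name in cluster_names:
        try:
            return name, connect(name)
        except ClusterUnavailable:
            continue  # fall through to the next cluster in the list
    raise ClusterUnavailable("no cluster in the list is reachable")
```

Because the cluster list lives in the client configuration, the application code that uses the connection does not need to know which site it ended up on.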

Security Capabilities

Hazelcast provides a comprehensive list of security capabilities grouped by data privacy, authentication, and authorization.

In many cases, security impacts other system characteristics, such as performance. When security is applied, things tend to slow down due to the extra processing. This is the trade-off you normally accept as part of a security strategy, and it is one reason why IT professionals put off adding security controls until late in a project lifecycle. But with Hazelcast Enterprise, you get minimal performance disruption while gaining the necessary data protection from day one.

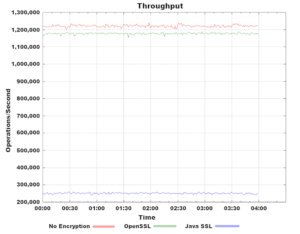

When building security into Hazelcast Enterprise, we ensured that our over-the-wire encryption had minimal impact on data transmission. The benchmark results shown here illustrate this: the red line is the operations per second of Hazelcast without any over-the-wire encryption, and the green line represents Hazelcast with OpenSSL for over-the-wire encryption. Throughput with OpenSSL is only slightly lower than the unencrypted line, roughly in the range of 1.2 million operations per second in this benchmark. Then there is the comparison against the Java SSL engine, which is the purple line. Using the Java SSL engine for encryption incurs a fairly significant degradation in performance, whereas using OpenSSL with Hazelcast does not incur nearly as much.

Minimal performance impact using Hazelcast Enterprise with OpenSSL (green line) versus no encryption (red line) and Java SSL (blue line).

For data privacy, Hazelcast Enterprise allows customers to plug in their own symmetric encryption. This means you can use symmetric encryption to encrypt your data between nodes and clients as an alternative to TLS. If there are situations where you need an extra level of security for transmitting data, this option is available to you.

Another important capability when authenticating a client with a cluster is the ability to support mutual authentication where each side has a certificate to verify who they are. Not only does a cluster know precisely who’s connecting, but the client can be assured of who they’re connecting with – reducing the risk of man-in-the-middle attacks.
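
Mutual authentication is a general TLS technique, so it can be illustrated with Python’s standard `ssl` module rather than with Hazelcast configuration. In this sketch the helper names and the optional certificate file parameters are assumptions for illustration; the key point is that both sides are set to require and verify the peer’s certificate.

```python
import ssl


def server_context(certfile=None, keyfile=None, ca_file=None):
    """TLS context for the server side that insists on a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.verify_mode = ssl.CERT_REQUIRED  # reject clients that present no valid cert
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # the server's own identity
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA that signed client certs
    return ctx


def client_context(certfile=None, keyfile=None, ca_file=None):
    """TLS context for the client side that verifies the server and
    presents its own certificate."""
    # PROTOCOL_TLS_CLIENT enables certificate and hostname checks by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # the client's own identity
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA that signed the server cert
    return ctx
```

With both contexts in place, a handshake succeeds only when each side can validate the other’s certificate, which is what removes the opening for a man-in-the-middle.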

In the realm of authentication, we also offer a capability known as Socket Interceptor to provide an additional level of protection. This API allows you to write custom authentication mechanisms to prevent rogue processes from joining the cluster. We also support role-based access controls over the various data types within your system, so you can protect the different types of sensitive data you might have. And, of course, this ties in with your existing security infrastructure like LDAP or Active Directory.
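
As a rough illustration of role-based access control over data structures, the following plain-Python sketch maps roles to the actions they may perform on named structures. The roles, structure names, and actions are made up for this example and do not reflect Hazelcast’s permission syntax; in practice the role assignments would come from a directory such as LDAP.

```python
# Hypothetical policy: role -> {(structure type, structure name) -> allowed actions}
ROLE_PERMISSIONS = {
    "analyst": {("map", "orders"): {"read"}},
    "order-service": {("map", "orders"): {"read", "put"}},
    "admin": {
        ("map", "orders"): {"read", "put", "remove"},
        ("map", "customers"): {"read", "put", "remove"},
    },
}


def is_allowed(roles, structure_type, name, action):
    """Return True if any of the caller's roles grants the action."""
    return any(
        action in ROLE_PERMISSIONS.get(role, {}).get((structure_type, name), set())
        for role in roles
    )
```

A caller with only the `analyst` role could read the `orders` map in this model but could not write to it or see the `customers` map at all.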

The Hazelcast Security Interceptor capability is similar to the Socket Interceptor capability, but this one sits on the authorization side to protect your data from rogue clients. By plugging in custom code, you can intercept every remote operation executed by the client.
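
The interception pattern itself is simple to sketch. The plain-Python example below is not the Security Interceptor API; `InterceptedMap`, `make_interceptor`, and the `writers` set are hypothetical. It only shows the shape of the idea: every operation passes through custom code that can log it or reject it before it touches the data.

```python
class InterceptedMap:
    """Toy key-value store that runs an interceptor before every operation."""

    def __init__(self, interceptor):
        self._data = {}
        self._interceptor = interceptor

    def put(self, principal, key, value):
        self._interceptor(principal, "put", key)  # may raise PermissionError
        self._data[key] = value

    def get(self, principal, key):
        self._interceptor(principal, "get", key)
        return self._data.get(key)


def make_interceptor(audit_log, writers):
    """Build an interceptor that audits every call and blocks unauthorized writes."""
    def interceptor(principal, operation, key):
        audit_log.append((principal, operation, key))
        if operation == "put" and principal not in writers:
            raise PermissionError(f"{principal} may not {operation} {key}")
    return interceptor
```

Because the check sits in front of every operation, a rejected call never reaches the stored data, and the audit log still records the attempt.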

High-Density Memory Capabilities

You might know that garbage collection in Java systems can severely impact the performance and operation of the system, and you can’t practically allocate more than a few gigabytes of memory from the heap. In contrast, Hazelcast Enterprise uses an off-heap memory manager built to handle much larger blocks of RAM. This allows you to efficiently store more data in your computer’s memory, up to 200 GB per Hazelcast node, while eliminating the many garbage collection pauses you would otherwise face when allocating that much memory on the Java heap.

The key advantage here is that you can simplify your deployment by relying on fewer Hazelcast nodes. Instead of running hundreds of Hazelcast nodes on as many hardware servers, you can instead deploy tens of nodes on a much smaller cluster while getting a predictable level of performance that is not impacted by garbage collection.
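
A quick back-of-the-envelope calculation shows where the "hundreds versus tens" comparison comes from. The 2,000 GB data set and the 4 GB of usable heap per node are assumptions picked for illustration; only the 200 GB per-node figure comes from the text above. The calculation also ignores backup copies and per-entry overhead.

```python
import math


def nodes_needed(data_gb, usable_gb_per_node):
    """Smallest number of nodes whose combined memory holds the data set."""
    return math.ceil(data_gb / usable_gb_per_node)


data_set_gb = 2000                              # hypothetical data set size
on_heap_nodes = nodes_needed(data_set_gb, 4)    # assumed 4 GB usable heap per node
off_heap_nodes = nodes_needed(data_set_gb, 200) # 200 GB per node, as cited above
```

Under these assumptions the on-heap deployment needs 500 nodes and the high-density deployment needs 10.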

An upcoming scalability feature you should look for is the new tiered storage capability, currently in beta. In its current form, it leverages a hybrid storage mechanism using both RAM and disk to scale more cost-effectively, while also addressing low-latency data access requirements. Future versions will integrate with other long-term storage media to enable further cost-effective scalability.

Lower Maintenance Downtime Capabilities

Every system needs some downtime for maintenance – or planned downtime – and some of these features will help you further reduce the time necessary for maintenance. One capability in this category is the Persistence feature. This capability writes your in-memory data to disk asynchronously. Should your servers shut down, the Persistence feature saves your data so you can quickly repopulate your cluster’s in-memory storage when the servers are restarted. This enables fast recovery should an entire cluster go down either because of a large-scale failure or, more likely, because of some planned maintenance. It’s one thing to have downtime because of the actual maintenance work, but having to wait for the system to get back to its original state prior to the maintenance work represents even more downtime.

Rather than repopulating the data into memory from the original systems of record, you can read that data directly from the Persistence store on disk. Data is reloaded in seconds too. For example, take a 400 GB data set. If restored from the original data sources, it could take minutes, or even hours, versus the 82 seconds it takes for the Persistence feature to restore the system.
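
The figures above imply an aggregate reload rate that is easy to work out. In the sketch below, the 400 GB and 82 seconds come from the example; the 0.5 GB/s rate for reloading from the original systems of record is purely a hypothetical comparison point.

```python
def restore_seconds(data_gb, throughput_gb_per_s):
    """Time to reload a data set at a given sustained throughput."""
    return data_gb / throughput_gb_per_s


# Aggregate rate implied by restoring 400 GB in 82 seconds (about 4.9 GB/s).
implied_throughput = 400 / 82

# Hypothetical: reloading the same data from source systems at 0.5 GB/s.
source_reload_seconds = restore_seconds(400, 0.5)  # 800 seconds, over 13 minutes
```

Even with a fairly generous assumed source rate, the reload from the systems of record takes roughly ten times longer than the restore from disk.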

Blue-green deployments offer another way to ensure uptime despite the downtime that cluster maintenance requires. For this strategy, you have your production cluster, called the blue cluster, and a standby cluster, called the green cluster. The green cluster is not a production cluster; it is purely a standby and otherwise an exact copy of the blue cluster. Because no clients interact with it, you can upgrade the green cluster with no risk to any users. When you are ready to migrate, you can use the Hazelcast Management Center to redirect some or all of the clients to the green cluster. Ultimately, the previously green cluster becomes the new blue production cluster, and the previous blue cluster becomes the green cluster. You are essentially swapping roles through the administrative interface without having to reconfigure each individual application.

At some point in the future, you can start applying upgrades to that new green cluster, and those upgrades don’t have to be restricted to Hazelcast software. You could upgrade the operating system, hardware, and any third-party software on the cluster. Once it is upgraded, you can direct clients to that now ready-to-go and tested environment. If any problems arise, the blue-green deployment ensures that you have a fallback in case any of the upgrades you made cause a problem.
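
The role swap can be modeled as a small routing table. This plain-Python sketch is not the Management Center or client API; the `BlueGreenRouter` class, its methods, and the cluster and client names used with it are hypothetical.

```python
class BlueGreenRouter:
    """Toy routing table that tracks which cluster each client talks to."""

    def __init__(self, blue, green, clients):
        self.blue = blue      # current production cluster
        self.green = green    # current standby cluster
        self.routes = {client: blue for client in clients}

    def redirect(self, clients, target):
        """Point some or all clients at the target cluster."""
        for client in clients:
            self.routes[client] = target

    def promote_green(self):
        """Swap roles once every client has moved to the green cluster."""
        stragglers = [c for c, cluster in self.routes.items() if cluster != self.green]
        if stragglers:
            raise RuntimeError(f"clients still on blue: {stragglers}")
        self.blue, self.green = self.green, self.blue
```

Redirecting a subset of clients first gives you a way to test the upgraded cluster with limited exposure, and redirecting them back to the old cluster is the fallback path.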

Job Upgrade is a feature specifically pertaining to our stream processing engine, which allows jobs to be upgraded with a new version without data loss. This is especially good for swapping out improved machine learning models in a production environment without incurring any downtime on your continuous feed of streaming data.

When you upgrade a job, the system takes a snapshot of the current state. The old job stops, and the new job starts with one command. Then the remaining data feed is sent to the new job for processing with a starting point at that snapshot, ensuring you don’t miss any of the data in that live stream.
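
The snapshot-and-resume sequence can be sketched in plain Python. This is a simplified model, not the stream processing engine’s API: `Snapshot`, `run`, and the two job functions are hypothetical, the stream is a list, and the job state is a dictionary.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    offset: int   # index of the next unprocessed event
    state: dict   # job state accumulated so far


def run(job_fn, events, snapshot, stop_at=None):
    """Process events from the snapshot's offset and return a new snapshot."""
    state = dict(snapshot.state)  # copy so the old snapshot stays intact
    end = len(events) if stop_at is None else stop_at
    for i in range(snapshot.offset, end):
        job_fn(state, events[i])
    return Snapshot(offset=end, state=state)


def job_v1(state, event):
    """Old job version: counts events."""
    state["count"] = state.get("count", 0) + 1


def job_v2(state, event):
    """New job version: counts events and also sums their values."""
    state["count"] = state.get("count", 0) + 1
    state["total"] = state.get("total", 0) + event


events = [5, 7, 11, 13]
snap = run(job_v1, events, Snapshot(offset=0, state={}), stop_at=2)  # old job stops
final = run(job_v2, events, snap)  # new job resumes from the snapshot
```

The new job picks up at the offset recorded in the snapshot, so all four events are counted exactly once, and only the events processed after the upgrade contribute to the new `total` field.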

The Hazelcast Management Center

The Management Center interface allows you to:

- Monitor and manage your cluster members running Hazelcast, including monitoring the overall state of clusters

- Analyze and browse data structures in detail, in real time

- Update map configurations, and

- Take thread dumps from nodes

The Management Center also supports integration with the monitoring tool Prometheus. If you currently use Prometheus as part of your enterprise-wide monitoring system, integrating with Hazelcast Enterprise delivers a consolidated interface for monitoring all your production systems.

Conclusion

The best way to learn more about the Hazelcast Enterprise features is to try them out yourself. You can get a 30-day free trial with no obligation from our Get Hazelcast page. See how you can simplify your Hazelcast deployment by leveraging the powerful features in Hazelcast Enterprise.