10 Reasons Why Developers Use Hazelcast: A Recap of the Flink Forward Conference

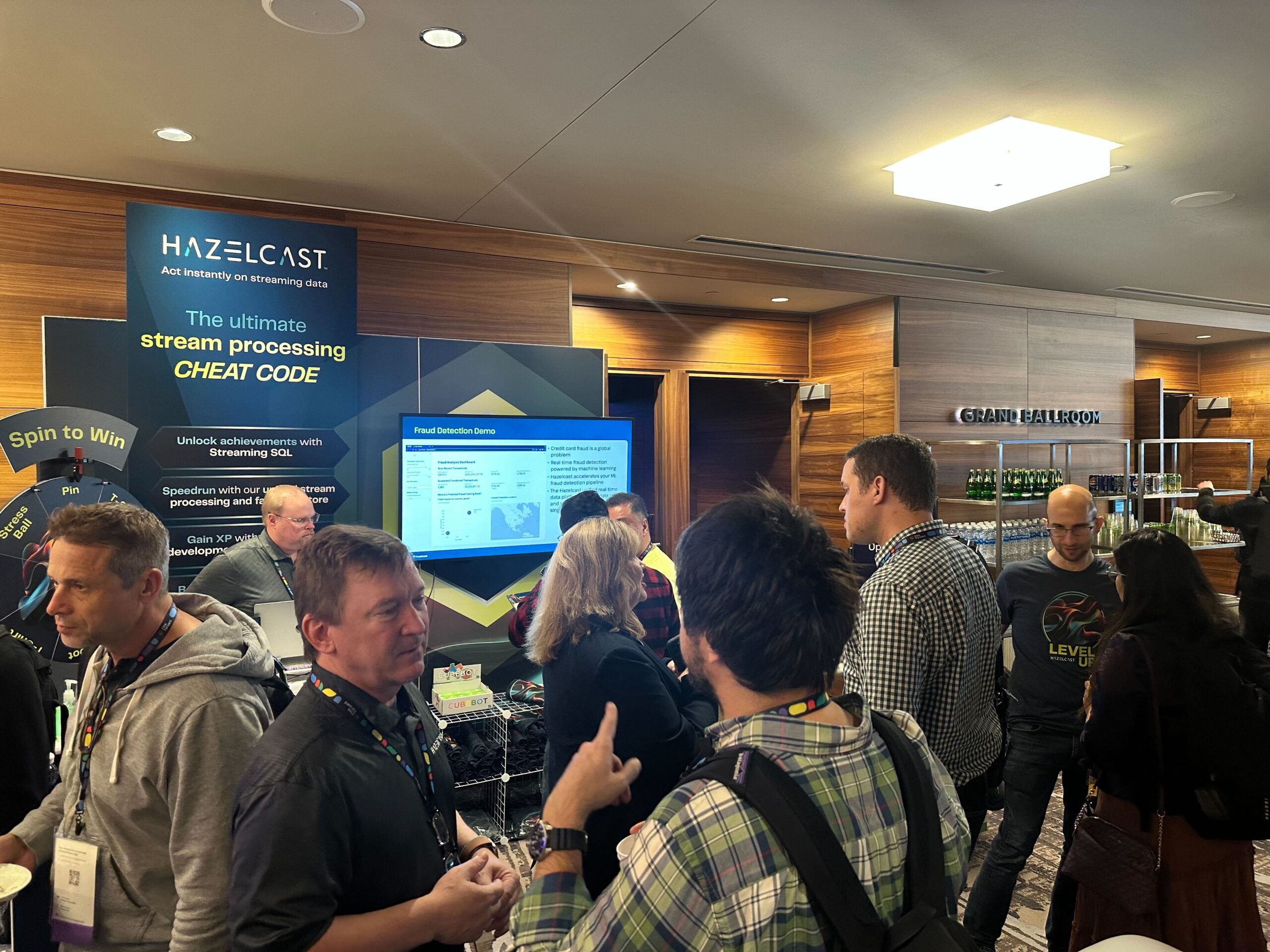

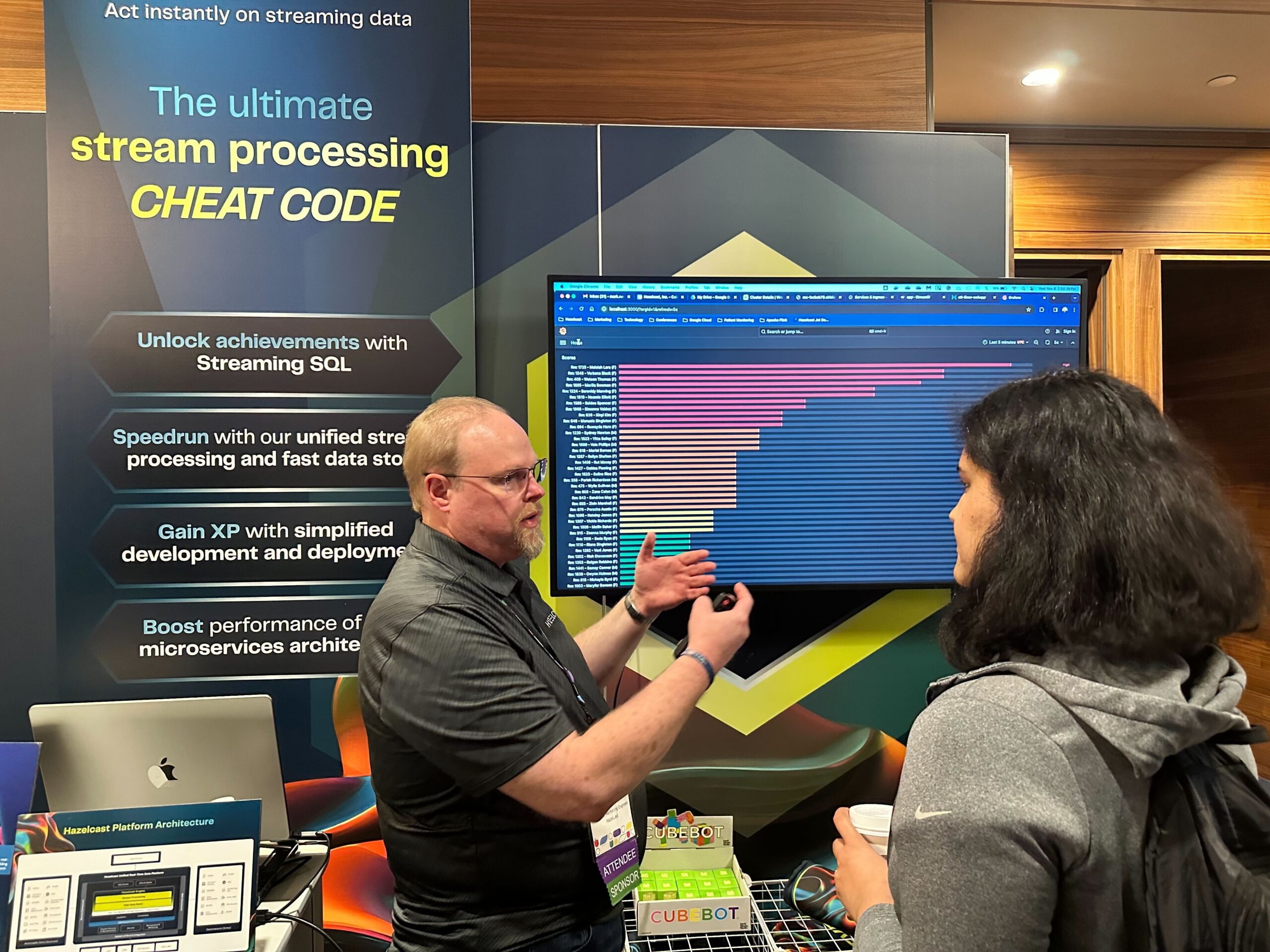

This week, Hazelcast participated in Flink Forward in Seattle, a conference dedicated to the stream processing community – from developers to solution architects and data scientists.

Hazelcast is a unified real-time data platform for acting instantly on streaming data, offering low-latency querying, aggregation, and stateful computation over event streams and traditional data sources. Before Flink Forward, Hazelcast presented at the Seattle Java User Group (JUG) on boosting similarity search by combining vector databases with real-time stream processing, an approach that offers a unique developer experience and an efficient way to process data at scale, in real time.

Here are the top 10 reasons why developers choose Hazelcast over other stream processing and data platform technologies:

No #1. Simplicity: Hazelcast is written in Java and relies solely on its own codebase, with no external dependencies. It provides a familiar API that mirrors the well-known java.util package and its interfaces.

- How? Start a local cluster with the Command-Line Client (CLC), Docker, the binary distribution, or as an embedded member in a Java application. Head over to the Run a Local Cluster guide to get started.

No #2. No Job Manager, no Master Node, and no ZooKeeper: Hazelcast follows a peer-to-peer architecture in which every member of the cluster is treated equally, stores a comparable share of the data, and performs comparable processing tasks.

- How? A Hazelcast cluster consists of a number of members, each hosting a Hazelcast instance, and the data storage and computational workloads are shared among them. Read about the Hazelcast Architecture to see how it works.
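To make the idea of equal members more concrete, here is a simplified, stdlib-only Java sketch of hash partitioning. It assumes Hazelcast's default of 271 partitions and spreads them round-robin over three hypothetical members; the class and method names are illustrative only. Hazelcast's real partitioning is more involved (for example, it hashes the serialized key and also places backup replicas on other members), so treat this as a picture of the concept rather than the actual implementation.

```java
import java.util.List;

/**
 * Conceptual sketch of hash partitioning across peer members.
 * Not Hazelcast's implementation; all names here are illustrative.
 */
public class PartitionSketch {

    // Hazelcast's default partition count (configurable in a real cluster).
    static final int PARTITION_COUNT = 271;

    // Simplified key-to-partition mapping based on hashCode().
    // Hazelcast hashes the serialized key instead; this only illustrates the idea.
    static int partitionId(Object key, int partitionCount) {
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    // Round-robin ownership: partition p is owned by member p % memberCount,
    // so every member ends up with a near-equal share of the partitions.
    static int[] assignOwners(int partitionCount, int memberCount) {
        int[] owners = new int[partitionCount];
        for (int p = 0; p < partitionCount; p++) {
            owners[p] = p % memberCount;
        }
        return owners;
    }

    // Counts how many partitions each member owns.
    static int[] partitionsPerMember(int[] owners, int memberCount) {
        int[] counts = new int[memberCount];
        for (int owner : owners) {
            counts[owner]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        int members = 3;
        int[] owners = assignOwners(PARTITION_COUNT, members);
        int[] counts = partitionsPerMember(owners, members);
        for (int m = 0; m < members; m++) {
            System.out.println("member-" + m + " owns " + counts[m] + " partitions");
        }
        // Each key maps to exactly one partition, and therefore to one owning member.
        for (String key : List.of("order-1", "order-2", "order-3")) {
            int pid = partitionId(key, PARTITION_COUNT);
            System.out.println(key + " -> partition " + pid + " on member-" + owners[pid]);
        }
    }
}
```

With 271 partitions and three members, the sketch gives a 91/90/90 split, so no single member carries a disproportionate share of the data.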

No #3. Less hardware: as a consequence of the architecture described above, Hazelcast needs less hardware for the same task, saving on development, maintenance, and deployment costs.

- How? Unlike stream processing technologies that require a dedicated Job Manager, Hazelcast has no such component, so only worker nodes are needed.

No #4. Multiple client support: You can connect to the Hazelcast cluster using Java, C++, .NET, Node.js, Go, Python, REST, CLC, and Memcache.

- How? Depending on the chosen programming language, check out the relevant Hazelcast client to get started.

No #5. Distributed Data Structures: Hazelcast offers a wide range of data structures, which differ in their partitioning strategy (partitioned vs. non-partitioned) and in where they sit under the CAP theorem (AP vs. CP).

- How? For each of the client languages, Hazelcast mimics the natural interface of the structure as closely as possible. For example, in Java, the map follows java.util.Map semantics. All of these structures are available in Java, .NET, C++, Node.js, Python, and Go. Check out all Hazelcast data structures to get going.
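Because the map follows `java.util.Map` semantics, code written against the standard interface does not need to know where the data lives. The stdlib-only sketch below (the `MapSemanticsSketch` class and `countWords` method are hypothetical names) runs the same method against a `HashMap` and a `ConcurrentHashMap`. Hazelcast's `IMap` extends `java.util.concurrent.ConcurrentMap`, so the same method signature would also accept an `IMap`; distributed concerns such as serialization and network round-trips are not shown here.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class MapSemanticsSketch {

    // Counts words using only the java.util.Map interface.
    // The method does not care which Map implementation it receives.
    static void countWords(Map<String, Integer> counts, String text) {
        for (String word : text.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
                // merge() inserts 1 for a new key or adds 1 to the existing count.
                counts.merge(word, 1, Integer::sum);
            }
        }
    }

    public static void main(String[] args) {
        Map<String, Integer> local = new HashMap<>();
        countWords(local, "to be or not to be");
        System.out.println("to=" + local.get("to") + ", distinct=" + local.size());

        // Any other Map implementation works the same way; with Hazelcast on the
        // classpath, an IMap obtained from a cluster could be passed instead (not shown).
        Map<String, Integer> concurrent = new ConcurrentHashMap<>();
        countWords(concurrent, "stream processing at scale");
        System.out.println("distinct=" + concurrent.size());
    }
}
```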

No #6. Data Pipelines: Hazelcast allows both stream processing and batch processing data pipelines in a unified platform for stateless and stateful computations over large amounts of data with consistently low latency.

- How? Using the Hazelcast stream processing engine, you can build a multistage cascade of groupBy data pipelines and process infinite, out-of-order data streams. The guides cover data ingestion, building pipelines, and submitting, securing, and managing jobs. Learn more about how to create a Hazelcast data pipeline.
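The core idea behind grouping an out-of-order stream into event-time windows can be sketched in plain Java. This is not Hazelcast's Pipeline API; the `Event` record, the `countPerWindow` method, and the one-second tumbling window are hypothetical choices for illustration. The sketch assigns each event to a window by its own timestamp rather than its arrival order, then counts events per key within each window. It processes a finite list and leaves out the watermarks a real streaming engine uses to decide when a window is complete.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class WindowSketch {

    // Hypothetical event type: a grouping key plus an event-time timestamp in milliseconds.
    record Event(String key, long timestampMs) {}

    // Groups events by (window start, key) and counts them. Windows are chosen
    // by event time, not arrival order, so late-arriving events still land in
    // the window they belong to.
    static Map<Long, Map<String, Integer>> countPerWindow(List<Event> events, long windowSizeMs) {
        Map<Long, Map<String, Integer>> windows = new TreeMap<>();
        for (Event e : events) {
            long windowStart = e.timestampMs() - Math.floorMod(e.timestampMs(), windowSizeMs);
            windows.computeIfAbsent(windowStart, w -> new TreeMap<>())
                   .merge(e.key(), 1, Integer::sum);
        }
        return windows;
    }

    public static void main(String[] args) {
        // Arrival order is not timestamp order: the event at 900 ms arrives last.
        List<Event> events = List.of(
            new Event("sensor-a", 100),
            new Event("sensor-b", 1_200),
            new Event("sensor-a", 1_500),
            new Event("sensor-a", 900));
        System.out.println(countPerWindow(events, 1_000));
    }
}
```

Here the late event at 900 ms is still counted in the window starting at 0 ms, alongside the event at 100 ms.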

No #7. SQL support: Hazelcast supports SQL statements and functions, and streaming SQL queries. You can use SQL to query data in maps, Kafka topics, or a variety of file systems. Results can be sent directly to the client or inserted into maps or Kafka topics.

- How? You can start with SQL over maps, SQL over Kafka, and stream processing in SQL. Check out Hazelcast SQL guides for more detail.

No #8. Rich connectors: You can integrate Hazelcast with various data systems, application frameworks, and cloud runtimes, ranging from Spring Boot, cloud discovery, and cloud PaaS to Docker, Helm Charts, pipeline plugins, and web session clustering.

- How? Hazelcast hub offers various connectors to connect your applications to Hazelcast. You can review all connectors here.

No #9. Learning curve: Hazelcast has a gentler learning curve than other stream processing technologies, and all the features mentioned above come with well-documented guides and tutorials.

- How? Start with the Hazelcast documentation and then move to the Hazelcast training (to learn more and earn your stream processing badge). I highly recommend trying the Hazelcast tutorials.

No #10. Vibrant community: The Hazelcast community is very active, both online and in person. The Hazelcast community Slack and Twitch channels are a great place for stream processing developers and solution architects to ask questions and interact with other members, and the community also meets at in-person events.

- How? You can join the Hazelcast community Slack and follow Hazelcast on Twitch to get notified when we go live on a variety of topics and tutorials. We also recommend signing up for our community newsletter and attending our in-person events and meetups here.

Bonus reasons:

- Performance: Learn how Hazelcast outperformed Apache Flink and Spark: The ESPBench paper from the Hasso Plattner Institute compares the performance of popular stream processors. It states, “Overall, the latency results are diverse with Hazelcast often performing best with respect to the 90%tile and mean values”. Read the paper.

- Efficiency and scale: In a cluster of 45 nodes and 720 vCPUs, Hazelcast reached 1 billion events per second with a 99th-percentile latency of 26 milliseconds. Read more here.

- Hazelcast Heroes: Hazelcast Heroes play a crucial role within the Hazelcast Developer Community, enriching our ecosystem with their meaningful input. Their contributions can encompass a wide range of activities, such as introducing new features, addressing bugs, enhancing documentation, crafting informative blog posts, hosting live streams, delivering user group presentations, or extending help to fellow members in the Hazelcast Developer Community. Read more about it here.

- And many more: the reasons above are only meant to get you started with Hazelcast's platform, which has many more features and benefits.
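For a rough sense of scale, the efficiency result above (1 billion events per second on 45 nodes with 720 vCPUs) can be broken down per node and per vCPU. This back-of-the-envelope estimate assumes the load was spread evenly across the cluster, which is an assumption made only for this calculation:

$$
\begin{aligned}
\frac{720\ \text{vCPUs}}{45\ \text{nodes}} &= 16\ \text{vCPUs per node} \\
\frac{10^{9}\ \text{events/s}}{45\ \text{nodes}} &\approx 2.22 \times 10^{7}\ \text{events/s per node} \\
\frac{10^{9}\ \text{events/s}}{720\ \text{vCPUs}} &\approx 1.39 \times 10^{6}\ \text{events/s per vCPU}
\end{aligned}
$$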

A big thank you to everyone who attended the Seattle JUG and Flink Forward events. It was a pleasure meeting and speaking with all of you, and I look forward to hearing about your experiences with stream processing.