Redis vs. Hazelcast – RadarGun Puts Them To A Challenge

Since its initial release in 2009, Redis has gained immense popularity and become one of the most widely deployed data storage platforms, backed by a large community.

Despite its impressive set of features, Redis had one severe limitation: it was designed from the ground up to be used in standalone mode. Users who needed to scale beyond the capacity of a single machine had to come up with ad-hoc sharding solutions. Version 3.0.0 changed this by delivering a production-ready clustering system that radically simplified distributed Redis deployments.

Everybody agrees that Redis is fast, so in this post we look at how Hazelcast compares to it. The goal is to observe how the performance of clustered Redis (v. 3.0.7) matches up to that of Hazelcast (v. 3.6.1), especially under heavy load.

To make sure we have stable conditions when comparing Hazelcast and Redis, we chose to make use of our in-house test lab environment, normally utilized for testing performance improvements of Hazelcast.

The Lab Setup

The tests were performed on a cluster of HP ProLiant DL380 servers. Each server was equipped with two Xeon E5-2687W v3 CPUs @ 3.10GHz (10 cores per socket with HyperThreading enabled, for a total of 20 physical and 40 virtual cores) and 768GB of 2133 MHz RAM (24x32GB modules). On the operating system side, we used a plain RHEL 7 installation on bare metal, so no virtualization software was involved in the measurements. Each machine used a Solarflare SFN7142Q dual-port 40GbE network adapter for node-to-node communication.

To execute the Redis tests, we picked Jedis as the Redis client because of its popularity (3,481 stars on GitHub) and its solid support for clustered mode.

To exclude bias, we decided to employ a third-party measurement tool called RadarGun, created by the Infinispan developer community. Since RadarGun does not provide Redis support out of the box, we had to write our own plugin. The RadarGun fork and the Redis plugin implementation are available on GitHub for anyone curious.

What We Tested

For the test scenario, we wanted to see how Hazelcast and Redis compare as the number of clients and the number of concurrent threads accessing the data grow. All tests ran against a 4-node cluster of the system under test, and we executed 4 basic test setups:

| Scenario | Number of Clients | Number of Threads per Client |
|---|---|---|
| 1 | 1 | 1 |
| 2 | 4 | 8 |
| 3 | 4 | 32 |
| 4 | 4 | 64 |
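To make the scenario table concrete, here is a minimal sketch of what a load of this shape looks like. It is not RadarGun code and not the benchmark itself: it assumes, purely for illustration, that clients and threads can be collapsed into one thread pool inside a single JVM, and it uses a local `ConcurrentHashMap` as a stand-in for the remote cluster. The class name `GetLoadSketch`, the key layout, and the 10,000 gets per thread are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

final class GetLoadSketch {
    /** Total requester threads in a scenario: clients x threads per client. */
    static int totalThreads(int clients, int threadsPerClient) {
        return clients * threadsPerClient;
    }

    /**
     * Starts `threads` workers, each issuing `getsPerThread` get calls against
     * the store, and returns how many of those gets found a value.
     */
    static long runGets(Map<String, String> store, int threads, int getsPerThread) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        AtomicLong hits = new AtomicLong();
        for (int t = 0; t < threads; t++) {
            final int offset = t;
            pool.execute(() -> {
                try {
                    start.await(); // hold every worker until all are queued
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                long local = 0;
                for (int i = 0; i < getsPerThread; i++) {
                    // Keys cycle through key-0 .. key-999 (hypothetical layout).
                    if (store.get("key-" + ((offset + i) % 1000)) != null) {
                        local++;
                    }
                }
                hits.addAndGet(local);
            });
        }
        start.countDown();
        pool.shutdown();
        try {
            if (!pool.awaitTermination(1, TimeUnit.MINUTES)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return hits.get();
    }

    public static void main(String[] args) {
        Map<String, String> store = new ConcurrentHashMap<>();
        for (int i = 0; i < 1000; i++) {
            store.put("key-" + i, "value-" + i);
        }
        // {clients, threads per client} for scenarios 1 to 4 from the table.
        int[][] scenarios = { {1, 1}, {4, 8}, {4, 32}, {4, 64} };
        for (int[] s : scenarios) {
            int threads = totalThreads(s[0], s[1]);
            long begin = System.nanoTime();
            long gets = runGets(store, threads, 10_000);
            double seconds = (System.nanoTime() - begin) / 1e9;
            System.out.printf("%d threads: %.0f gets/sec%n", threads, gets / seconds);
        }
    }
}
```

The four scenarios correspond to 1, 32, 128, and 256 concurrent requester threads. The timing in this sketch includes thread start-up and has no warm-up phase, so its numbers say nothing about either product; it only illustrates the shape of the load.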

As mentioned above, we ran the tests using the following versions:

- Hazelcast Version 3.6.1

- Redis Version 3.0.7, Jedis 2.8.0

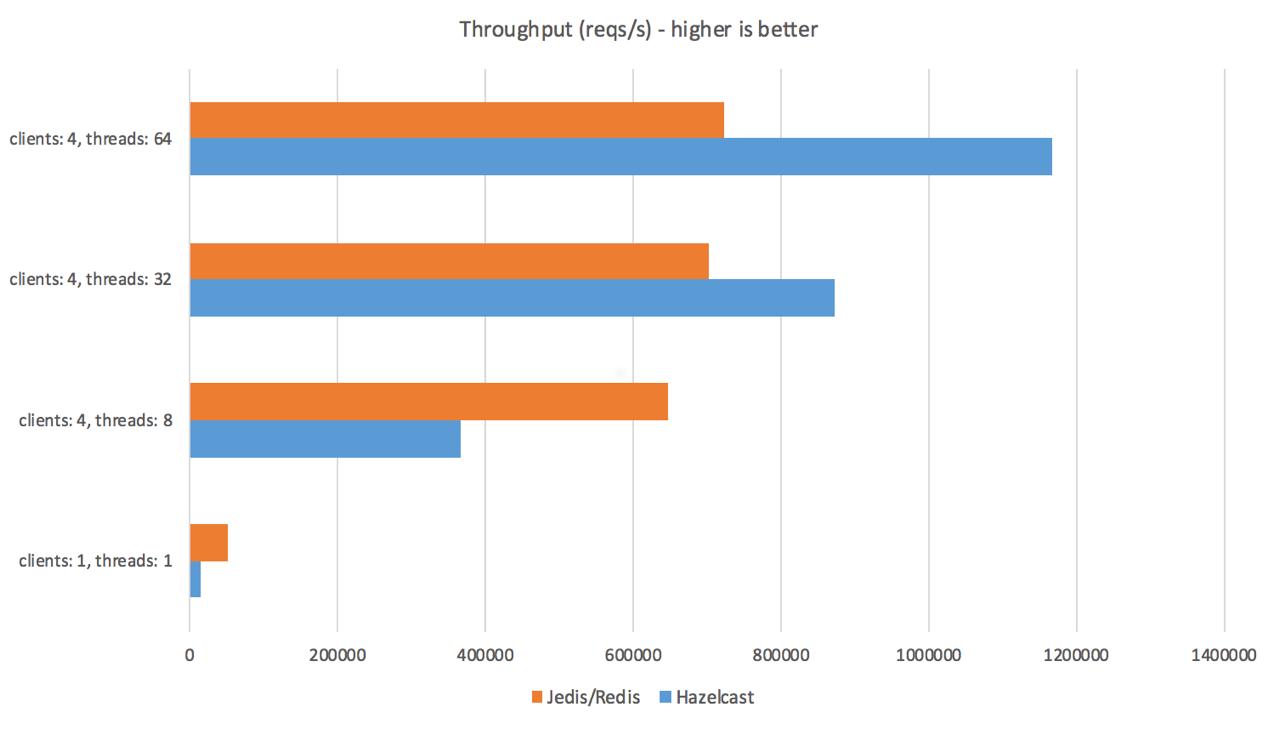

Throughput

Looking at the throughput results, Redis is extremely fast with a small number of clients and threads, but its throughput levels off under highly concurrent load: between scenario 2 and scenario 4 the number of threads per client grows eightfold, while Redis throughput rises only from 645,976 to 722,531 gets per second. Hazelcast, on the other hand, is less impressive under very small loads but keeps scaling as the number of clients and threads grows, and it overtakes Redis from scenario 3 onward.

| Scenario | Hazelcast result (get reqs/sec) | Redis result (get reqs/sec) |
|---|---|---|
| 1 | 13,954 | 50,634 |
| 2 | 365,792 | 645,976 |
| 3 | 872,773 | 702,671 |
| 4 | 1,166,204 | 722,531 |
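The crossover is easier to see as a ratio. The following small sketch divides the Hazelcast figure by the Redis figure for each scenario, using only the numbers from the table above; the class and method names are made up for this example.

```java
final class ThroughputComparison {
    // get requests per second for scenarios 1 to 4, copied from the table.
    static final long[] HAZELCAST = {13_954, 365_792, 872_773, 1_166_204};
    static final long[] REDIS = {50_634, 645_976, 702_671, 722_531};

    /** Hazelcast throughput relative to Redis; values above 1.0 favor Hazelcast. */
    static double ratio(long hazelcast, long redis) {
        return (double) hazelcast / redis;
    }

    public static void main(String[] args) {
        for (int i = 0; i < HAZELCAST.length; i++) {
            System.out.printf("Scenario %d: Hazelcast/Redis = %.2f%n",
                    i + 1, ratio(HAZELCAST[i], REDIS[i]));
        }
    }
}
```

The ratios come out at roughly 0.28, 0.57, 1.24, and 1.61: Redis delivers more than three times the throughput of Hazelcast in scenario 1, while Hazelcast delivers about 1.6 times the throughput of Redis in scenario 4.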

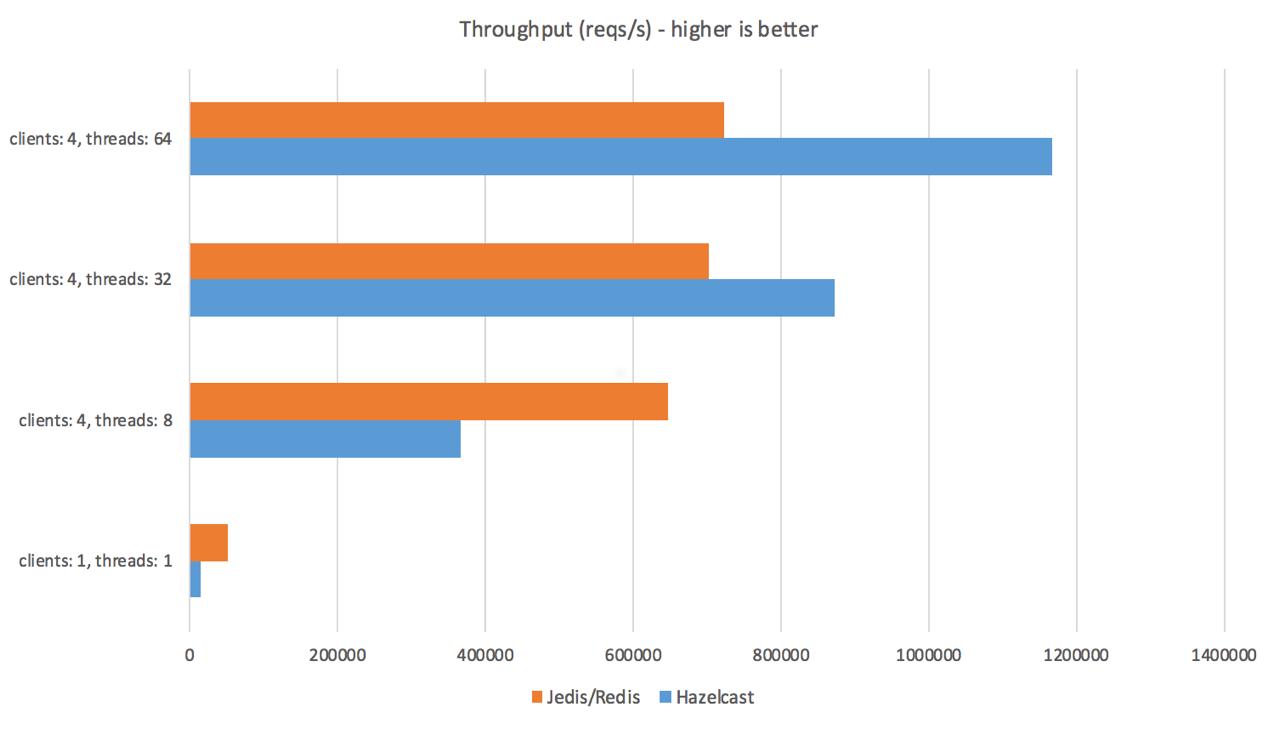

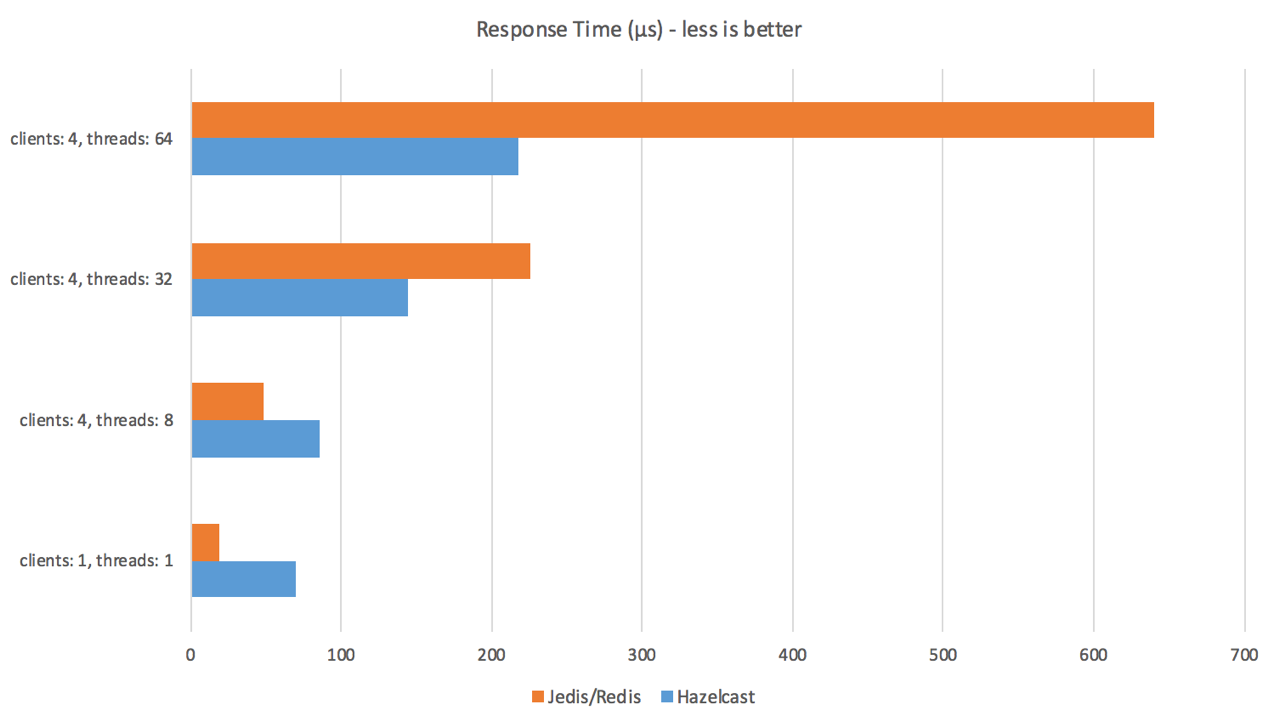

Latency

The latency results follow the same pattern as the throughput measurements. Under low load, Redis has clearly better response times than Hazelcast, but this reverses as the load and the number of concurrent requestors increase. Under load that is not uncommon in big environments (scenario 4), the average Redis response time rises dramatically. Hazelcast response times also grow with the number of threads, but the increase is more gradual than the steep rise shown by the Redis graph.

| Scenario | Hazelcast response time (μs) | Redis response time (μs) |
|---|---|---|
| 1 | 70.14 | 19.37 |
| 2 | 85.83 | 48.98 |
| 3 | 144.7 | 225.22 |
| 4 | 217.52 | 640.51 |
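To put a number on how differently the two response times grow, this short sketch computes the growth factor from scenario 1 to scenario 4. It assumes that the latency figures in the table are decimal microsecond values (so scenario 1 is about 70 μs for Hazelcast and about 19 μs for Redis); the class and method names are made up for this example.

```java
final class LatencyGrowth {
    // Average response times in microseconds for scenarios 1 to 4,
    // read from the table as decimal values (an assumption, see above).
    static final double[] HAZELCAST_US = {70.14, 85.83, 144.7, 217.52};
    static final double[] REDIS_US = {19.37, 48.98, 225.22, 640.51};

    /** Factor by which latency grows from the first to the last scenario. */
    static double growth(double[] latencies) {
        return latencies[latencies.length - 1] / latencies[0];
    }

    public static void main(String[] args) {
        System.out.printf("Hazelcast: %.1fx%n", growth(HAZELCAST_US));
        System.out.printf("Redis: %.1fx%n", growth(REDIS_US));
    }
}
```

Under that reading, the Hazelcast response time grows by a factor of about 3.1 between scenario 1 and scenario 4, while the Redis response time grows by a factor of about 33.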

Conclusion

Even allowing for the variability that testing methodology and setup tweaks introduce into any benchmark, it seems fair to say that there is a common pattern here. Redis appears to be a great choice for low numbers of clients and low resource contention, but this advantage disappears as the load on the cluster increases.

This result seems unexpected given the high number of Redis users out there. Do their systems really see such low load, or would they do better looking for an alternative? We recommend that developers and architects always test their own use cases as part of their due diligence before committing to any technology.

Be sure to download the full benchmark in PDF form, and check out the new Redis for Hazelcast Users comparison whitepaper.