Hazelcast on GCP (google cloud platform) part 2

Piotr Szybicki recently published this blog post with the title “Hazelcast on GCP (google cloud platform) part 2”. In this post, Piotr discusses the process of deploying and securing a Hazelcast IMDG cluster in GCP (Google Cloud Platform).

This is part of a multi-part series. Find part 1 here: Hazelcast on GCP (google cloud platform) part 1

Part two covers how to put Hazelcast on GKE (Google Kubernetes Engine). First we need to do some setup. Setting the project name and compute zone up front saves space in this article, as otherwise I would have to add them to every command I run.

gcloud components install kubectl

set name_of_your_project=xxx

gcloud config set compute/zone europe-west4-c

gcloud components install docker-credential-gcr

gcloud config set project %name_of_your_project%

If you haven’t already, install Docker on your machine. If you are running on Windows, some links are provided below.

docker toolbox: https://docs.docker.com/toolbox/toolbox_install_windows/

docker for windows: https://docs.docker.com/docker-for-windows/install/

Put hazelcast.xml and the Dockerfile (you can find them at the bottom of this page) in the same folder and run the build command. That will create a Docker image with the modified hazelcast.xml.

docker build -t eu.gcr.io/%name_of_your_project%/hazelcast:v1 .

docker images

To run locally (you don’t have to do this, as it will only launch a single instance):

docker run --rm -p 5701:5701 eu.gcr.io/%name_of_your_project%/hazelcast:v1

Push the image to the remote repository (Google Container Registry, eu.gcr.io) using the push command, and verify.

docker push eu.gcr.io/%name_of_your_project%/hazelcast:v1

gcloud container images list --repository=eu.gcr.io/%name_of_your_project%

We need to update our subnet to assign IP ranges that are deterministic (this step is not strictly necessary, but I want to make a point with it). The command adds two secondary CIDR ranges, one for pod addresses and one for service addresses. This step builds on the setup created in the previous part of this article. Notice that this time I had to specify the region; it should be the region that contains the zone we set at the beginning (europe-west4 for europe-west4-c). Note the separate address ranges for pods and services; I refer to them in the section below titled “Do not use DNS”.

gcloud compute networks subnets update hazelcast-subnet --add-secondary-ranges my-hazelcast-pods=172.16.0.0/12,my-hazelcast-services=192.168.4.0/24 --region europe-west4
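To get a feel for how much address space these two secondary ranges provide, here is a small Python sketch that uses only the standard `ipaddress` module. The range names and CIDR blocks come from the command above; the helper name `describe_range` is my own, and the sketch says nothing about how GKE splits the pod range between nodes.

```python
import ipaddress

# Secondary ranges taken from the gcloud command above.
SECONDARY_RANGES = {
    "my-hazelcast-pods": "172.16.0.0/12",
    "my-hazelcast-services": "192.168.4.0/24",
}


def describe_range(cidr):
    """Return (first address, last address, address count) for a CIDR block."""
    network = ipaddress.ip_network(cidr)
    return str(network[0]), str(network[-1]), network.num_addresses


if __name__ == "__main__":
    for name, cidr in SECONDARY_RANGES.items():
        first, last, count = describe_range(cidr)
        print(f"{name}: {first} to {last} ({count} addresses)")
```

The /12 block for pods is far larger than the /24 block for services, which is reasonable for a cluster that runs many pods behind a handful of services.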

Let’s create the cluster and add a custom role binding to avoid errors when Hazelcast tries to hit the REST endpoint for the addresses of the other pods to form a cluster. The solution is the one suggested in this post (not really elegant, but I will write another article about this topic, and to keep things to a minimum I settled for defaults where I could):

GCE – K8s 1.8 – pods is forbidden – Cannot list pods

gcloud container clusters create hazelcast-cluster --num-nodes=3 --network=flex-app-asynch --subnetwork=hazelcast-subnet --machine-type=f1-micro --enable-cloud-logging --enable-ip-alias --cluster-secondary-range-name=my-hazelcast-pods --services-secondary-range-name=my-hazelcast-services --enable-basic-auth --issue-client-certificate --enable-autorepair --enable-autoscaling --min-nodes=3 --max-nodes=9 --tags=hazelcast-cluster

kubectl apply -f ClusterRoleBinding.yaml

I chose the cheapest machine type available, f1-micro, but if you want to go for something more expensive, you can list the options using:

gcloud compute machine-types list --filter="zone:europe-west4-c"

To run the cluster, type the following command. We set the number of replicas to 0 because without a working service the pod will not start successfully.

kubectl run hazelcast-cluster --image=eu.gcr.io/%name_of_your_project%/hazelcast:v1 --port=5701 --replicas 0

Let’s create that service. Kubernetes has 4 ways of exposing the pods’ IP addresses to each other or to the outside world; you can read more about this in this article:

Using a Service to Expose Your App

GKE has one caveat: if I were to use the standard kubectl expose command with the LoadBalancer type, it would assign me an external IP address, and I do not want that. Your cache should be visible only to your application. So if you open the file (Service.yaml) you can see load-balancer-type: “internal”. It exposes the cluster only to the VPC. Of course you can still run the kubectl expose command and then block that port on the firewall, but having a public IP that serves only internal traffic is not only in bad taste but also expensive. More on internal load balancing:

Internal Load Balancing | Kubernetes Engine | Google Cloud

kubectl apply -f Service.yaml

kubectl scale deployment hazelcast-cluster --replicas 2

kubectl get pods --output=wide

kubectl logs hazelcast-cluster-rest_of_the_id_of_the_pod

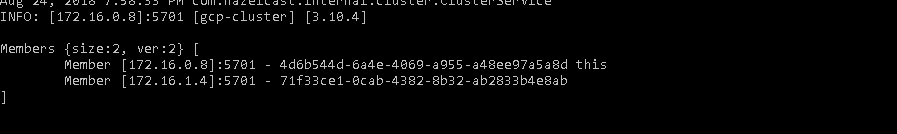

Hopefully after you set the replicas to 2 you will see this in the logs.

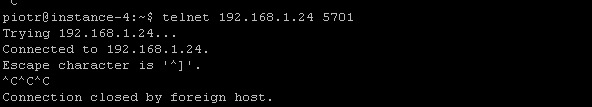

And I can access the port from a different machine on the same VPC. Of course, that port has to be opened in the firewall rules.
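If you want to script that reachability check from another machine in the VPC, a minimal Python sketch could look like the one below. The function name `is_port_open` is my own; 5701 is the port used throughout this article, and the address in the usage comment is a hypothetical placeholder for whatever internal address your service was given. A successful TCP connection only shows that the port is reachable, not that the Hazelcast cluster behind it is healthy.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Usage (hypothetical internal address, replace it with your own):
# print(is_port_open("10.0.0.10", 5701))
```

If this returns False from a machine on the same VPC, the firewall rule for the port is the first thing I would check.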

Of course, clean up after yourself so as not to incur charges. Deleting the cluster will also get rid of the deployment and the service.

gcloud container clusters delete hazelcast-cluster

Do not use DNS

Configuring Hazelcast via XML is pretty easy. All you really have to specify is the service name and the namespace (as I want to minimize cognitive overload, I kept as many settings as I could at their defaults). Hazelcast uses this information to query the REST endpoint of the service for the addresses of the other pods that will form the cluster. This is why I created the initial deployment with zero replicas. There is another possibility that I was not able to get to work, and that is to use the service DNS. It simply always returned the addresses of the services. In my case Hazelcast was binding to addresses covered by the range 172.16.0.0/12, so the pod addresses, while the DNS service was returning addresses from the range 192.168.4.0/24. I searched some forums but was unable to find a solution that would work and allow me to run multiple pods per single VM instance.
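A quick way to tell which kind of address you are looking at when debugging this mismatch is to test it against the two ranges. The Python sketch below does that with the standard `ipaddress` module; the ranges are the ones from this article, while the function name `classify_address` and the sample addresses in the usage comment are hypothetical.

```python
import ipaddress

# Ranges used in this article for pods and services.
POD_RANGE = ipaddress.ip_network("172.16.0.0/12")
SERVICE_RANGE = ipaddress.ip_network("192.168.4.0/24")


def classify_address(address):
    """Return 'pod', 'service' or 'other' depending on the range the address falls in."""
    ip = ipaddress.ip_address(address)
    if ip in POD_RANGE:
        return "pod"
    if ip in SERVICE_RANGE:
        return "service"
    return "other"


# Usage with made-up addresses:
# classify_address("172.16.3.7")    # an address Hazelcast might bind to
# classify_address("192.168.4.15")  # an address the service DNS might return
```

An address that Hazelcast binds to should classify as a pod address, whereas anything returned by the service DNS lands in the service range, which is exactly the mismatch described above.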

At the end.

There is one subject I have not touched, and I will in part 3: how to use Kubernetes metrics to scale our cluster. But I have to research that subject a little further so I can talk about it intelligently.