Hazelcast Financial Use Cases

Background

The properties of an In-memory Data Grid (IMDG) such as Hazelcast lend themselves well to solving many of the system challenges facing the financial sector today. The service levels required vary depending on whether an application sits in the back, middle or front office. Hazelcast provides high system availability, easy scalability and, when required, millions of operations per second at predictable, microsecond-level latency. Finally, Hazelcast’s easy-to-understand programming model significantly shortens development iteration times.

The aim of this paper is to give system engineers and architects in the financial industry an idea of the types of use cases Hazelcast is solving in production today. We’ll look at three of these applications and see how they integrate into existing workflows and systems. In general terms, Hazelcast can be applied to both OLAP and OLTP use cases. OLAP use cases can use Hazelcast’s MapReduce, Aggregation and Distributed Compute feature sets. OLTP use cases can make use of Hazelcast’s ability to reliably ingest millions of transactions per second and to react to these transactions with distributed eventing and computation.
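
As a rough illustration of the OLAP side, the Python sketch below shows the idea behind distributed aggregation. Each partition computes a small partial result where its data lives, and only those partial results are combined. It runs in a single process using only the standard library. The trade records, the partition count and the `partition_of` hash are hypothetical stand-ins, not Hazelcast’s API.

```python
from collections import defaultdict

# Hypothetical trade records: (trade_id, desk, notional). Illustrative values only.
TRADES = [
    ("T1", "FX", 1_000_000.0),
    ("T2", "Rates", 250_000.0),
    ("T3", "FX", 500_000.0),
    ("T4", "Credit", 750_000.0),
    ("T5", "Rates", 100_000.0),
]

PARTITION_COUNT = 4  # assumption: a tiny partition count keeps the sketch readable


def partition_of(key: str) -> int:
    # Deterministic stand-in for the grid's key hashing.
    return sum(key.encode()) % PARTITION_COUNT


def split_into_partitions(trades):
    """Group records by partition, as a data grid would spread them across members."""
    parts = defaultdict(list)
    for trade in trades:
        parts[partition_of(trade[0])].append(trade)
    return parts


def accumulate(local_trades):
    """Runs next to the data: partial notional per desk for one partition."""
    partial = defaultdict(float)
    for _, desk, notional in local_trades:
        partial[desk] += notional
    return partial


def combine(partials):
    """Merge the small partial results instead of moving every record."""
    total = defaultdict(float)
    for partial in partials:
        for desk, amount in partial.items():
            total[desk] += amount
    return dict(total)


parts = split_into_partitions(TRADES)
notional_by_desk = combine(accumulate(p) for p in parts.values())
print(notional_by_desk)
```

This style of query scales with the number of members because only a handful of per-desk totals cross the network, not every trade record.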

Case Study 1: Market Data Management System

Traditionally, market data has been duplicated and passed between many systems in investment banks. Maintaining these complex feeds of “commodity” market data between systems is costly and can introduce project management burdens that stifle innovation. It is not unusual to find multiple development teams building feeds for the same market data into their own systems. Centralized, internally managed market data services have long been a goal of many investment banking organizations.

The value propositions for investing in such systems are as follows:

- Provide front-, middle- and back-office systems with a centralized API to a golden source of market data.

- Control the Data Domain Model and cut down on duplication of market data across multiple systems.

- Control access to market data across the bank and have a centralized point of authorization and audit.

- A single golden source of market data allows for more predictable and accurate derived calculations.

All four propositions drive cost savings for the bank by reducing duplicated effort and controlling how market data is disseminated. The third point is particularly salient for organizations seeking to reduce their market data costs, especially when dealing with exchange-derived data. Exchanges can be extremely diligent in pursuing consumers who are unable to accurately audit and account for their data use. Where usage cannot be quantified, exchanges are known to make punitive demands that most market data contracts allow for, especially contracts with providers such as Bloomberg and Reuters.

Many banks today still have a multitude of systems that individually consume data via Reuters and Bloomberg APIs. These systems can then pass data on to other systems, at which point it becomes very hard to determine who the consumers are. It is therefore essential that any market data system integrates with a market data authorization and audit API such as Reuters OpenDACS. With Hazelcast, data access can be integrated with such systems.
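
One way to picture this integration is an access layer that checks entitlements and writes an audit record on every read. The sketch below is a minimal, standard-library illustration under that assumption. `AuditedMarketData`, its fields and the in-memory entitlement table are hypothetical, and the sketch does not model the Reuters OpenDACS API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedMarketData:
    """Hypothetical access layer: every read is entitlement-checked and audited."""

    prices: dict        # instrument -> price, standing in for a distributed map
    entitlements: dict  # user -> set of instruments, standing in for an entitlement service
    audit_log: list = field(default_factory=list)

    def get(self, user: str, instrument: str) -> float:
        allowed = instrument in self.entitlements.get(user, set())
        # Record every attempt, granted or denied, so usage can be reported per consumer.
        self.audit_log.append((datetime.now(timezone.utc), user, instrument, allowed))
        if not allowed:
            raise PermissionError(f"{user} is not entitled to {instrument}")
        return self.prices[instrument]


gateway = AuditedMarketData(
    prices={"EUR/USD": 1.0850, "GBP/USD": 1.2700},  # illustrative values
    entitlements={"alice": {"EUR/USD"}},
)
print(gateway.get("alice", "EUR/USD"))
try:
    gateway.get("bob", "GBP/USD")
except PermissionError as exc:
    print(exc)
```

The audit log records denied requests as well as granted ones. That is what lets usage be accounted for per consumer rather than per feed.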

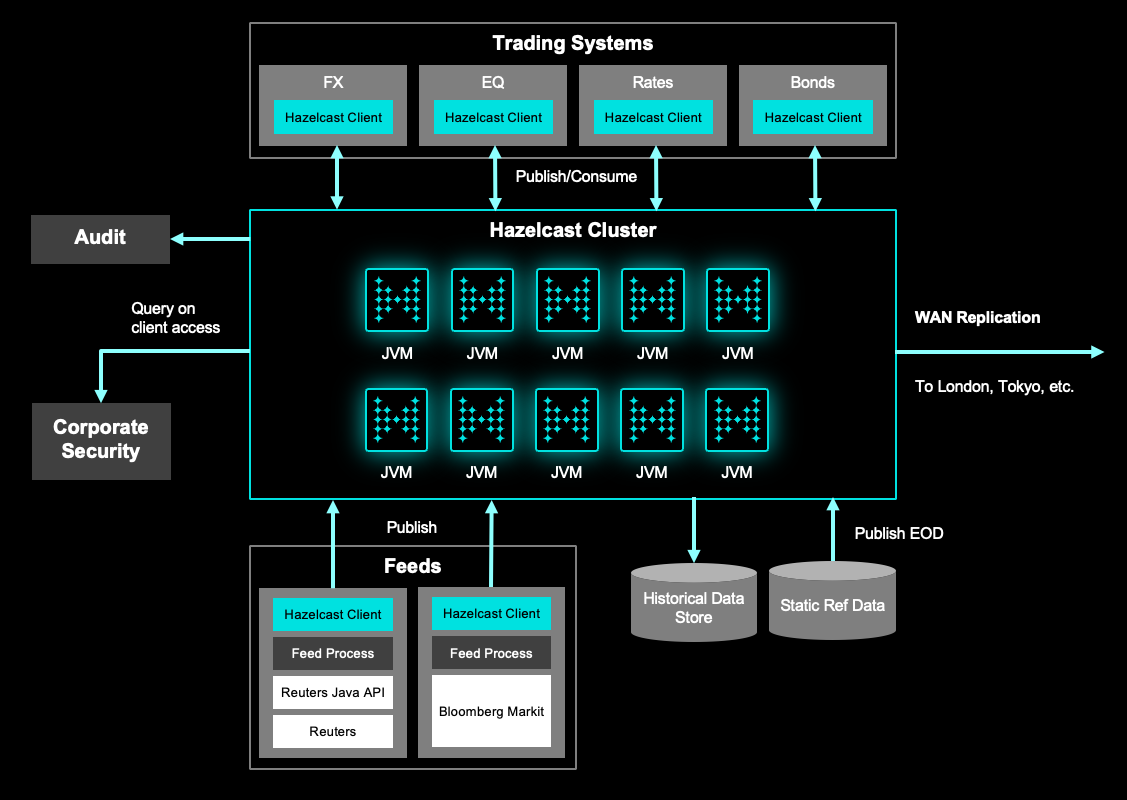

The diagram above describes a Market Data Management System with Hazelcast as its core component. In such a system, high availability and predictable performance are paramount.

In the diagram, we can see a Hazelcast Cluster of many JVMs. The cluster provides a highly available, on-demand scalable data store and compute grid. Hazelcast’s automatic backup replicas ensure that cluster members can terminate without loss of data. The cluster can consume millions of transactions per second while providing subscribers with predictable, microsecond-level query latencies.
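
The data-loss guarantee comes from partitioning with backups. Every key hashes to a partition, each partition has a primary owner, and a backup copy lives on a different member. The sketch below models that bookkeeping for a single member failure. It assumes Hazelcast’s defaults of 271 partitions and one backup. The CRC32 hash, the round-robin assignment and all function names are simplifications for illustration, not Hazelcast’s actual partitioning algorithm.

```python
import zlib

PARTITION_COUNT = 271  # Hazelcast's default partition count
# Assumption: one backup per partition (Hazelcast's default backup count is 1).


def partition_id(key: str) -> int:
    # Stand-in hash: CRC32 is used here only because it is deterministic.
    return zlib.crc32(key.encode()) % PARTITION_COUNT


def assign(members):
    """Give every partition a primary owner and a backup on a different member (needs 2+ members)."""
    return {
        pid: (members[pid % len(members)], members[(pid + 1) % len(members)])
        for pid in range(PARTITION_COUNT)
    }


def handle_failure(table, dead, survivors):
    """Repair the table after one member leaves; survivors excludes the dead member."""
    healed = {}
    for pid, (primary, backup) in table.items():
        if primary == dead:
            primary = backup  # the backup already holds a copy, so no data is lost
        if backup in (dead, primary):
            candidates = [m for m in survivors if m != primary]
            backup = candidates[pid % len(candidates)] if candidates else None
        healed[pid] = (primary, backup)
    return healed


members = ["member-1", "member-2", "member-3"]
table = assign(members)
pid = partition_id("EUR/USD")
print("EUR/USD -> partition", pid, "owned by", table[pid])

healed = handle_failure(table, "member-2", ["member-1", "member-3"])
print("after failure:", healed[pid])
```

In this sketch, a failed primary is replaced by promoting its existing backup. The affected partitions therefore keep their data, and only a new backup copy needs to be placed.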

Around the cluster sit satellite Hazelcast Clients, which can be a mixture of .NET, Java, C++, REST, Python and Memcache clients. Notably, as of Hazelcast 3.6 there is a published, open-source binary protocol for communicating with Hazelcast Clusters, which allows clients to be developed for any platform.

Some of these satellite clients publish data into the cluster, while others are embedded within front-office trading systems and even directly in trading desktop GUIs. The clients can use the standard CRUD APIs via traditional data structures such as Maps, Sets and Lists. More powerful still, clients can observe changes to data in the cluster via Entry Listeners and Continuous Query Caches. Using these tools, a trader’s desktop can be updated in a few hundred microseconds.
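
To show how listener-driven updates and a continuous query cache fit together, here is a small in-process model. It has two parts: a map that notifies listeners on every change, and a local view that keeps only the entries matching a predicate. The class and method names are hypothetical and only mimic the concepts; Hazelcast’s own client APIs differ in their details.

```python
from typing import Any, Callable, Dict, List

Listener = Callable[[str, str, Any], None]  # (event_type, key, value)


class ObservableMap:
    """Hypothetical in-process stand-in for a distributed map that fires entry events."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self._listeners: List[Listener] = []

    def add_entry_listener(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def items(self):
        return list(self._data.items())

    def put(self, key: str, value: Any) -> None:
        event = "UPDATED" if key in self._data else "ADDED"
        self._data[key] = value
        for listener in self._listeners:
            listener(event, key, value)

    def remove(self, key: str) -> None:
        if key in self._data:
            value = self._data.pop(key)
            for listener in self._listeners:
                listener("REMOVED", key, value)


class ContinuousQueryCache:
    """Local copy of only the entries matching a predicate, kept current by events."""

    def __init__(self, source: ObservableMap, predicate: Callable[[Any], bool]) -> None:
        self._predicate = predicate
        self.entries = {k: v for k, v in source.items() if predicate(v)}
        source.add_entry_listener(self._on_event)

    def _on_event(self, event: str, key: str, value: Any) -> None:
        if event != "REMOVED" and self._predicate(value):
            self.entries[key] = value
        else:
            # Removed, or no longer matching: drop it from the local view.
            self.entries.pop(key, None)


prices = ObservableMap()
events = []
prices.add_entry_listener(lambda event, key, value: events.append((event, key)))
fx_view = ContinuousQueryCache(prices, lambda v: v["asset_class"] == "FX")

prices.put("EUR/USD", {"asset_class": "FX", "bid": 1.0849})
prices.put("UST10Y", {"asset_class": "Rates", "bid": 98.5})
prices.put("EUR/USD", {"asset_class": "FX", "bid": 1.0851})
print(events)
print(fx_view.entries)
```

A trader desktop holding a view like `fx_view` reads from local memory. It only receives the changes that match its predicate, not the whole data set.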

Finally, Hazelcast WAN Replication makes it possible to run a local cluster in each of the global trading centers while keeping all of the regional clusters in sync.
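
WAN replication can be pictured as a three-step process. Each region applies writes locally, queues them, and ships them asynchronously to the other regions, where a merge policy resolves conflicting writes. The sketch below assumes a last-update-wins policy based on timestamps, which is only one possible choice. `RegionalCluster` and its methods are hypothetical.

```python
from collections import deque


class RegionalCluster:
    """Hypothetical regional cluster storing key -> (timestamp, value)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data = {}
        self.outbound = deque()  # local writes waiting to be shipped over the WAN

    def put(self, key, value, ts: int) -> None:
        self._apply(key, value, ts)
        self.outbound.append((key, value, ts))

    def _apply(self, key, value, ts: int) -> None:
        current = self.data.get(key)
        # Assumed merge policy: the write with the later timestamp wins.
        # Equal timestamps keep the existing value; a real policy needs a tie-breaker.
        if current is None or ts > current[0]:
            self.data[key] = (ts, value)

    def ship_to(self, remote: "RegionalCluster") -> None:
        """Drain queued writes to a remote region; replicated writes are not re-queued."""
        while self.outbound:
            key, value, ts = self.outbound.popleft()
            remote._apply(key, value, ts)


london = RegionalCluster("london")
new_york = RegionalCluster("new-york")
london.put("EUR/USD", 1.0850, ts=1)
new_york.put("EUR/USD", 1.0852, ts=2)

# Replication runs asynchronously in both directions; here it is stepped by hand.
london.ship_to(new_york)
new_york.ship_to(london)
print(london.data, new_york.data)
```

After both queues drain, the two regions converge on the later write. Because replicated writes are applied without being re-queued, updates do not bounce back and forth between regions.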

Case Study 2: Banking Collateral Management System

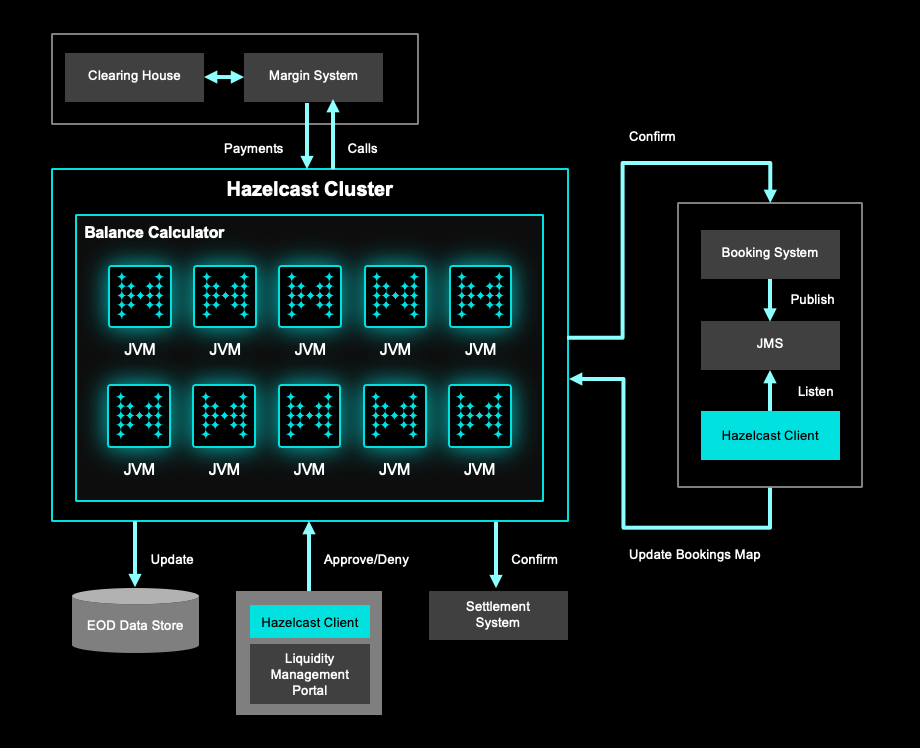

A major investment bank in New York is making use of Hazelcast’s rich event API to wire together the various systems that make up its collateral management process. Today Hazelcast sits at the center of an architecture that integrates Margin Systems, Settlement Systems, Booking Systems and Liquidity Management Portals.

Hazelcast Clients listen to JMS queues for booking messages and relay them to the Hazelcast Cluster by adding records to a bookings map. Each update to the bookings map triggers a host of callbacks throughout the central Hazelcast Cluster, which begin processing the various balances, also held in Hazelcast distributed maps. If a call for collateral is required, these actions can result in messages being written to the Margin System.
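
The booking flow can be sketched as a write to a bookings map followed by a callback. The callback updates balances and raises a margin call when posted collateral no longer covers the exposure. This single-process sketch is illustrative only: the class, the exposure arithmetic and the redelivery check are assumptions, and the JMS consumer is reduced to a plain method call.

```python
from collections import defaultdict


class CollateralEngine:
    """Hypothetical sketch of the booking -> balance -> margin-call flow."""

    def __init__(self, collateral_held):
        self.bookings = {}                       # stands in for the bookings map
        self.exposure = defaultdict(float)       # stands in for a balances map, per counterparty
        self.collateral = dict(collateral_held)  # collateral already posted, per counterparty
        self.margin_calls = []                   # stands in for messages to the Margin System

    def on_jms_message(self, booking_id, counterparty, amount):
        """Relay step: the client consuming the JMS queue writes the booking into the map."""
        if booking_id in self.bookings:
            return  # ignore redelivered messages so a booking is never counted twice
        self.bookings[booking_id] = (counterparty, amount)
        self._on_booking_added(counterparty, amount)

    def _on_booking_added(self, counterparty, amount):
        """Callback step: update the balance and check whether collateral still covers it."""
        self.exposure[counterparty] += amount
        shortfall = self.exposure[counterparty] - self.collateral.get(counterparty, 0.0)
        if shortfall > 0:
            self.margin_calls.append((counterparty, shortfall))


engine = CollateralEngine({"CP-A": 5_000_000.0})
engine.on_jms_message("B1", "CP-A", 3_000_000.0)
engine.on_jms_message("B2", "CP-A", 4_000_000.0)
engine.on_jms_message("B2", "CP-A", 4_000_000.0)  # redelivery of B2 is ignored
print(engine.margin_calls)
```

The duplicate check matters because message brokers may redeliver messages. Counting a booking twice would overstate exposure and could trigger a spurious collateral call.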

Other Hazelcast Clients are embedded in the Liquidity Management Portal GUI, which allows users to approve or reject bookings, collateral substitutions and similar requests. Once collateral has been satisfied against a booking, messages are sent from Hazelcast back to the Booking System and on to the Settlement System.

The Collateral System makes extensive use of Hazelcast’s ability to process many hundreds of thousands of messages per second and to reliably process events based on these transactions. Failing to deliver just one collateral message, or to update any of the data structures within the cluster, could lead to exposure or losses in the millions of dollars.

Case Study 3: Foreign Exchange Quotation Management System

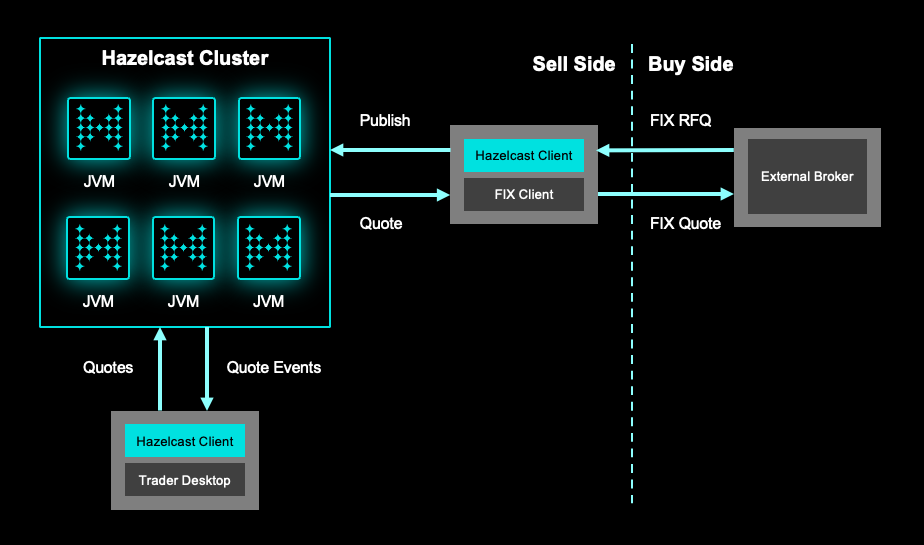

A major investment bank in London uses Hazelcast to manage its interactions with external foreign exchange brokerages, providing them with quotes from internal traders. The system has been in production for over three years and has delivered 99.9% uptime.

As with the collateral system described above, the Foreign Exchange Quotation Management System makes extensive use of Hazelcast Clients and of the event system that pushes cluster updates out to clients. In this system, Hazelcast Clients read inbound quote requests from external brokerages over the FIX protocol and store them in Hazelcast distributed maps.

Trader desktop GUIs have embedded Hazelcast .NET Clients that listen for updates to these quote maps and alert the trader on screen to each new request. Once the trader has provided a quote, it is saved in the Hazelcast Cluster and also passed back to the external brokerage.

Each quote is valid only for a limited period. To ensure that the quote is removed once this period ends, Hazelcast time-to-live (TTL) eviction is applied to the quote stored in the distributed map. Therefore, if a brokerage attempts to trade on an expired quote, the trade is rejected.
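
The TTL behavior can be modeled with a map that records an expiry time alongside each quote and treats expired entries as absent. The sketch below is a hypothetical, standard-library stand-in. It evicts lazily when an entry is read and takes an injectable clock so that expiry can be simulated. In Hazelcast’s Java API, a per-entry TTL can be supplied through the `IMap.put(key, value, ttl, timeUnit)` overload.

```python
import time


class QuoteMap:
    """Hypothetical map whose entries expire after a per-entry time-to-live (TTL)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable clock so expiry can be tested
        self._entries = {}   # quote_id -> (expires_at, quote)

    def put(self, quote_id, quote, ttl_seconds):
        self._entries[quote_id] = (self._clock() + ttl_seconds, quote)

    def get(self, quote_id):
        entry = self._entries.get(quote_id)
        if entry is None:
            return None
        expires_at, quote = entry
        if self._clock() >= expires_at:
            del self._entries[quote_id]  # evict lazily on access
            return None
        return quote


def accept_trade(quotes, quote_id):
    """Reject any trade that references an expired or unknown quote."""
    quote = quotes.get(quote_id)
    if quote is None:
        raise ValueError(f"quote {quote_id} has expired or does not exist")
    return quote


now = [0.0]
quotes = QuoteMap(clock=lambda: now[0])
quotes.put("Q1", {"pair": "EUR/USD", "price": 1.0850}, ttl_seconds=5)
accepted = accept_trade(quotes, "Q1")  # within the TTL: accepted

now[0] = 6.0  # simulate the TTL elapsing
try:
    accept_trade(quotes, "Q1")
    rejected = False
except ValueError:
    rejected = True
print(accepted, rejected)
```

The trade check relies only on whether the quote is still present. Because expiry makes the entry disappear, no separate validity timestamp needs to be compared at trade time.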